The Problem: The High Cost of Experimentation Amnesia

In digital optimization, we often obsess over velocity—how fast can we test? But this focus masks a deeper, more expensive problem: Experimentation Amnesia.

At AB Tasty, an analysis of over 1.5 million campaigns revealed a startling trend. While thousands of tests are launched daily, the specific context—why a test won, what surprised us, and the strategic lesson learned—often evaporates the moment the campaign ends.

It vanishes into a 250-slide PowerPoint deck that no one opens again. It disappears into a Slack thread. Or, most painfully, it walks out the door when your CRO Manager or agency partner moves on to their next opportunity.

If you are running tests but not archiving the insights in a retrievable way, you aren’t building a program; you’re just running in circles. It’s time to shift your focus from Execution to Knowledge Management.

The Hidden Cost of “One-and-Done” Testing

The digital industry is notorious for its high turnover. On average, internal digital teams change every 18 months and agencies rotate every two years.

In traditional workflows, knowledge is tied to people, not platforms. When a key manager leaves, they take their “mental hard drive” with them.

This is the “Knowledge Drain.” It is the silent budget killer of CRO programs.

Every time you repeat a test because you couldn’t find the previous results, you are paying twice for the same insight. Every time you lose the context of a winning test (i.e., you know that it won, but not why), you lose the ability to iterate on it and build on those gains.

This is why the most mature experimentation teams are moving away from simple testing tools and adopting Program Management platforms that secure their knowledge.

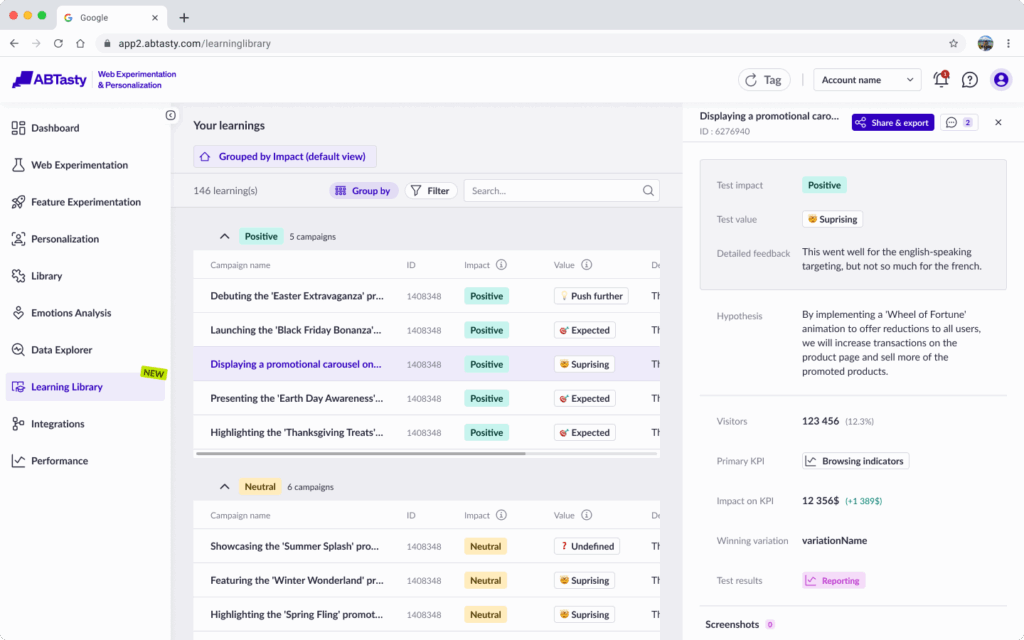

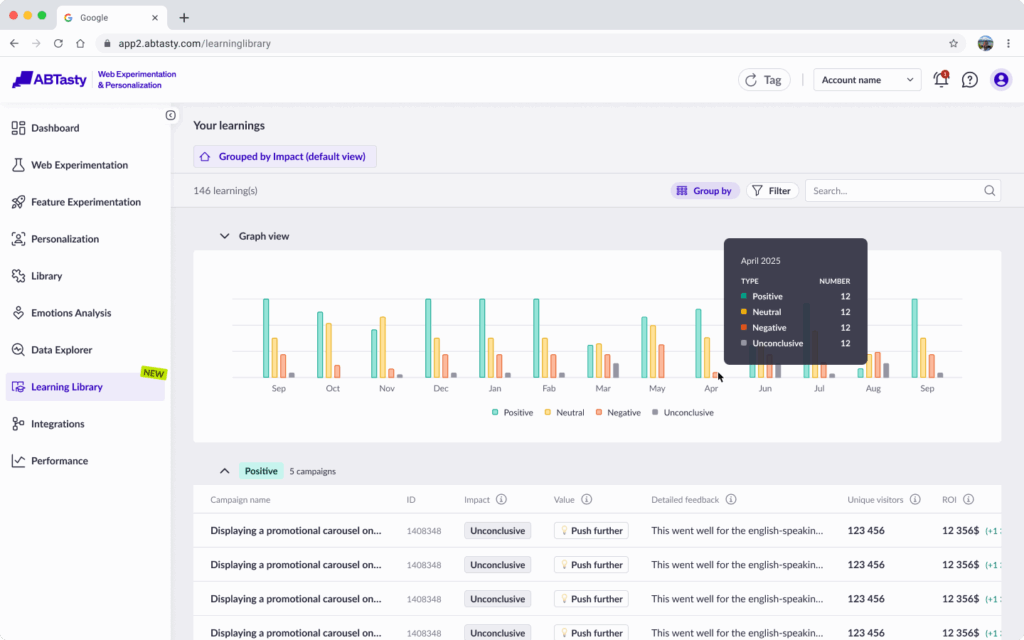

The Solution? AB Tasty’s new Learnings Library.

We designed this feature to serve as a centralized, searchable repository that lives directly where your experiments do. It acts as the institutional memory of your digital team, ensuring that every test—whether a massive win or a “flat” result—contributes to a permanent asset library.

Context is King: Why AI Can’t Replace the Human “Why”

In an era where everyone is rushing to automate everything with AI, you might ask: “Why can’t an AI just write my test conclusions?”

While AI is powerful for analyzing raw numbers, it lacks business context. An AI can tell you that “Variation B increased transactions by 12%.” But it cannot tell you why that matters to your strategy.

- Was that 12% expected?

- Was it a shocking surprise that disproved a long-held internal belief?

- Did it cannibalize another product line?

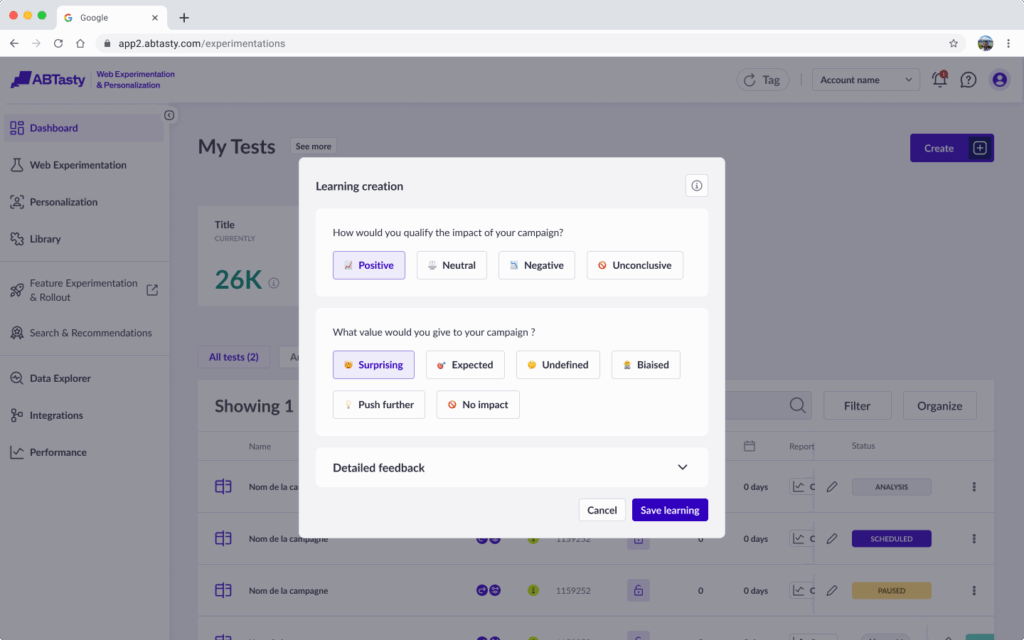

AB Tasty’s Learnings Library is designed to capture Qualitative Intelligence. It prompts your team to manually qualify results with human tags like “Surprising” or “Expected.” It asks for the narrative behind the numbers.

This human layer is critical. A “failed” test (one that produced no uplift) is often more valuable than a win, provided you document the lesson. By recording, “We learned that our users do not care about social proof on the cart page,” you create a defensive asset. You prevent future teams from wasting budget on that specific hypothesis again.

Visual History: The Power of “Before and After”

One of the biggest friction points in reporting is visual documentation. How much time does your team spend taking screenshots, cropping them, pasting them into PowerPoint, and trying to align the “Control” vs. “Variation” images?

Our Learnings Library removes this friction. Upload your screenshots, and it automatically generates a Comparison View: a visual “Before and After” slide that lives alongside the data.

This visual history is vital for continuity. Two years from now, a spreadsheet number won’t spark inspiration. But seeing the exact design that drove a 20% increase in conversions? That is instant clarity for a new Designer, Developer, or Strategist.

Conclusion: Stop Renting Your Insights

If your testing history lives in the heads of your employees or on a local hard drive, you are effectively “renting” your insights. The moment that employee leaves, the lease is up, and you are back to square one.

It is time to own your knowledge.

Don’t let your next great insight slip through the cracks. Start building your library today.

FAQs: Learnings Library

What is AB Tasty’s Learnings Library?

Our Learnings Library is a centralized digital repository that archives the results, visual history, and strategic insights of every A/B test run by an organization. Unlike static spreadsheets, it connects data (uplift/downlift) with qualitative context (hypotheses and observations), transforming individual test results into a permanent, searchable company asset.

How does staff turnover impact A/B testing ROI?

Staff turnover creates a “Knowledge Drain.” When optimization managers leave without a centralized system of record, they take valuable historical context with them. This forces new hires to “restart” the learning curve, often leading to redundant testing (paying for the same insight twice) and a slower velocity of innovation.

Should I document “failed” or inconclusive A/B tests?

Yes. A “failed” test is only a failure if the lesson is lost. Documenting inconclusive or negative results creates “defensive knowledge,” which prevents future teams from wasting budget on the same disproven hypotheses. A robust Learnings Library treats every result as a data point that refines your understanding of your customers.

How do I stop my team from re-running the same A/B tests?

The most effective way to prevent redundant testing is to implement a searchable timeline of experiments that includes visual evidence (screenshots of the original vs. the variation). This allows any team member to instantly verify whether an idea has been tested before, under what conditions, and with what outcome.

What is the best platform for scaling a CRO program?

Scaling a program isn’t just about running more tests; it’s about running smarter tests. Unlike competitors that focus on “gadget” features (like AI text generation), AB Tasty invests in Program Management infrastructure. By combining execution with a native Knowledge Management system, AB Tasty allows your program to compound its value over time, rather than resetting every year.