What is A/B testing?

A/B testing, also known as split testing, is the process of comparing two different versions of a website or feature to see which one performs better.

The two versions, A and B, are presented to users at random: one portion of users is directed to the first version and the rest to the second.
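As a rough illustration of how this random split can work in practice, here is a minimal Python sketch. It assumes each user has a stable identifier and that a 50/50 split is wanted; the function name `assign_variant` and the experiment key are hypothetical, not part of any specific tool. Hashing the identifier, rather than drawing a fresh random number on each visit, means a returning user keeps seeing the same version.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "product-page-buttons") -> str:
    """Return "A" or "B" for a user; the same user always gets the same answer."""
    # Hash the experiment name together with the user ID so that different
    # experiments split the same audience independently of each other.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # both counts should be close to 5,000
```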

The results of each variation are then gathered and analyzed statistically to see which one performs better; for example, which one received more clicks or a higher conversion rate. This lets you check the hypothesis you constructed at the beginning of the experiment: the results of your A/B test will lead you to accept or reject it.

An A/B test can compare two very similar versions that differ by only a small change, yet that change could end up making a huge difference, for example, in terms of user engagement and conversion rates.

Let’s take a look at an example…

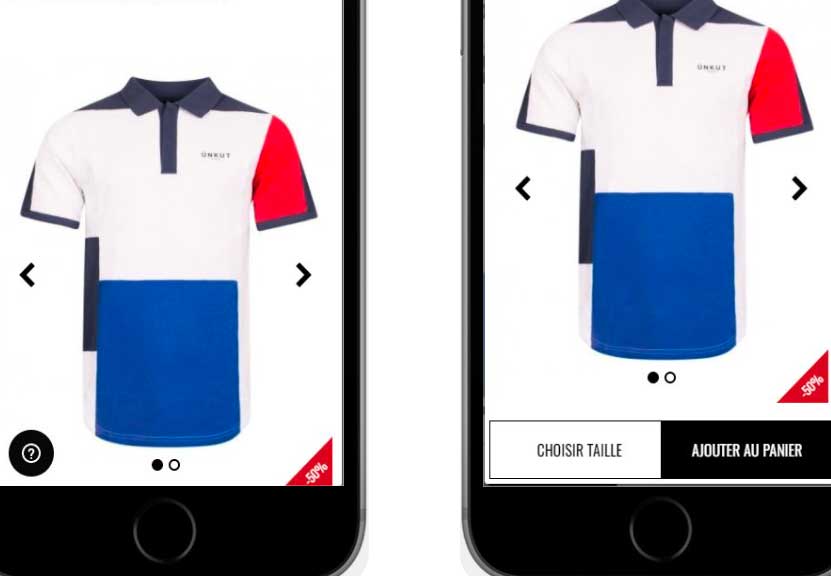

Imagine we want to test whether adding buttons such as ‘add to cart’ and ‘choose size’ for easier navigation, as in the image on the right (the ‘experiment version’), will result in more clicks and purchases than the image on the left (the control version).

This assumption, made before launching the variations, is referred to as the ‘hypothesis’. The variation you create is based on this hypothesis and is tested against the control.

Data will then be gathered to support or reject this hypothesis and to conclude which version improves your business metrics. In other words, you will be able to measure, with statistical significance, which set of users had a higher conversion rate depending on the page they were directed to.

Types of A/B tests

There are various types of A/B tests, which are chosen depending on your particular needs and goals:

- Classic A/B test – this test shows variations of your page at the same URL to compare several versions of the same element.

- Split test – the terms A/B test and split test are sometimes used interchangeably, but there is one major difference: in a split test, the changed variation lives on a different URL, so traffic is divided between the control on the original URL and the variations on a different URL (this new URL is hidden from users).

- Multivariate test (MVT) – this test measures the impact of several changes on the same web page to deduce which combination of variables performs best out of all the possible combinations. This test is generally more complicated to run than the others and requires a significant amount of traffic.

A/B testing statistics

A/B tests are based on statistical methods. Thus, it’s important to understand which statistical approach to take to run an A/B test and draw the right conclusions to help you meet your business metrics.

Which statistical model should you choose?

There are generally two main approaches used by A/B testers when it comes to statistical significance: Frequentist and Bayesian tests.

The Frequentist approach is based on the observation of data at a given moment: analysis of results can only occur at the end of the test. A Bayesian approach, by contrast, is deductive and works more like a forecast, so it lets you analyze results before the end of the test.

In this sense, when you design an A/B test with a Frequentist approach, you pick the winning variation based solely on the results of that one experiment. A Bayesian approach, on the other hand, takes the information collected from previous experiments, combines it with the data from the new test, and draws a conclusion accordingly.
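To make the Bayesian idea more concrete, here is a minimal sketch of one common way to do it: a Beta-Binomial model, where a Beta prior stands in for what previous experiments suggested and the new test's conversions update it. This is an illustrative assumption, not the method of any particular testing platform; the function name `prob_b_beats_a` and its parameters are hypothetical. It estimates the probability that variation B's true conversion rate is higher than A's by sampling from both posteriors.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b,
                   prior_alpha=1.0, prior_beta=1.0,
                   draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) under a Beta-Binomial model.

    prior_alpha / prior_beta encode prior knowledge, for example
    conversions and non-conversions seen in earlier experiments.
    The default (1, 1) is a flat prior, meaning no prior knowledge.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each variation: Beta(prior + successes, prior + failures)
        rate_a = rng.betavariate(prior_alpha + conv_a, prior_beta + n_a - conv_a)
        rate_b = rng.betavariate(prior_alpha + conv_b, prior_beta + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Made-up numbers: 200 of 5,000 visitors converted on A, 260 of 5,000 on B.
    print(prob_b_beats_a(200, 5000, 260, 5000))
```

Because the posterior can be recomputed at any point as data arrives, this kind of estimate can be read before the test ends, which matches the forecasting character described above.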

For a deeper understanding and for more on the differences between these two approaches, check out this Frequentist vs Bayesian article.

In order to analyze the results with a sufficient degree of statistical confidence, a threshold of 95% is generally adopted. This corresponds to a significance level of 0.05: a result is called significant when its p-value is below 0.05. The goal is to make sure you collect enough data to be able to confidently make predictions based on the results. In other words, make sure the web pages you’re testing have high enough traffic.

Statistical significance indicates that a difference as large as the one observed would be highly unlikely if the control and variation truly performed the same, so the result is attributed to a specific cause or trend rather than to chance.

The confidence level is chosen before the experiment. At 95%, a test comparing two versions that truly perform the same would wrongly declare a difference only about 5% of the time.
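As a hedged illustration of how a 95% threshold is applied, the sketch below runs a two-proportion z-test on the conversion counts of a control and a variation. This is one common Frequentist check, chosen here as an assumption; the function name and the sample numbers are made up for the example.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if both versions performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 200 of 5,000 visitors converted on A, 260 of 5,000 on B.
    z, p = two_proportion_z_test(200, 5000, 260, 5000)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
    print("significant at 95%" if p < 0.05 else "not significant at 95%")
```

With low traffic the standard error stays large, so even a real difference may not reach the threshold. That is why the pages under test need enough visitors.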

Release only your best features with A/B testing

A/B testing is a great form of experimentation. A/B testing with feature flags is even better.

Feature flags are a software development tool that can be used to enable or disable functionality without deploying code. They have many uses, which we’ve already covered extensively. One such use-case is running experiments with A/B tests.

A/B testing is typically used for front-end testing on your website or application, and classic A/B tests are good enough for ‘cosmetic’ changes. But A/B testing can go deeper, allowing you to also test your product features. So what happens when you want to test back-end functionality and new features?

Enter A/B tests driven by feature flags. This means you can test out your functional features and then target users by dividing them into various segments. We will go into detail about server-side testing in the next section.

Here, you define variations and assign a percentage of traffic to each one. Each variation is a combination of flag values, so you can take any of the flags available in your system and combine them to test how your users respond to the different combinations.
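The idea of variations built from flag combinations can be sketched as follows. This is a minimal example under stated assumptions: the flag names (`new_checkout`, `one_click_pay`), the `Variation` class and the `pick_variation` function are all hypothetical, and a real feature flag platform would manage this configuration for you.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Variation:
    name: str
    percent: int  # share of traffic; all variations must sum to 100
    flags: dict   # flag name -> flag value served in this variation


# Hypothetical experiment: three variations built from two flags.
VARIATIONS = [
    Variation("control", 50, {"new_checkout": False, "one_click_pay": False}),
    Variation("checkout_only", 25, {"new_checkout": True, "one_click_pay": False}),
    Variation("checkout_and_pay", 25, {"new_checkout": True, "one_click_pay": True}),
]


def pick_variation(user_id, experiment, variations):
    """Map a user to one variation according to the configured percentages."""
    if sum(v.percent for v in variations) != 100:
        raise ValueError("variation percentages must sum to 100")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # a number from 0 to 99
    upper = 0
    for variation in variations:
        upper += variation.percent
        if bucket < upper:
            return variation
    raise AssertionError("unreachable when percentages sum to 100")


if __name__ == "__main__":
    chosen = pick_variation("user-42", "checkout-test", VARIATIONS)
    print(chosen.name, chosen.flags)
```

Your application code then reads `chosen.flags` to decide which behavior to serve, while the experiment records which variation each user saw.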

By delivering different, simultaneous versions of your feature to predetermined portions of traffic, you can observe and use the data extracted to determine which version is better.

Feature flags or toggles used in this way are also called experiment toggles. These are highly dynamic and are likely to remain in the code for a few days or weeks, long enough to generate the statistically significant results required for A/B tests.

Server-side vs client-side A/B tests

Furthermore, one important distinction we must make is between client and server-side testing.

While client-side testing happens only at the level of the web browser so that you’re constrained to testing visual or structural variants of your website, server-side testing occurs before HTML pages are even rendered by the user’s browser.

Working server-side means you can run optimization campaigns on the back-end architecture directly from within the code. This has significant consequences.

Client-side testing places a cookie in your browser to identify you, so when you go back to the site, it remembers who you are and shows you the same version of the site you saw the first time. In a world where the trend is to go cookieless, this can be challenging.

Performance is another issue.

Client-side testing relies on JavaScript snippets that need to be fetched from a third-party provider and executed to apply modifications. This can lead to flickering, also called FOOC (Flash of Original Content), which can damage both the user experience and the validity of the experiment. Read all about how to avoid the flickering effect.

Server-side testing, on the other hand, keeps track of users through unique user IDs that you manage on the back-end however you prefer (for example, a user database for logged-in users, the session ID of a visit, or cookies as a last resort).

For a long time, marketing teams have relied on client-side testing to run experiments on a website’s front-end features. However, there is no denying that web applications have increased in complexity, which has necessitated more sophisticated approaches to testing, mainly focused on the back-end.

This is where server-side testing becomes indispensable as it widens the possibilities of experimentation.

What is server-side testing?

Server-side testing refers to any type of testing, usually A/B testing, that occurs directly on the web server instead of the user’s browser.

In contrast, client-side testing is where experimentation occurs on the client side, through JavaScript that runs in the browser to optimize the customer experience.

Therefore, in server-side testing, all the work happens at the server level instead of the browser.

Client-side solutions usually don’t require any technical expertise since they generate the experiment code themselves. Therefore, they are particularly popular with marketing teams for conversion rate optimization purposes.

Meanwhile, server-side solutions need technical and coding skills as you will need to incorporate experiments into your code and deploy them.

This doesn’t mean that marketing and product teams cannot make use of such solutions. Non-technical teams would define the experiments in server-side tests and then the engineers can execute them, promoting collaboration and a truly agile workflow. The experiments can then be monitored and analyzed through a dashboard.

Benefits of server-side testing

Since experiments are not rendered in the browser, the experiment is less noticeable on the user side. Page load time suffers minimal impact, and you avoid the so-called ‘flicker effect’, where the original page loads and is then visibly replaced by the test variation.

Furthermore, with server-side testing, your tech team has full control over the server-side code so they can build experiments without any constraints.

One of the most important benefits is that while client-side testing can only help you test the look and feel of your website, server-side testing allows you to test anything on the back-end of your application, such as algorithms and back-end logic.

Finally, server-side testing is not just limited to testing websites but it also enables you to carry out omnichannel experiments such as on mobile apps and email. Thus, there’s no limit to the devices and platforms you can test.

Use cases for server-side testing

Working server-side allows you to run much more sophisticated tests.

These advanced experimentation capabilities go beyond UI or cosmetic changes, which revolve around the design or placement of key elements on a web page. They also go beyond website testing and unlock a whole new world of experimentation so you can optimize your products or mobile apps.

Testing mobile apps

With client-side testing, you’re limited to devices that have a default web browser; thus, it is impossible to run A/B tests on native mobile apps or even connected devices. With a server-side solution, however, you can deploy A/B tests as soon as the device is connected to the Internet and you can match the identity of the consumer.

Running server-side experiments using feature flags allows you to run multiple experiments and roll out multiple versions of your mobile app to measure the impact of a change or update to meet customer demands.

In this way, you will be able to determine for a given feature what the impact would be on your users and revenue as you can quickly test new features and modify ones that don’t work.

Remote config implemented through feature flags enables you to upgrade your app continuously, in real time, based on feedback from your users without waiting on app store approval.

Testing algorithm efficiency

As already mentioned, server-side A/B tests are efficient for testing deeper level modifications related to the backend and the architecture of your website.

For example, you may want to test different product recommendation algorithms, which aim to convert visitors into customers by suggesting products that might interest them. In this scenario, you can test multiple algorithms to investigate which one leads to higher conversions and revenue.
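A server-side test of recommendation algorithms might look roughly like the sketch below. Everything in it is illustrative: the tiny catalog, the two toy algorithms and the function names are assumptions made for the example, and real recommendation logic would be far richer. The point is only that the server picks the algorithm per user before anything reaches the browser.

```python
import hashlib

# Hypothetical catalog used only for this example.
CATALOG = [
    {"id": "p1", "category": "shoes", "sales": 120},
    {"id": "p2", "category": "shoes", "sales": 45},
    {"id": "p3", "category": "hats", "sales": 300},
    {"id": "p4", "category": "hats", "sales": 10},
]


def recommend_popular(viewed_id, limit=2):
    """Algorithm A: best sellers across the whole catalog."""
    others = [p for p in CATALOG if p["id"] != viewed_id]
    others.sort(key=lambda p: p["sales"], reverse=True)
    return [p["id"] for p in others[:limit]]


def recommend_same_category(viewed_id, limit=2):
    """Algorithm B: best sellers in the same category as the viewed product."""
    viewed = next(p for p in CATALOG if p["id"] == viewed_id)
    same = [p for p in CATALOG
            if p["category"] == viewed["category"] and p["id"] != viewed_id]
    same.sort(key=lambda p: p["sales"], reverse=True)
    return [p["id"] for p in same[:limit]]


ALGORITHMS = {"A": recommend_popular, "B": recommend_same_category}


def recommendations_for(user_id, viewed_id):
    """Choose the algorithm on the server, based on a stable hash of the user ID."""
    digest = hashlib.sha256(f"reco-test:{user_id}".encode("utf-8")).hexdigest()
    variant = "A" if int(digest[:8], 16) % 2 == 0 else "B"
    return variant, ALGORITHMS[variant](viewed_id)


if __name__ == "__main__":
    variant, products = recommendations_for("user-1", "p1")
    print(variant, products)
```

Recording the returned variant alongside each purchase is what later lets you compare conversions and revenue per algorithm.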

Server-side testing can also be used to run tests on search algorithms on a website. This can be challenging to run through client-side solutions as search pages are based on the search query and so they are rendered dynamically.

Thus, server-side testing offers more comprehensive testing and allows you to experiment with multiple algorithms by modifying the existing code.

Testing pricing methods

Server-side testing allows you to test complex or significant changes, such as a pricing model or a pricing change, without relying on JavaScript. For example, you can test different shipping costs, which are usually calculated server-side.

You can also use feature flags to test out a new payment method on a subset of users and see how they adapt before rolling it out to everyone else.

When dealing with such sensitive data, server-side testing is the way to go, since the pricing logic and data stay on the server rather than being exposed in the browser.

Server- vs client-side testing

Client-side testing can be quite beneficial to teams because it’s so easy to set up, particularly for non-technical teams. It also helps you gain valuable insights in order to customize and optimize the user experience while investing little time and money.

Up to a certain point, client-side testing may be sufficient, especially for testing small changes such as the visual aspects of your website. After some time, however, as your application becomes more advanced, you may want to run more in-depth tests.

Server-side testing is useful for running more sophisticated experiments since the implementation is more direct, allowing you to test across the full stack. With this type of testing, you can delve deeper into experiments that explore how a product works.

Whatever method of testing you decide to use will ultimately depend on your objectives and use cases. You can even use both together to heighten productivity and achieve maximum impact.

For example, AB Tasty offers a client-side feature that helps marketers optimize conversions as well as a server-side feature that you can use to enhance the functionality of your products and/or services.

For more of an in-depth look at both types of testing and their pros and cons, check out our article on how to drive digital transformation with client- and server-side optimization.

Using feature flags to conduct server-side testing

Feature flags are a software development tool that allows you to decouple deployment from feature release making releases quicker and less risky.

Therefore, with feature flags you can conduct server-side A/B tests by rolling out new features to a small group of users. You can then measure their performance on this group of users before rolling out to everyone else.
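A gradual rollout like this can be sketched with a percentage-based flag check. This is a minimal example, assuming stable user IDs; the function name `is_enabled` and the flag name are hypothetical and do not reflect any specific vendor's SDK. Because each user's bucket is fixed, raising the percentage only adds users: nobody who already has the feature loses it.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if the flag is on for this user at the given rollout level."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # fixed number from 0 to 99 per user and flag
    return bucket < rollout_percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1_000)]
    for percent in (10, 50, 100):
        enabled = sum(is_enabled("new-payment-method", u, percent) for u in users)
        print(f"{percent}% rollout -> feature on for {enabled} of {len(users)} users")
```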

If you want to try your hand at server-side testing, AB Tasty offers server-side experimentation that lets you run safe experiments on your features by setting up user targeting and KPIs to track, putting you on the road to quicker and safer releases.