Picking an effective deployment strategy is an important decision for every DevOps team. Many options exist, and you want to find the strategy that best aligns with how you work. Today, we’ll go over canary deployments.

Are you an agile organization? Are you performing continuous integration and continuous delivery (CI/CD)? Are you developing a web app? Mobile app? Local desktop or cloud-based app? These factors, and many others, will determine how effective any given deployment strategy will be.

But no matter which strategy you use, remember that deployment issues will be inevitable. A merge may go wrong, bugs may appear, human error may cause a problem in production. The point is, don’t wear yourself out trying to find a deployment strategy that will be perfect. That strategy doesn’t exist.

Instead, try to find a strategy that is highly resilient and adaptive to the way you work. Rather than trying to prevent every error, deploy code in a way that minimizes errors and allows you to respond quickly when they do occur.

Canary deployments can help you put your best code into production as efficiently as possible. In this article, we’ll go over what they are and what they aren’t. We’ll go over the pros and cons, compare them to other deployment strategies, and show you how you can easily begin performing such deployments with your team.


What is a canary deployment?

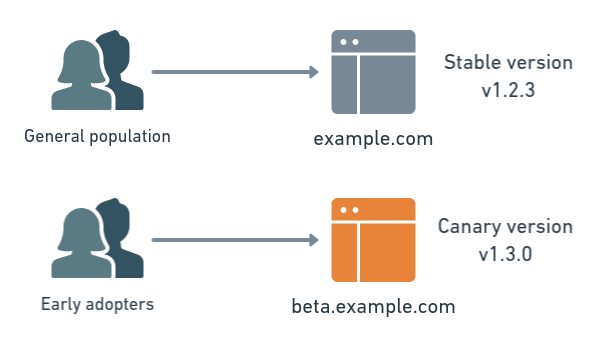

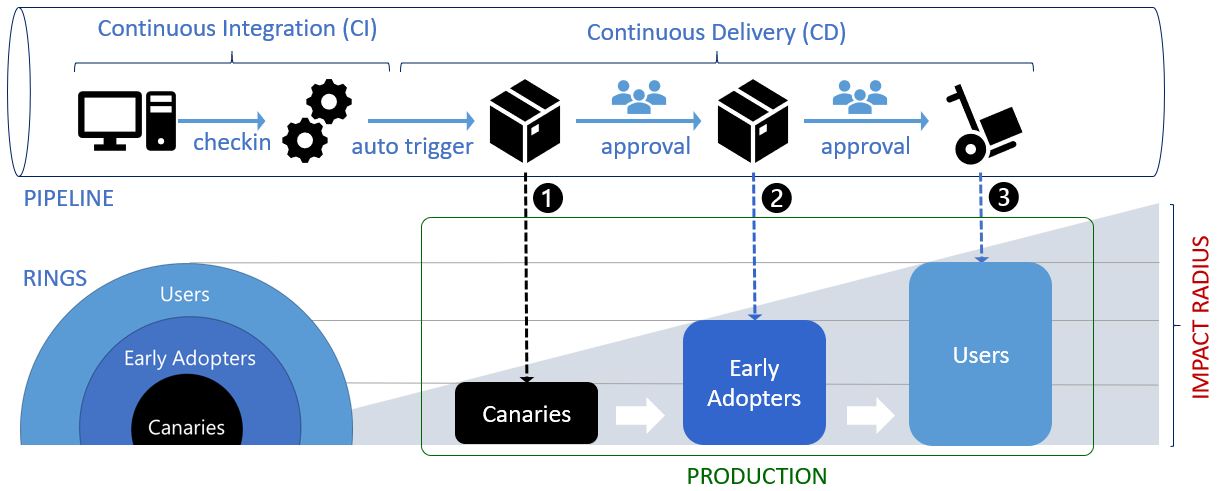

Canary deployments are a best practice for teams who’ve adopted a continuous delivery process. With this strategy, a new feature is first made available to a small subset of users. The new feature is monitored for several minutes to several hours, depending on the traffic volume, or just long enough to collect meaningful data. If the team identifies an issue, the new feature is quickly pulled. If no problems are found, the feature is made available to the entire user base.

The term “canary deployment” has a fascinating history. It comes from the phrase “canary in a coal mine,” which refers to the historical use of canaries and other small songbirds as living early-warning systems in mines. Miners would bring caged birds with them underground. If the birds fell ill or died, it was a warning that odorless toxic gases, like carbon monoxide, were present. While inhumane, it was an effective process used in Britain and the US until 1986, when electronic sensors replaced canaries.

A canary deployment turns a subset of your users (ideally a bug-tolerant subset) into your own early warning system. That user group identifies bugs, broken features, and unintuitive features before your software gets wider exposure.

Your canary users could be self-identified early adopters, a demographically targeted segment, or a random sampling. Choose whichever mix of users makes the most sense for verifying your new feature in production.

One helpful way to think about canary deployments is risk management. You are free to push new, exciting features more regularly without having to worry that any one new feature will harm the experience of your entire user base.

Canary releases vs. canary deployments

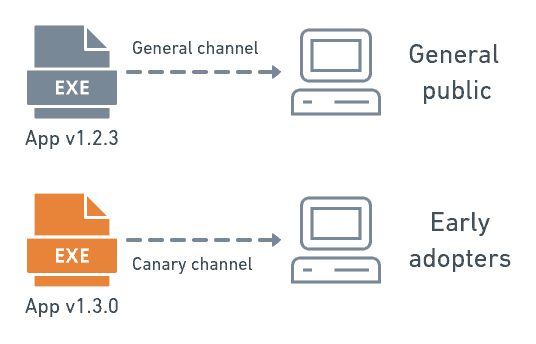

The phrases “canary release” and “canary deployment” are sometimes used interchangeably, but in DevOps, they really should be thought of as separate. A canary release is a test build of a complete application. It could be a nightly release or a beta, for example.

Teams will often distribute canary releases hoping that early adopters and power users, who are more familiar with development processes, will download the new application for real-world testing. The browser teams at Mozilla and Google, and many other open-source projects, are fond of this release strategy.

On the other hand, canary deployments are what we described earlier. A team will release new features into production with early adopters or different user subsets, routed to the new software by a load balancer or feature flag. Most of the user base still sees the current, stable software.

Canary deployment pros and cons

Canary deployments can be a powerful and effective release strategy. But they’re not the correct strategy in every possible scenario. Let’s run through some of the pros and cons so you can better determine whether they make sense for your DevOps team.

Pros

Support for CI/CD processes

Canary deployments shorten feedback loops on new features delivered to production. DevOps teams get real-world usage data faster, which allows them to refine and integrate the next round of features faster and more effectively. Shorter development loops like this are one of the hallmarks of continuous integration/continuous delivery processes.

Granular control over feature deployments

If your team conducts smaller, regular feature deployments, you reduce the risk of errors disrupting your workflow. If you catch a mistake in the deployment, you won’t have exposed many users to it, and it will be a minor matter to resolve. You avoid exposing your entire user population and having to pull colleagues off planned work to fix a major production issue.

Real-world testing

Internal testing has its place, but it is no substitute for putting your application in front of real-world users. Canary deployments are an excellent strategy for conducting small-scale real-world testing without imposing the significant risks of pushing an entirely new application to production.

Quickly improve engagement

Besides offering better technical testing, canary deployments allow you to quickly see how users engage with your new features. Are session lengths increasing? Are engagement metrics rising in the canary? If no bugs are found, get that feature in front of everyone.

There is no need to wait for a more extensive test deployment to complete. Engage those users and get iterating on your next feature.

More data to make business cases

Developers may see the value in their code, but DevOps teams still need to make business cases to leadership and the broader organization when they need more resources.

Canary deployments can quickly show you what demand might be for new features. Conduct a deployment for a compelling new feature on a small group of influencer users to get them talking. Use engagement and publicity metrics to make the case for a major new initiative tied to that feature.

Stronger risk management

Canary deployments are effectively a series of microtests. Rolling out new features incrementally and verifying them one at a time with canary testing can significantly reduce the total cost of errors or more significant system issues. You are far less likely to have to roll back a major release, suffer a PR hit, or rework a large and unwieldy codebase.

Cons

More overhead

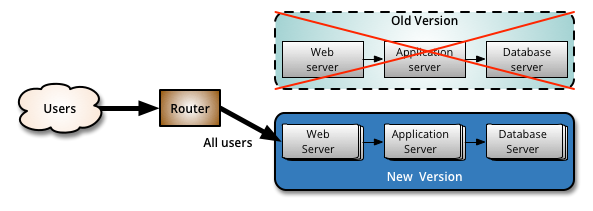

Like any complex process, canary deployments come with some downsides. If you’re going to use a load balancer to partition users, you will need additional infrastructure and will have to take on some additional administration.

In this scenario, you create a second production environment and backend that will run alongside your primary environment. You will have two codebases, two app servers, potentially two web servers, and networking infrastructure to maintain.

Alternatively, many DevOps teams use feature flags to manage their canary deployments on a single system. A feature flag can partition users into a canary test at runtime within a single code base. Canary users see the new feature, and everyone else runs the existing code.

Deploying local applications is hard

If you’re developing a locally installed application, you run the risk of users needing to initiate a manual update to get the latest version of your software. If your canary deployment sits in that latest update, your new feature may not get installed on as many client systems as you need to get good test results.

In other words, the more your software runs client-side, the less amenable it is to canary deployments. A full canary release might be a more suitable approach to get real-world test results in this scenario.

Users are still exposed to software issues

While the whole point of a canary deployment is to expose only a few users to a new feature to spare the broader user base, you will still expose end users to less-tested code. If the fallout from even a few users encountering a problem with a particular feature is too significant, then consider skipping this kind of deployment in favor of more rigorous internal testing.

How to perform a canary deployment

Planning out a canary deployment takes a few simple steps:

Identify your canary group

There are several different ways you can select a user group to be your canary.

Random subset

Pick a truly random sampling of different users. While you can do this with a load balancer, feature flag management software can easily route a certain percentage of total traffic to a canary test using a simple modulo.
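As a rough illustration of the "simple modulo" idea, the sketch below hashes a user ID into one of 100 buckets and treats the lowest buckets as the canary. The function names, the flag name, and the choice of SHA-256 are assumptions made for this example, not the way any particular feature flag product works.

```python
import hashlib


def bucket_for(user_id: str, flag_name: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) with a hash and a modulo."""
    # Including the flag name means a user is not always in every canary.
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def in_canary(user_id: str, flag_name: str, percent: int) -> bool:
    """Return True when the user's bucket falls inside the canary percentage."""
    return bucket_for(user_id, flag_name) < percent


if __name__ == "__main__":
    # Hypothetical user IDs, used only to show the split is close to 5 percent.
    users = [f"user-{n}" for n in range(10_000)]
    share = sum(in_canary(u, "new-feature", 5) for u in users) / len(users)
    print(f"canary share: {share:.1%}")
```

Hashing the ID, rather than drawing a fresh random number on every request, keeps each user in the same group for the whole test.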

Early adopters

If you run an early adopter program for highly engaged users, consider using them as your canary group. Make it a perk of their program. In exchange for tolerating bugs they might encounter in a canary deployment, you can offer them loyalty rewards.

By region

You might want to assign a specific region to be your canary. For example, you could set European IPs during late evening hours to go to your canary deployment. You would avoid exposing daytime users to your new features but still get a handful of off-hours user sessions to use as a test.
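A regional rule like this one might look roughly as follows. The region code, the hour window, and the assumption that a geo-IP lookup has already produced the session's region and local hour are all hypothetical.

```python
# Hypothetical values: adjust the regions and the window to your own traffic.
CANARY_REGIONS = {"EU"}
CANARY_START_HOUR = 22  # 10 p.m. local time
CANARY_END_HOUR = 2     # 2 a.m. local time; the window wraps past midnight


def in_canary_window(local_hour: int) -> bool:
    """True for hours inside the late-evening window, including after midnight."""
    return local_hour >= CANARY_START_HOUR or local_hour < CANARY_END_HOUR


def route_to_canary(region: str, local_hour: int) -> bool:
    """Send a session to the canary only for canary regions during off-hours."""
    return region in CANARY_REGIONS and in_canary_window(local_hour)
```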

Internal testers

You can always configure sessions from your internal subnets to be the canary.
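One way to sketch this check uses Python's standard `ipaddress` module. The subnets listed here are placeholder private ranges; substitute the ranges your organization actually uses.

```python
import ipaddress

# Placeholder private ranges standing in for your internal subnets.
INTERNAL_SUBNETS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_internal(client_ip: str) -> bool:
    """Return True when the client IP belongs to one of the internal subnets."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Unparseable addresses are treated as external, so they stay on stable.
        return False
    return any(address in subnet for subnet in INTERNAL_SUBNETS)
```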

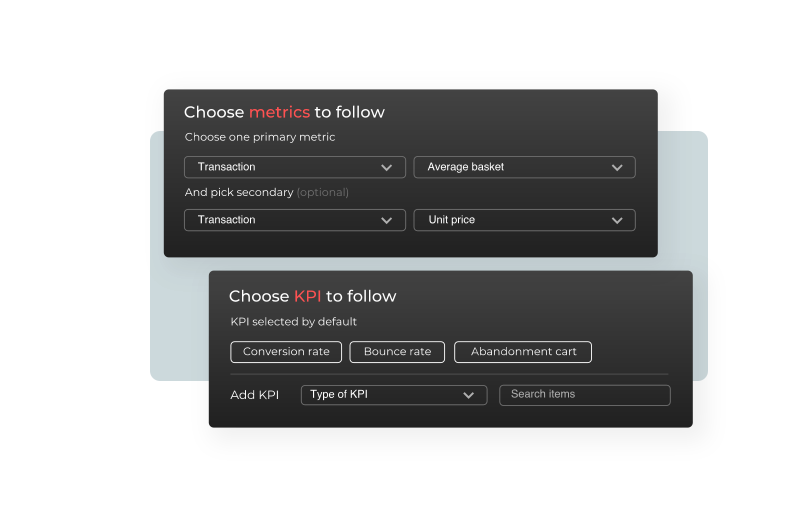

Decide on your canary metrics

The purpose of conducting a canary deployment is to get a firm “yes” or “no” answer to the question of whether your feature is safe to push into wider production. To answer that question, you first need to decide what metrics you’re going to use and install the means for monitoring performance.

For example, you may decide you want to monitor:

- Internal error counts

- CPU utilization

- Memory utilization

- Latency

You can customize feature management software quickly and easily to monitor performance analytics. These platforms can be excellent tools for encouraging a culture of experimentation.
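To make the "yes" or "no" decision concrete, here is a minimal sketch that compares canary metrics against the stable baseline. The metric fields mirror the list above, but the tolerances and names are assumptions chosen for illustration; your own thresholds will depend on your service.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    error_count: int
    requests: int
    cpu_percent: float
    memory_percent: float
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.error_count / self.requests if self.requests else 0.0


# Hypothetical tolerances for this example only.
MAX_ERROR_RATE_INCREASE = 0.005  # absolute increase in error rate
MAX_RELATIVE_INCREASE = 0.10     # 10 percent for CPU, memory, and latency


def canary_is_healthy(baseline: Metrics, canary: Metrics) -> tuple[bool, list[str]]:
    """Return (healthy, problems) after comparing the canary to the baseline."""
    problems = []
    if canary.error_rate > baseline.error_rate + MAX_ERROR_RATE_INCREASE:
        problems.append("error_rate")
    for name in ("cpu_percent", "memory_percent", "p95_latency_ms"):
        limit = getattr(baseline, name) * (1 + MAX_RELATIVE_INCREASE)
        if getattr(canary, name) > limit:
            problems.append(name)
    return (not problems, problems)
```

Returning the list of failing metrics alongside the verdict gives the team a starting point when a canary is pulled.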

Decide how to transition from canary to full deployment

As discussed, canary deployments should only last on the order of several minutes to several hours. They are not intended to be overly long experiments. Because the timeframe is so short, your team should decide up front how many users or sessions you want in the canary and how you’re going to move to full deployment once your metrics hit positive benchmarks.

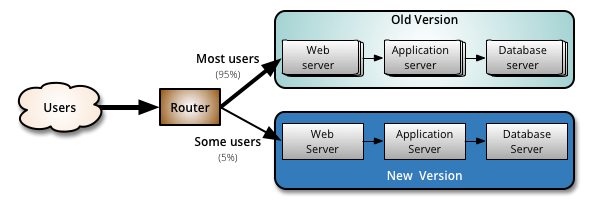

For example, you could go with a 5/95 random canary deployment. Configure a feature flag to move a random 5 percent of your users to the canary test while the remaining 95 percent stay on the stable production release. If you see positive results, remove the flag and deploy the feature completely.

Or you might want to take a more conservative approach. Another popular canary strategy is to deploy a canary test logarithmically, going from a 1 percent random sample to 10 percent to see how the new feature stands up to a larger load, then up to a full 100 percent.
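The staged approach can be sketched as a loop over percentages that stops and rolls back at the first unhealthy stage. The two callbacks are hypothetical hooks: one would update your flag or load balancer, the other would consult your monitoring.

```python
from typing import Callable, Iterable

ROLLOUT_STAGES = (1, 10, 100)  # percent of users at each stage


def run_staged_rollout(
    set_canary_percent: Callable[[int], None],
    stage_is_healthy: Callable[[int], bool],
    stages: Iterable[int] = ROLLOUT_STAGES,
) -> int:
    """Walk through the stages; return the final percentage (0 after a rollback)."""
    current = 0
    for percent in stages:
        set_canary_percent(percent)
        current = percent
        if not stage_is_healthy(percent):
            # Pull the feature for everyone as soon as a stage looks unhealthy.
            set_canary_percent(0)
            return 0
    return current
```

In practice, `stage_is_healthy` would wait for enough sessions to collect meaningful data before returning.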

Determine what infrastructure you need

Once your team is on the same page about the approach you’ll take, you’ll need to make sure you have all the proper infrastructure in place to make your canary deployment go off without a hitch.

You need a system for partitioning the user base and for monitoring performance. You can use a router or load balancer for the partitioning, but you can also do it right in your code with a feature flag. Feature flags are often more cost-effective and quick to set up, and they can be the more powerful solution.

Canary vs. blue/green deployments

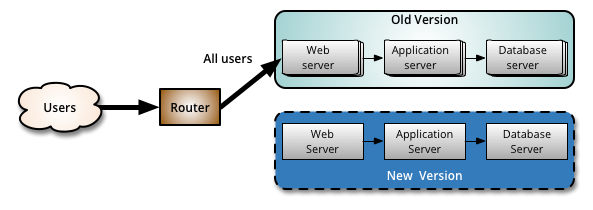

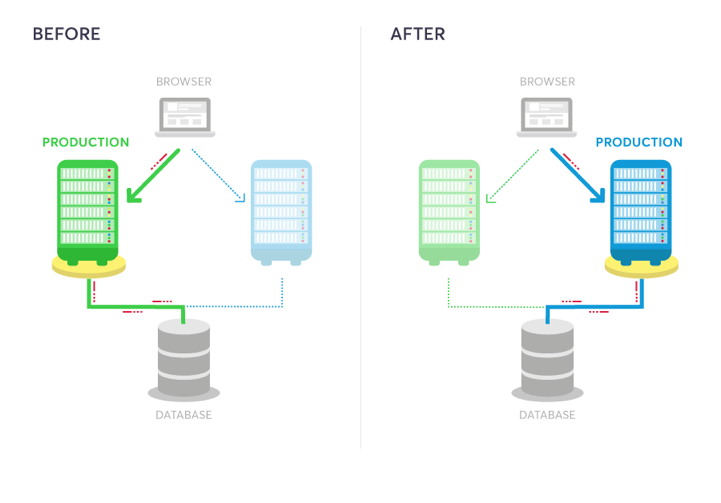

Canary deployments are also sometimes confused with blue/green deployments. Both can use parallel production environments, managed with a load balancer or feature flag, to mitigate the risk of software issues.

In a blue/green deployment, those environments start identical, but only one receives traffic (the blue server). Your team releases a new feature onto the hot backup environment (the green server). Then the router, feature flag, or however you’re managing traffic, gradually shifts new user sessions from blue to green until 100 percent of all traffic goes to green. Once the cutover is complete, the team updates the now-old blue server with the new feature, and then it becomes the hot backup environment.

The way the switchover is handled in these two strategies differs because of the desired outcome. Blue/green deployments are used to eliminate downtime. Canary deployments are used to test a new feature in a production environment with minimal risk and are much more targeted.

Use feature flags for better deployments

When you boil it right down, a feature flag is nothing more than an “if” statement from which users take different code paths at runtime depending on a condition or conditions you set. In a canary deployment, that condition is whether the user is in the canary group or not.
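In its barest form, that "if" statement might look like the sketch below. The user IDs and function names are made up for the example; a real flag would read its canary group from configuration rather than a hard-coded set.

```python
# Hypothetical canary group; normally loaded from a flag service or config.
CANARY_GROUP = {"user-17", "user-42"}


def current_feature(user_id: str) -> str:
    return f"current experience for {user_id}"


def new_feature(user_id: str) -> str:
    return f"new experience for {user_id}"


def handle_request(user_id: str) -> str:
    # The feature flag itself: one condition, two code paths.
    if user_id in CANARY_GROUP:
        return new_feature(user_id)
    return current_feature(user_id)
```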

Let’s say we’re running a fledgling social networking site for esports fans. Our DevOps team has been hard at work on a content recommender that gives users real-time recommendations based on livestreams they’re watching. The team has refined the recommendation feature to be significantly faster. It has performed well in internal testing, and now they want to see how it performs under real-world conditions.

The team doesn’t want to invest time and money into installing new physical infrastructure to conduct a canary deployment. Instead, the team decides to use a feature flag to expose the new recommendation engine to a random 5 percent sample of the user base.

The feature flag splits users into two groups with a simple modulo when users load a livestream. Within minutes, the team gets results back from a few thousand user sessions with the new code. It does, in fact, load faster and improve user engagement, but there is an unanticipated spike in CPU utilization on the production server. Ops staff realize the spike is about to degrade performance, so they kill the canary flag.

The team agrees not to proceed with rollout until they can debug why the new code caused the unexpected server CPU spike. Thanks to the real-world test results provided by the canary deployment, they have a pretty good idea of what is going on and get back to work.
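The kill switch in the scenario above could be sketched like this. The class, the CPU threshold, and the flag name are hypothetical, and the example uses a CRC32 checksum only as a simple stand-in for whatever bucketing a real flag system applies.

```python
import zlib

CPU_ALERT_PERCENT = 85.0  # hypothetical threshold for pulling the canary


class CanaryFlag:
    """A percentage flag with a kill switch."""

    def __init__(self, name: str, percent: int) -> None:
        self.name = name
        self.percent = percent
        self.enabled = True

    def kill(self) -> None:
        # After this call, every user takes the stable code path.
        self.enabled = False

    def is_on_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False
        bucket = zlib.crc32(f"{self.name}:{user_id}".encode("utf-8")) % 100
        return bucket < self.percent


def check_cpu_and_maybe_kill(flag: CanaryFlag, cpu_percent: float) -> None:
    """Kill the flag when production CPU utilization crosses the threshold."""
    if cpu_percent >= CPU_ALERT_PERCENT:
        flag.kill()
```

Because the flag is checked at runtime, killing it takes effect on the next request without a redeploy.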

Feature flags streamline and simplify canary deployments. They reduce the need for a second production environment. Feature flag management software like AB Tasty also allows sophisticated testing and analysis.