“Failure” can feel like a dirty word in the world of experimentation. Your team spends time thinking through a hypothesis, crafting a test, and finally when it rolls out … it falls flat. While it can feel daunting to see negative results from your a/b tests, you have gained valuable insights that can help you make data-driven, strategic decisions for your next experiment. Your “failure” becomes a learning opportunity.

Embracing the risk of negative results is a necessary part of building a culture of experimentation. On the first episode of the 1,000 Experiments Club podcast, Ronny Kohavi (formerly of Airbnb, Microsoft, and Amazon) shared that experimentation is a time when you will “fail fast and pivot fast.” As he learned while leading experimentation teams at some of the largest tech companies, your idea might fail. But your next idea could be the solution you were seeking.

“There’s a lot to learn from these experiments: Did it work very well for the segment you were going after, but it affected another one? Learning what happened and why will lead to developing future strategies and being successful,” shares Ronny.

In order to build a culture of experimentation, you need to embrace the failures that come with it. By viewing negative results as learning opportunities, you build trust within your team and encourage them to seek creative solutions rather than playing it safe. Here are just a few benefits to embracing “failures” in experimentation:

- Encourage curiosity: With AB Tasty, you can test your ideas quickly and easily. You can bypass lengthy implementations and complex coding. Every idea can be explored immediately and if it fails, you can get the next idea up and running without losing speed, saving you precious time and money.

- Reduce the risk of a blind rollout: Testing changes on a few pages or with a small audience helps you gather insights in a more controlled environment before planning larger-scale rollouts.

- Strengthen hypotheses: It’s easy to fall prey to confirmation bias when you are afraid of failure. When you test a hypothesis with a/b testing and receive negative results, you have evidence that your control is still your strongest performer, and data to support your next decision.

- Validate existing positive results: Experimentation helps determine what small changes can drive a big impact with your audience. Comparing negative a/b test results against positive results for similar experiments can help to determine if the positive metrics stand the test of time, or if an isolated event caused skewed results.

In a controlled, time-limited environment, your experiment can help you learn very quickly whether the changes you have made support your hypothesis. Whether your experiment produces positive or negative results, you will gain valuable insights about your audience. As long as you are leveraging those new insights to build new hypotheses, your negative results will never be a “failure.” Instead, the biggest risk would be allowing the status quo to go unchecked.

“Your ability to iterate quickly is a differentiation,” shares Ronny. “If you’re able to run more experiments and a certain percentage are pass/fail, this ability to try ideas is key.”

Below are some examples of real-world a/b tests and the crucial learnings that came from each experiment:

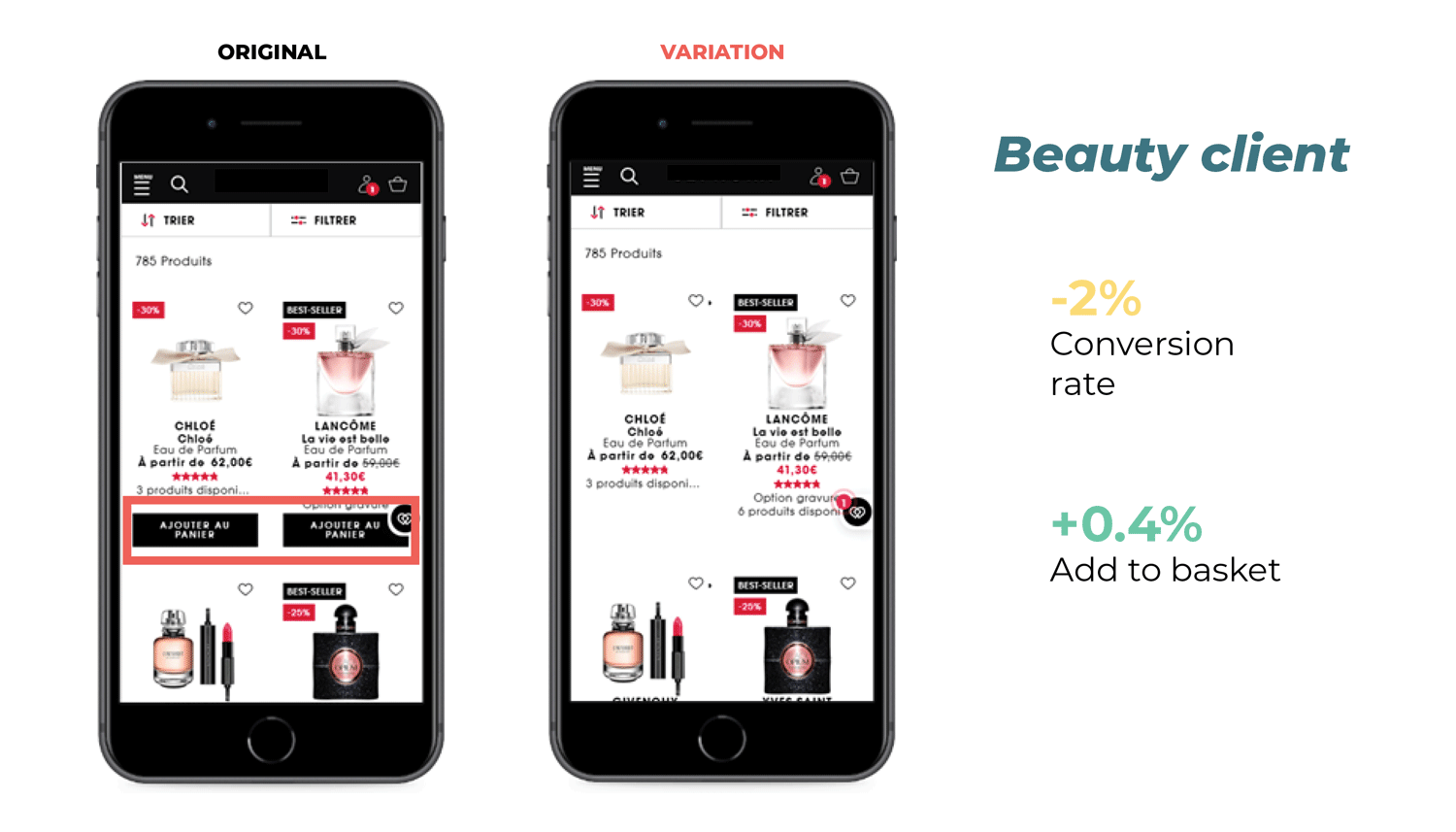

Lesson learned: Removing “Add to Basket” CTAs decreased conversion

In this experiment, our beauty/cosmetics client tested removing the “Add to Basket” CTA from their product pages. The idea was to see whether users would be more interested in clicking through to the individual pages, leading to a higher conversion rate. The results? While there was a 0.4% increase in visitors clicking “Add to Basket,” conversions were down by 2%. The team took this as proof that the original version of the website was working properly, and they were able to reinvest their time and effort into other projects.

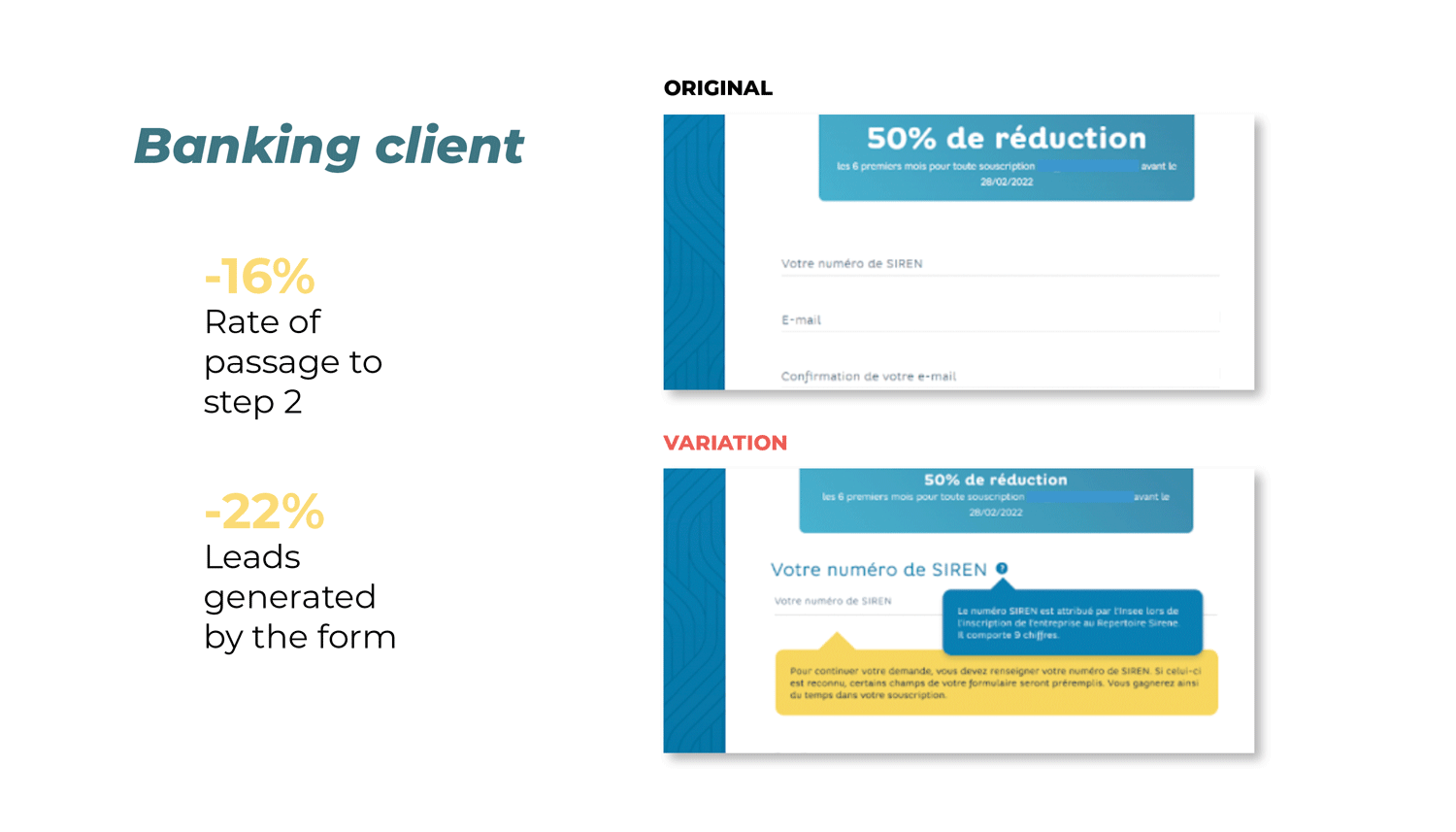

Lesson learned: Busy form fields led to decreased leads

A banking client wanted to test whether adjusting their standard request form would move more visitors on to step 2 and ultimately increase the number of leads from form submissions. The test focused on the mandatory business identification number field, adding a pop-up explaining what the field meant in the hopes of reducing form abandonment. The results? They saw a 22% decrease in leads as well as a 16% decrease in the number of visitors continuing to step 2 of the form. The team’s takeaway from this experiment was that, in trying to be helpful by explaining this field, they had overwhelmed their visitors with information. The original version was the winner of this experiment, and the team avoided a potentially large loss by not hardcoding the new form field.

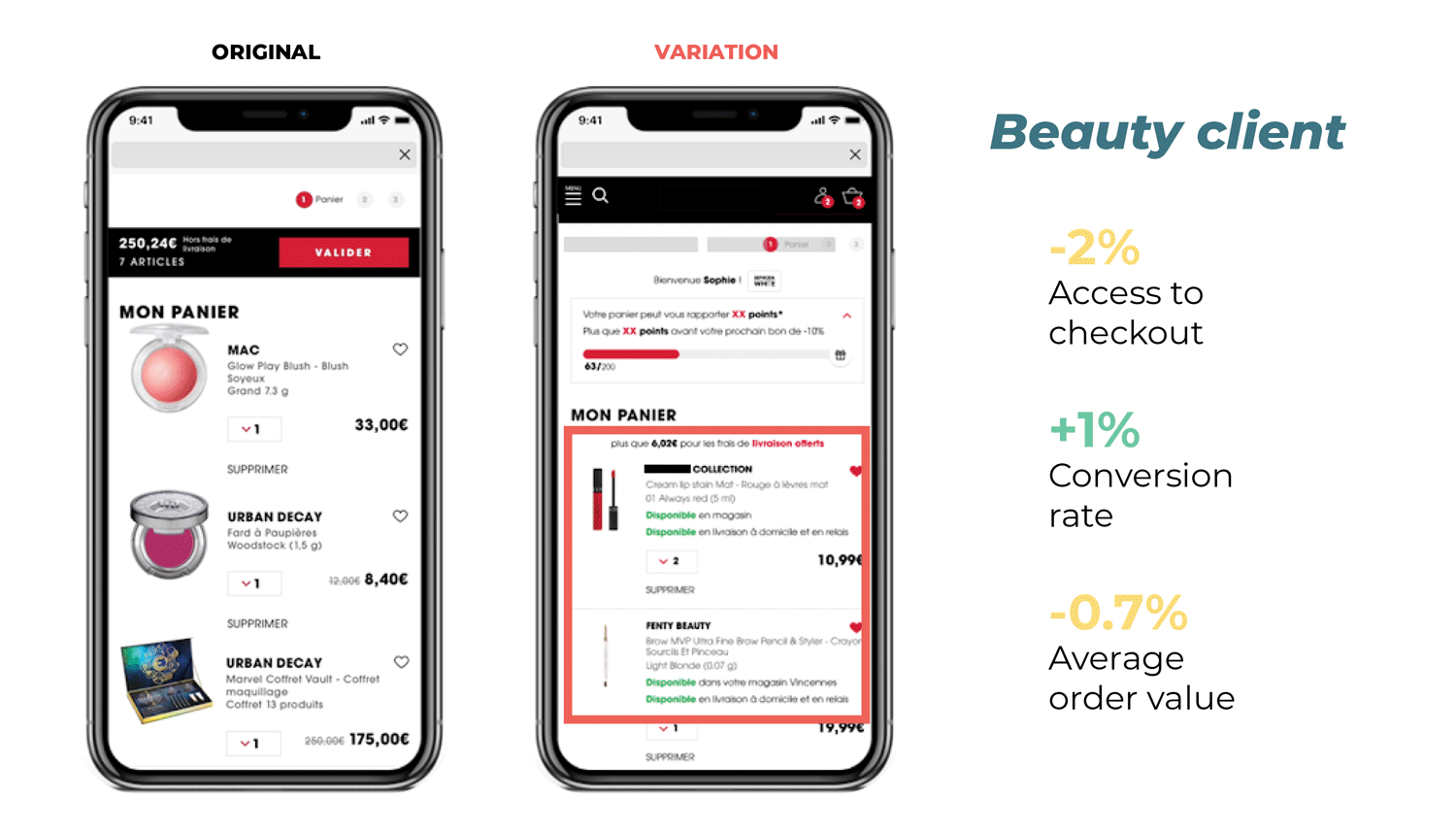

Lesson learned: Product availability couldn’t drive transactions

The team at this beauty company designed an experiment to test whether displaying a message about product availability on the basket page would lead to an increase in conversions by appealing to the customer’s sense of FOMO. Instead, the results proved inconclusive. The conversion rate increased by 1%, but access to checkout and the average order value decreased by 2% and 0.7% respectively. The team determined that without the desired increase in their key metrics, it was not worth investing the time and resources needed to implement the change on the website. Instead, they leveraged their experiment data to help drive their website optimization roadmap and identify other areas of improvement.
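To see why a small uplift like this can be inconclusive, here is a minimal sketch of a two-proportion z-test, one common way to check whether a difference in conversion rate is distinguishable from noise. The visitor and conversion counts are hypothetical, chosen only to produce a 1% relative uplift. They are not the client's data, and this is not necessarily the statistical method used in the experiment above.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare control A and variation B.

    Returns (relative_uplift, z_score, two_sided_p_value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal CDF, expressed with erf.
    normal_cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return (rate_b - rate_a) / rate_a, z, p_value


# Hypothetical traffic: 10,000 visitors per variation (assumed, not from the article).
uplift, z, p_value = two_proportion_z_test(500, 10_000, 505, 10_000)
print(f"relative uplift: {uplift:.1%}, z = {z:.2f}, p = {p_value:.2f}")
```

With these assumed numbers, the p-value lands far above the commonly used 0.05 threshold, so a 1% relative uplift at this traffic level could easily be noise. A real analysis would also look at the secondary metrics, as the team did here.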

Despite negative results, the teams in all three experiments leveraged these valuable insights to quickly readjust their strategy and identify other places for improvement on their website. By reframing the negative results of failed a/b tests into learning opportunities, the customer experience became their driver for innovation instead of untested ideas from an echo chamber.

Jeff Copetas, VP of E-Commerce & Digital at Avid, stresses the importance of figuring out who you are listening to when building out an experimentation roadmap. “[At Avid] we had to move from a mindset of ‘I think …’ to ‘let’s test and learn,’ by taking the albatross of opinions out of our decision-making process,” Jeff recalls. “You can make a pretty website, but if it doesn’t perform well and you’re not learning what drives conversion, then all you have is a pretty website that doesn’t perform.”

Through testing, you collect data on how customers experience your website, which is far more valuable than leaving the status quo untested. Are you seeking inspiration for your next experiment? We’ve gathered insights from 50 trusted brands around the world to understand the tests they’ve tried, the lessons they’ve learned, and the successes they’ve had.