A/B testing is often seen as the magic bullet for improving e-commerce performance. Many believe that small tweaks—like changing the color of a “Buy Now” button—will significantly boost conversion rates. However, A/B testing is much more complex.

Random changes without a well-thought-out plan often lead to neutral or even negative results, leaving you frustrated and wondering if your efforts were wasted.

Success in A/B testing doesn’t have to be defined solely by immediate KPI improvements. Instead, by shifting your focus from short-term gains to long-term learnings, you can turn every test into a powerful tool for driving sustained business growth.

This guest blog was written by Trevor Aneson, Vice President Customer Experience at 85Sixty.com, a leading digital agency specializing in data-driven marketing solutions, e-commerce optimization, and customer experience enhancement. In this blog, we’ll show you how to design A/B tests that consistently deliver value by uncovering the deeper insights that fuel continuous improvement.

Rethinking A/B Testing: It’s Not Just About the Outcome

Many people believe that an A/B test must directly improve core e-commerce KPIs like conversion rates, average order value (AOV), or revenue per visitor (RPV) to be considered successful. This is often due to a combination of several factors:

1. Businesses face pressure to show immediate, tangible results, which shifts the focus toward quick wins rather than deeper learnings.

2. Success is typically measured using straightforward metrics that are easy to quantify and communicate to stakeholders.

3. There is a widespread misunderstanding that A/B testing is a one-size-fits-all solution, which can lead to unrealistic expectations.

However, this focus on short-term wins limits the potential of your A/B testing program. When a test fails to improve KPIs, you might be tempted to write it off as a failure and abandon further experimentation. That mindset can prevent you from discovering valuable insights about your users that could drive meaningful, long-term growth.

A Shift in Perspective: Testing for Learnings, Not Just Outcomes

To maximize the success and value of your A/B tests, it’s essential to shift from an outcome-focused approach to a learning-focused one.

Think of A/B testing not just as a way to achieve immediate gains but as a tool for gathering insights that will fuel your business’s growth over the long term.

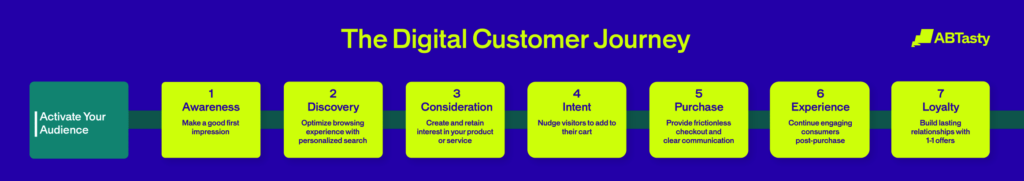

The real power of A/B testing lies in the insights you gather about user behavior — insights that can inform decisions across your entire customer journey, from marketing campaigns to product design. When you test for learnings, every result — whether it moves your KPIs or not — provides you with actionable data to refine future strategies.

Let’s take a closer look at how this shift can transform your testing approach.

Outcome-Based Testing vs. Learning-Based Testing: A Practical Example

Consider a simple A/B test aimed at increasing the click-through rate (CTR) of a red call-to-action (CTA) button on your website. Your analytics show that blue CTA buttons tend to perform better, so you decide to test a color change.

Outcome-Based Approach

Your hypothesis might look something like this: “If we change the CTA button color from red to blue, the CTR will increase because blue buttons typically receive more clicks.”

In this scenario, you’ll judge the success of the test based on two possible outcomes:

1. Success: The blue button improves CTR, and you implement the change.

2. Failure: The blue button doesn’t improve CTR, and you abandon the test.

While this approach might give you a short-term boost in performance, it leaves you without any understanding of why the blue button worked (or didn’t). Was it really the color, or was it something else — like contrast with the background or user preferences — that drove the change?

Learning-Based Approach

Now let’s reframe this test with a focus on learnings. Instead of testing just two colors, you could test multiple button colors (e.g., red, blue, green, yellow) while also considering other factors like contrast with the page background.

Your new hypothesis might be: “The visibility of the CTA button, influenced by its contrast with the background, affects the CTR. We hypothesize that buttons with higher contrast will perform better across the board.”

By broadening the test, you’re not only testing for an immediate outcome but also gathering insights into how users respond to various visual elements. Suppose that, after running the test, you find that buttons with higher contrast consistently perform better, regardless of color.

This insight can then be applied to other areas of your site, such as text visibility, image placement, or product page design.
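To make the contrast comparison concrete, here is a minimal sketch of how the results of such a multi-color test might be analyzed. It pools the variants into low-contrast and high-contrast groups and runs a standard two-proportion z-test on their click-through rates. The click and view counts, the contrast label assigned to each color, and the function names are all hypothetical and invented for illustration; they are not results from a real test. In practice, the contrast grouping should be decided before the test starts, not after looking at the data.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the CTR difference between groups A and B."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both groups share one CTR.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, invented purely for illustration (not real results).
# The contrast labels are assumptions; they depend on your page background.
variants = [
    {"color": "red", "contrast": "low", "clicks": 100, "views": 5000},
    {"color": "yellow", "contrast": "low", "clicks": 110, "views": 5000},
    {"color": "blue", "contrast": "high", "clicks": 140, "views": 5000},
    {"color": "green", "contrast": "high", "clicks": 150, "views": 5000},
]


def pool(rows, contrast):
    """Sum clicks and views over all variants with the given contrast label."""
    matching = [row for row in rows if row["contrast"] == contrast]
    return sum(r["clicks"] for r in matching), sum(r["views"] for r in matching)


low_clicks, low_views = pool(variants, "low")
high_clicks, high_views = pool(variants, "high")
z, p_value = two_proportion_z_test(low_clicks, low_views, high_clicks, high_views)

print(f"Low-contrast CTR:  {low_clicks / low_views:.2%}")
print(f"High-contrast CTR: {high_clicks / high_views:.2%}")
print(f"z = {z:.2f}, two-sided p-value = {p_value:.4f}")
```

Grouping by contrast rather than comparing individual colors is what turns the test into a question about why a button performs well, which is the learning you can carry to other parts of the site.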

Key Takeaway:

A learning-focused approach reveals deeper insights that can be leveraged far beyond the original test scenario. This shift turns every test into a stepping stone for future improvements.

How to Design Hypotheses That Deliver Valuable Learnings

Learning-focused A/B testing starts with designing better hypotheses. A well-crafted hypothesis doesn’t just predict an outcome—it seeks to understand the underlying reasons for user behavior and outlines how you’ll measure it.

Here’s how to design hypotheses that lead to more valuable insights:

1. Set Clear, Learning-Focused Goals

Rather than aiming only for KPI improvements, set objectives that prioritize learning. For example, instead of merely trying to increase conversions, focus on understanding which elements of the checkout process create friction for users.

By aligning your goals with broader business objectives, you ensure that every test contributes to long-term growth, not just immediate wins.

2. Craft Hypotheses That Explore User Behavior

A strong hypothesis is specific, measurable, and centered around understanding user behavior. Here’s a step-by-step guide to crafting one:

● Start with a Clear Objective: Define what you want to learn. For instance, “We want to understand which elements of the checkout process cause users to abandon their carts.”

● Identify the Variables: Determine the independent variable (what you change) and the dependent variable (what you measure). For example, the independent variable might be the number of form fields, while the dependent variable could be the checkout completion rate.

● Explain the Why: A learning-focused hypothesis should explore the “why” behind the user behavior. For example, “We hypothesize that removing fields with radio buttons will increase conversions because users find these fields confusing.”
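One way to keep these three parts together is to record each hypothesis in a small, structured template before the test launches. The sketch below is only an illustration: the `Hypothesis` class and its field names are hypothetical, not part of any testing tool, and the example values are taken from the checkout scenario above. It assumes Python 3.9 or later.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    """A hypothetical template for a learning-focused test hypothesis."""
    objective: str             # what you want to learn
    independent_variable: str  # what you change
    dependent_variable: str    # what you measure
    rationale: str             # the "why" behind the expected behavior
    secondary_metrics: list[str] = field(default_factory=list)

    def statement(self) -> str:
        """Render the hypothesis as a single sentence for sharing with the team."""
        return (
            f"If we change {self.independent_variable}, "
            f"{self.dependent_variable} will improve because {self.rationale}."
        )


checkout_hypothesis = Hypothesis(
    objective="Understand which checkout elements cause cart abandonment",
    independent_variable="the form by removing fields with radio buttons",
    dependent_variable="the checkout completion rate",
    rationale="users find these fields confusing",
    secondary_metrics=["time on page", "bounce rate"],
)

print(checkout_hypothesis.statement())
```

Writing the rationale down as its own field makes it harder to launch a test that predicts an outcome without saying why.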

3. Design Methodologies That Capture Deeper Insights

A robust methodology is crucial for gathering reliable data and drawing meaningful conclusions. Here’s how to structure your tests:

● Consider Multiple Variations: Testing multiple variations allows you to uncover broader insights. For instance, testing different combinations of form fields, layouts, or input types helps identify patterns in user behavior.

● Ensure Sufficient Sample Size & Duration: Use a tool such as an A/B test sample size calculator to determine how many visitors each variation needs before you can detect the effect you care about with statistical confidence. Run your test long enough to gather meaningful data, and avoid cutting it short based on preliminary results.

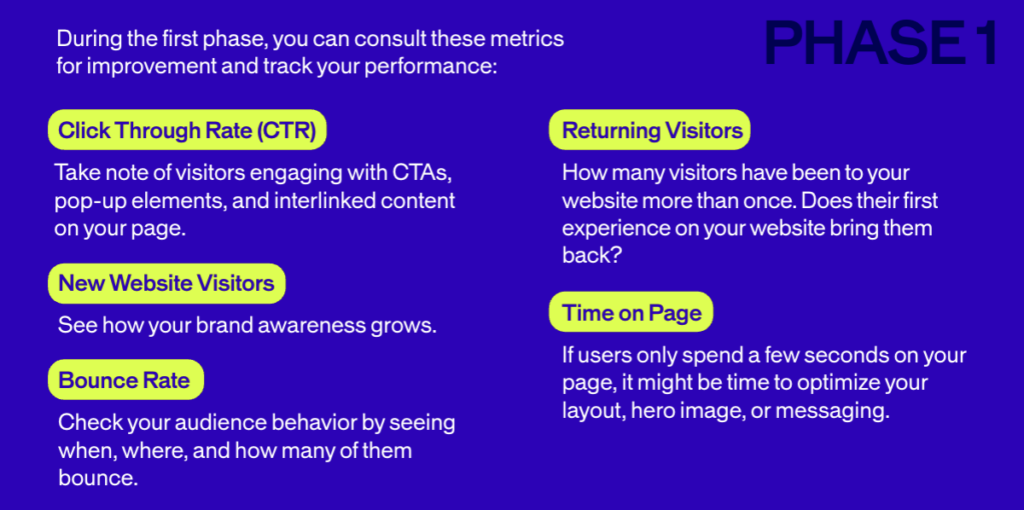

● Track Secondary Metrics: Go beyond your primary KPIs. Measure secondary metrics, such as time on page, engagement, or bounce rates, to gain a fuller understanding of how users interact with your site.
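For readers who want to see what a sample size calculator does under the hood, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. It is a rough planning aid under stated assumptions, not a replacement for your testing platform's calculator. The function names, the default significance level of 0.05, the default power of 0.8, and the example traffic figures are assumptions chosen for illustration. When several variations are each compared against the control, the sketch applies a Bonferroni correction, which is one conservative way to handle multiple comparisons.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_lift,
                            alpha=0.05, power=0.8, comparisons=1):
    """Approximate visitors needed per variation for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%.
    min_detectable_lift: smallest relative lift worth detecting, e.g. 0.20 for +20%.
    comparisons: number of variations compared against the control
                 (alpha is divided by this value, a Bonferroni correction).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - (alpha / comparisons) / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(n_per_variant, num_variants, daily_visitors):
    """Estimate test duration, assuming traffic is split evenly across variations."""
    return ceil(n_per_variant * num_variants / daily_visitors)


# Hypothetical scenario: 3% baseline conversion, +20% relative lift,
# a control plus three variations, and 4,000 eligible visitors per day.
n = sample_size_per_variant(0.03, 0.20, comparisons=3)
print(f"Visitors needed per variation: {n}")
print(f"Estimated duration: {days_to_run(n, 4, 4000)} days")
```

Fixing the sample size and duration before launch is what makes it possible to resist stopping early on preliminary results. Many teams also round the duration up to whole weeks so that weekday and weekend behavior are both represented.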

4. Apply Learnings Across the Customer Journey

Once you’ve gathered insights from your tests, it’s time to apply them across your entire customer journey. This is where learning-focused testing truly shines: the insights you gain can inform decisions across all touchpoints, from marketing to product development.

For example, if your tests reveal that users struggle with radio buttons during checkout, you can apply this insight to other forms across your site, such as email sign-ups, surveys, or account creation pages. By applying your learnings broadly, you unlock opportunities to optimize every aspect of the user experience.

5. Establish a Feedback Loop

Establish a feedback loop to ensure that these insights continuously inform your business strategy. Share your findings with cross-functional teams and regularly review how these insights can influence broader business objectives. This approach fosters a culture of experimentation and continuous improvement, where every department benefits from the insights gained through testing.

Conclusion: Every Test is a Win

When you shift your focus from short-term outcomes to long-term learnings, you transform your A/B testing program into a powerful engine for growth. Every test, whether it results in immediate KPI gains or not, offers valuable insights that drive future strategy and improvement.

With AB Tasty’s platform, you can unlock the full potential of learning-focused testing. Our tools enable you to design tests that consistently deliver value, helping your business move toward sustainable, long-term success.

Ready to get started? Explore how AB Tasty’s tools can help you unlock the full potential of your A/B testing efforts. Embrace the power of learning, and you’ll find that every test is a win for your business.