Our comprehensive guide is here to provide you with expert insights to help you optimize your website’s performance and enhance user experiences.

What is A/B Testing?

A/B testing, also known as split testing, is a marketing technique that involves comparing two versions of a web page or application to see which performs better. These variations, known as A and B, are presented randomly to users. A portion of them will be directed to the first version, and the rest to the second. A statistical analysis of the results then determines which version, A or B, performed better, according to certain predefined indicators such as conversion rate.

In other words, you can verify which version gets the most clicks, subscriptions, purchases, and so on. These results can then help you optimize your website for conversions.
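
To make the random split concrete, here is a minimal sketch of one common way to assign visitors to A or B. It assumes each visitor has a stable identifier (for example a cookie value); the function name `assign_variation`, the experiment name, and the 50/50 default are illustrative assumptions, not a description of how any particular testing tool works.

```python
import hashlib


def assign_variation(visitor_id: str, experiment: str, split: float = 0.5) -> str:
    """Return 'A' or 'B' for a visitor; the same visitor always gets the same answer."""
    # Hash the experiment name together with the visitor ID so that
    # different experiments split the same audience independently.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    for visitor in ("visitor-1", "visitor-2", "visitor-3"):
        print(visitor, assign_variation(visitor, "homepage-cta"))
```

Hashing rather than drawing a fresh random number on every visit keeps a returning visitor on the same version, which is usually what you want when measuring conversions over several sessions.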

A/B testing examples

Many of you may be looking for ideas for your next A/B tests. Although the possibilities for A/B testing on your website are endless, sometimes a little inspiration from a success story can go a long way.

Here are some links to a few examples of A/B tests and results:

- 5 A/B Test Case Studies and What You Can Learn From Them?

- AB Tasty Case Studies Library

- 100 Conversion Optimization Case Studies

- 50 A/B Split Test Conversion Optimization Case Studies

What types of websites are relevant for A/B testing?

Any website can benefit from A/B testing, since every site has a ‘reason for being’ and that reason is quantifiable. Whether you’re an online store, a news site, or a lead generation site, A/B testing can help in various ways. Whether you’re aiming to improve your ROI, reduce your bounce rate, or increase your conversions, A/B testing is a relevant and important marketing technique.

Lead

The term “lead” refers to a prospective client when we’re talking about sales. E-mail marketing is well suited to nurturing leads: sending more content, keeping the conversation going, suggesting products, and ultimately boosting your sales. By A/B testing e-mails, your brand should start to identify trends and common factors that lead to higher open and click-through rates.

Media

In a media context, it’s more relevant to talk about “editorial A/B testing”. In industries that work closely with the press, the idea behind A/B testing is to test the success of a given content category, for example to see whether it is a good fit with the target audience. Here, as opposed to the lead example above, A/B testing has an editorial function, not a sales one. A/B testing content headlines is a common practice in the media industry.

E-commerce

Unsurprisingly, the aim of using A/B testing in an e-commerce context is to measure how well a website or online commercial app is selling its merchandise. A/B testing uses the number of completed sales to determine which version performs best. It’s particularly important to look at the home page and the design of the product pages, but it’s also a good idea to consider all the visual elements involved in completing a purchase (buttons, calls-to-action).

What A/B tests should you use?

Classic A/B test: The classic A/B test presents users with two or more variations of a page at the same URL. That way, you can compare several variations of the same element.

Split tests or redirect tests: The split test redirects your traffic toward one or several distinct URLs. If you are hosting new pages on your server, this could be an effective approach.

Multivariate or MVT test: Lastly, multivariate testing measures the impact of multiple changes on the same web page. For example, you can modify your banner, the color of your text, your presentation, and more.
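
As a rough illustration of why multivariate tests grow quickly, the sketch below enumerates every combination of a few changed elements. The element names and values are hypothetical examples chosen for illustration.

```python
from itertools import product

# Hypothetical elements to vary on one page, each with its candidate values.
elements = {
    "banner": ["original", "seasonal"],
    "text_color": ["black", "blue"],
    "layout": ["one_column", "two_columns"],
}


def mvt_combinations(elements: dict) -> list:
    """Return one dict per combination of element values (full factorial)."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


if __name__ == "__main__":
    combos = mvt_combinations(elements)
    print(len(combos))  # 2 x 2 x 2 = 8 combinations
    for combo in combos:
        print(combo)
```

Three elements with two values each already produce eight versions, and each of them needs its own share of traffic.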

In terms of A/B testing technology, you can:

A/B test on websites

By A/B testing on the web, you can compare two versions of your page. Afterward, the results are analyzed according to predefined objectives (clicks, purchases, subscriptions, etc.).

A/B test native mobile apps

A/B testing in apps is complex because you can’t show two versions after download. Yet, quick updates allow easy design changes and direct impact analysis.

Server-side A/B test via APIs

An API is a programming interface for connecting with applications to exchange data, allowing automatic campaign creation or variation from stored data.

A/B testing and conversion optimization

Conversion optimization and A/B testing are two ways for companies to increase profits. Their promise is a simple one: generate more revenue with the same amount of traffic. In light of high acquisition costs and complex traffic sources, why not start by getting the most out of your current traffic?

Surprisingly, average conversion rates for e-commerce sites continue to hover between 1% and 3%. Why? Because conversion is a complex mechanism that depends on a number of factors. These include the quality of traffic generated, user experience, offer quality, the website’s reputation, and what the competition is doing. E-commerce professionals will naturally aim to minimize any negative impact the interplay of these elements might have on consumers along the buyer journey.

A variety of methods exist to help them achieve this, including A/B testing, a discipline that uses data to help you make the best decisions. A/B testing is useful to establish a broader conversion optimization strategy, but it is by no means sufficient all on its own. An A/B testing solution lets you statistically validate certain hypotheses, but alone, it cannot give you a sophisticated understanding of user behavior.

However, understanding user behavior is certainly key to understanding problems with conversion. Therefore, it’s essential to enrich A/B testing with information provided by other means. This will allow you to gain a fuller understanding of your users, and crucially, help you come up with hypotheses to test. There are many sources of information you can use to gain this fuller picture:

- Web analytics data. Although this data does not explain user behavior, it may bring conversion problems to the fore (e.g. identifying shopping cart abandonment). It can also help you decide which pages to test first.

- Ergonomics evaluation. These analyses make it possible to inexpensively understand how a user experiences your website.

- User test. Though limited by sample size constraints, user testing can provide a myriad of information not otherwise available using quantitative methods.

- Heatmap and session recording. These methods offer visibility on the way that users interact with elements on a page or between pages.

- Client feedback. Companies collect large amounts of feedback from their clients (e.g. opinions listed on the site, questions for customer service). Their analysis can be completed by customer satisfaction surveys or live chats.

How to find A/B test ideas?

Your A/B tests must be complemented by additional information to identify conversion problems and offer an understanding of user behavior. This analysis phase is critical and must help you to create “strong” hypotheses. The disciplines mentioned above will help. A correctly formulated hypothesis is the first step towards a successful A/B testing program and must respect the following rules. Hypotheses must:

- be linked to a clearly discerned problem that has identifiable causes

- mention a possible solution to the problem

- indicate the expected result, which is directly linked to the KPI to be measured

For example, if the identified problem is a high abandonment rate for a registration form that seems like it could be too long, a hypothesis might be: “Shortening the form by deleting optional fields will increase the number of contacts collected.”

What should you A/B test on your website?

What should you test on your site? This question comes up again and again because companies often don’t know how to explain their conversion rates, whether good or bad. If a company could be sure that their users were having trouble understanding their product, they wouldn’t bother testing the location or color of an add-to-cart button – this would be off-topic.

Instead, they would test various wordings of their customer benefits. Every situation is different. Rather than providing an exhaustive list of elements to test, we prefer to give you an A/B testing framework to identify these elements.

Below are some good places to start:

1. Titles and Headers

You can start by changing the title or content of your articles so that they draw people in. Regarding form, a change of color or font can also make a difference.

2. Call to Action

The call to action is a very important button. Color, copy, position, and type of words used (buy, add to cart, order, etc.) can have a decisive impact on your conversion rate.

3. Forms

It’s important to create a clear and concise form. You can try modifying a field title, removing optional fields, changing field placement, formatting using lines or columns, etc.

4. Navigation

You can test different page connections by offering multiple conversion options. For example, you can combine or separate payment and delivery information.

5. Landing Pages

Lead generation landing pages are vital for prompting user action. Split testing compares different page versions, assessing varied layouts or designs.

6. Images

Images are just as important as text. Play with the size, aesthetic, and location of your photos to see what resonates best with your audiences.

7. Page Structure

The structure of your pages, whether home page or category pages, should be particularly well-crafted. You can add a carousel, choose fixed images, change your banners, etc.

8. Algorithms

Use different recommendation algorithms to turn your visitors into customers or increase their cart value: similar articles, most-searched products, or products they’ll love.

9. Pricing

A/B testing on pricing can be delicate, because you cannot show different visitors different prices for the same product or service. You’ll need a little ingenuity to test how pricing affects your conversion rate.

10. Business Model

Think over your action plan to generate additional profits. For example, if you’re selling target merchandise, why not diversify by offering additional products or complementary services?

If you want more concrete ideas based on your unique users’ journeys on your website, be sure to check out our digital customer journey e-book and use case booklet to inspire you with A/B test success stories.

Tips and best practices for A/B testing

Below are several best practices that can help you avoid running into trouble. They are the result of the experiences, both good and bad, of our clients during their testing activity.

Ensure the data reliability for the A/B testing solution

Conduct at least one A/A test to ensure a random assignment of traffic to different versions. This is also an opportunity to compare the A/B testing solution indicators and those of your web analytics platform. This is done to verify that figures are in the ballpark, not to make them correspond exactly.
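
One way to check the traffic split during an A/A test is a chi-square goodness-of-fit test on the visitor counts, sketched below. This is a minimal sketch under the assumption of an intended 50/50 split; the function name and the example counts are illustrative, and your testing solution may run a different check.

```python
import math


def sample_ratio_p_value(visitors_a: int, visitors_b: int,
                         expected_share_a: float = 0.5) -> float:
    """p-value of a chi-square goodness-of-fit test (1 degree of freedom).

    A very small p-value suggests the observed split differs from the
    intended one by more than chance alone would explain.
    """
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    print(sample_ratio_p_value(5000, 5050))  # small imbalance
    print(sample_ratio_p_value(5000, 5500))  # large imbalance
```

A small imbalance is expected from chance alone; a very low p-value is a signal to investigate the setup before trusting any later A/B result.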

Conduct an acceptance test before starting

Do some results seem counter-intuitive? Was the test set up correctly and were the objectives correctly defined? In many cases, time dedicated to acceptance testing saves precious time which would be spent interpreting false results.

Test one variable at a time

This makes it possible to precisely isolate the impact of the variable. If the location of an action button and its label are modified simultaneously, it’s impossible to identify which change produced the observed impact.

Run only one test at a time

For the same reasons cited above, it’s advisable to conduct only one test at a time. The results will be difficult to interpret if two tests are conducted in parallel, especially if they’re on the same page.

Adapt the number of variations to the volume

If there’s a high number of variations for little traffic, the test will last a very long time before giving any interesting results. The lower the traffic allocated to the test, the fewer versions there should be.
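
A back-of-the-envelope estimate shows how the number of versions stretches a test. The sketch below assumes traffic is split evenly and uses the 5,000 visitors per version suggested elsewhere in this guide as the target; the function name and the daily traffic figure are illustrative assumptions.

```python
import math


def estimated_days(daily_visitors: int, versions: int,
                   visitors_per_version: int = 5000) -> int:
    """Days needed for every version to reach the visitor target,
    assuming traffic is split evenly between versions."""
    return math.ceil(versions * visitors_per_version / daily_visitors)


if __name__ == "__main__":
    print(estimated_days(1000, 2))  # 10 days with two versions
    print(estimated_days(1000, 5))  # 25 days with five versions
```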

Wait to have statistical reliability before acting

As long as the test has not attained a statistical reliability of at least 95%, it’s not advisable to make any decisions. Otherwise, the probability that the observed differences are due to chance rather than to the modifications made is too high.

Let tests run long enough

Even if a test rapidly displays statistical reliability, it’s necessary to take into account the size of the sample and differences in behavior linked to the day of the week. It’s advisable to let a test run for at least a week (two ideally) and to have recorded at least 5,000 visitors and 75 conversions per version.
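
These rules of thumb can be turned into a simple readiness check. The sketch below is only an illustration: the function name and the data layout (a dict of per-version counts) are assumptions, and the thresholds are the ones quoted above.

```python
from datetime import date


def ready_to_read(start: date, today: date, versions: dict,
                  min_days: int = 7, min_visitors: int = 5000,
                  min_conversions: int = 75) -> bool:
    """True only if the test has run long enough and every version
    has reached the visitor and conversion minimums."""
    if (today - start).days < min_days:
        return False
    return all(
        v["visitors"] >= min_visitors and v["conversions"] >= min_conversions
        for v in versions.values()
    )


if __name__ == "__main__":
    results = {
        "A": {"visitors": 5200, "conversions": 80},
        "B": {"visitors": 5100, "conversions": 90},
    }
    print(ready_to_read(date(2024, 1, 1), date(2024, 1, 8), results))
```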

Know when to end a test

If a test takes too long to reach a reliability rate of 95%, it’s likely that the element tested doesn’t have any impact on the measured indicator. In this case, it’s pointless to continue the test, since this would unnecessarily monopolize a part of the traffic that could be used for another test.

Measure multiple indicators

It’s recommended to measure multiple objectives during the test: one primary objective to help you decide between versions, and secondary objectives to enrich the analysis of results. These indicators can include click rate, add-to-cart rate, conversion rate, average cart, and others.
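
As an illustration, the indicators listed above can all be derived from a handful of raw counts per version. The field names and the definitions used here (each rate computed per visitor, average cart as revenue per order) are assumptions for this sketch; check how your own tools define them.

```python
from dataclasses import dataclass


@dataclass
class VariationStats:
    visitors: int
    clicks: int
    cart_additions: int
    orders: int
    revenue: float


def indicators(stats: VariationStats) -> dict:
    """Compute per-visitor rates and the average cart for one version."""
    return {
        "click_rate": stats.clicks / stats.visitors,
        "add_to_cart_rate": stats.cart_additions / stats.visitors,
        "conversion_rate": stats.orders / stats.visitors,
        # Avoid dividing by zero when a version has no orders yet.
        "average_cart": stats.revenue / stats.orders if stats.orders else 0.0,
    }


if __name__ == "__main__":
    version_b = VariationStats(visitors=5000, clicks=1000, cart_additions=400,
                               orders=100, revenue=6500.0)
    print(indicators(version_b))
```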

Take note of marketing actions during a test

External variables can skew the results of a test. Traffic acquisition campaigns often attract a population of users with unusual behavior. It’s preferable to limit these side effects by keeping track of any campaigns or other tests running at the same time.

Segment tests

In some cases, conducting a test on all of a site’s users is nonsensical. If a test aims to measure the impact of different formulations of customer advantages on a site’s registration rate, showing it to users who are already registered is ineffective. The test should instead target new visitors.

Choosing an A/B testing software

Choosing the best A/B testing tool is difficult.

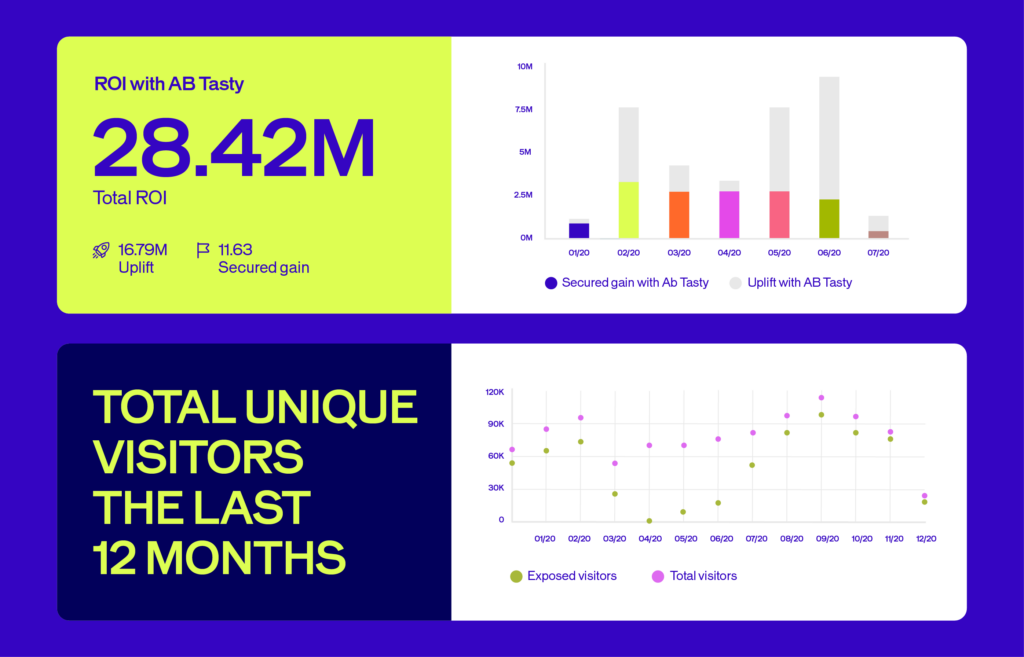

We can only recommend you use AB Tasty. In addition to offering a full A/B testing solution, AB Tasty offers a suite of software to optimize your conversions. You can also personalize your website in terms of numerous targeting criteria and audience segmentation.

But, in order to be exhaustive, and also to provide you with as much valuable information as possible when it comes to choosing a vendor, here are a few articles to help you choose your A/B testing tool with software reviews:

- The 20 Most Recommended AB Testing Tools By Leading CRO Experts

- Which A/B testing tools or multivariate testing software should you use for split testing?

- 18 Top A/B Testing Tools Reviewed by CRO Experts

Understanding A/B testing statistics

The test analysis phase is the most sensitive. The A/B testing solution must at least offer a reporting interface indicating, for each variation, the conversions recorded, the conversion rate, the percentage of improvement compared with the original, and the statistical reliability index. The most advanced ones let you drill down into the raw data, segmenting results by dimension (e.g. traffic source, geographical location of visitors, customer typology, etc.).

Before it is possible to analyze test results, the main difficulty involves obtaining a sufficient level of statistical confidence. A threshold of 95% is generally adopted. This means that the probability that result differences between variations are due to chance is very low. The time necessary to reach this threshold varies considerably according to site traffic for tested pages, the initial conversion rate for the measured objective, and the impact of modifications made. It can go from a few days to several weeks. For low-traffic sites, it is advisable to test a page with higher traffic. Before the threshold is reached, it is pointless to draw any conclusions.

Furthermore, the statistical tests used to calculate the confidence level (such as the chi-square test) are asymptotic: they assume a sample size approaching infinity. Should the sample size be low, exercise caution when analyzing the results, even if the test indicates a reliability of more than 95%.
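
For readers who want to see the calculation, here is a minimal sketch of the chi-square test on a 2x2 table of conversions, without continuity correction. Reading "reliability" as one minus the p-value is an assumption made for illustration (vendors differ in how they define the index), and the function name and the example counts are hypothetical. The function also returns the smallest expected cell count, since a common rule of thumb treats the test as unreliable when that count is below about 5.

```python
import math


def chi_square_2x2(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pearson chi-square test comparing two conversion rates.

    Returns (chi2, p_value, smallest_expected_count).
    """
    observed = [[conv_a, n_a - conv_a], [conv_b, n_b - conv_b]]
    row_totals = [n_a, n_b]
    col_totals = [conv_a + conv_b, n_a + n_b - conv_a - conv_b]
    total = n_a + n_b
    if 0 in col_totals:
        # Nobody (or everybody) converted: no difference can be detected.
        return 0.0, 1.0, 0.0
    chi2 = 0.0
    min_expected = float("inf")
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            min_expected = min(min_expected, expected)
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, min_expected


if __name__ == "__main__":
    chi2, p_value, min_expected = chi_square_2x2(100, 5000, 130, 5000)
    print(f"chi2={chi2:.2f}  reliability={(1 - p_value):.1%}  "
          f"smallest expected count={min_expected:.0f}")
```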

With a low sample size, it is possible that leaving the test active for a few more days will greatly modify the results. This is why it is advisable to have a sufficiently sized sample. There are scientific methods to calculate the size of this sample, but from a practical standpoint, it is advisable to have a sample of at least 5,000 visitors and 75 conversions recorded per variation.
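
One such method is the standard sample size formula for comparing two proportions, sketched below. The 80% statistical power is an assumption not stated in this guide (it is a common default), and the function name, baseline rate, and expected lift are illustrative.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate visitors needed per variation to detect a relative lift
    in conversion rate with a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Example: 2% baseline conversion rate, hoping to detect a 20% relative lift.
    print(visitors_per_variation(0.02, 0.20))
```

With low baseline rates and modest lifts, the result is typically well above the practical minimum quoted above, which is one reason to treat that minimum as a floor rather than a target.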

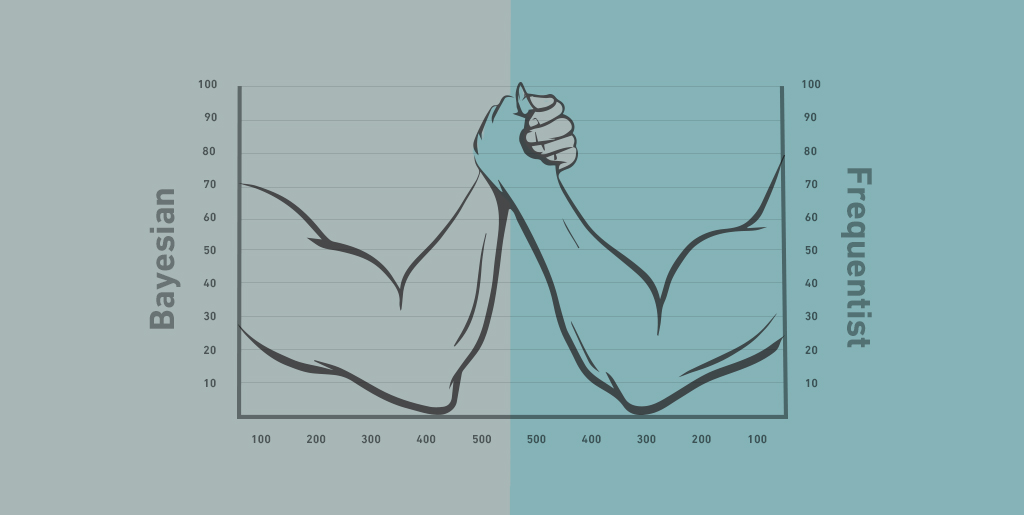

There are two types of statistical tests:

- Frequentist tests. The chi-square method, or Frequentist method, is objective. It allows for analysis of results only at the end of your test. The study is thus based on observation, with a reliability of 95%.

- Bayesian tests. The Bayesian method is deductive. By taking from the laws of probability, it lets you analyze results before the end of the test. Be sure, however, to correctly read the confidence interval. Check out our dedicated article to see all there is to know about the advantages of Bayesian statistics for A/B testing.
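
To give a feel for the Bayesian approach, the sketch below estimates the probability that version B has a higher conversion rate than version A. It assumes a uniform Beta(1, 1) prior on each rate and uses Monte Carlo sampling; these choices, the function name, and the example counts are assumptions for illustration, not a description of any vendor's model.

```python
import random


def probability_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                          draws: int = 20000, seed: int = 0) -> float:
    """Estimate P(rate_B > rate_A) by sampling from Beta posteriors."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each rate: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    print(probability_b_beats_a(100, 5000, 130, 5000))
```

The output is a probability that can be read at any point during the test, but it should be interpreted alongside the interval around each rate rather than on its own.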

Lastly, even if site traffic makes it possible to quickly obtain a sufficiently sized sample, it is recommended that you leave the test active for several days to take into account differences in behavior observed by weekday, or even by time of day. A minimum duration of one week is preferable, ideally two weeks. In some cases, this period can even be longer, particularly if the conversion concerns products for which the buying cycle requires time (complex products/services or B2B). As such, there is no standard duration for a test.

Other forms of A/B testing

A/B testing is not limited to modifications to your site’s pages. You can apply the concept to all your marketing activities, such as traffic acquisition via e-mail marketing campaigns, AdWords campaigns, Facebook Ads, and much more.

Resources for going further with A/B testing:

- A Beginner’s Guide to A/B Testing Your Email Campaigns

- 7 Tips for Implementing A/B Testing in Your Social Media Campaigns

- A Crash Course on A/B Testing Facebook Ad Campaigns

- How to Improve Your Click Rates with AdWords A/B Testing

The best resources on A/B testing and CRO

We recommend you read our very own blog on A/B testing, but other experts in international optimization also publish very pertinent articles on the subject of A/B testing and conversion more generally. Here is our selection to stay up to date with the world of CRO.

Blogs to bookmark: