Note: This article was written by Hubert Wassner, Chief Data Scientist at AB Tasty.

Some of you may have noticed Google’s recent release of a free version of Google Optimize and wondered whether it will change the market for SaaS A/B testing tools such as AB Tasty.

Well, history tells us that when Google enters a market, the effects are often disruptive, especially when the tool is free, as with Google Analytics or Google Tag Manager. To be clear, this new offer will be a free version of Google Optimize, with the premium version starting at around $150,000 per year. Also, note that neither the free nor the paid-for version of Google Optimize offers multi-page testing (i.e. test consistency across a funnel, for example) and that Google Optimize is not compatible with native applications.

Before going any further, a disclaimer: I’m the chief data scientist at AB Tasty, the leading European solution for A/B testing and personalization and, therefore, in direct competition with Google Optimize. Nevertheless, I’ll do my best to be fair in the following comparison. I’m not going to list and compare all the features offered by the two tools. Rather, I’d like to focus on the data side of things. I’m a data scientist, after all!

Let’s dig into it:

To me, Google Optimize’s first and main limitation is that it is built on Google Analytics’ infrastructure and therefore does not take unique visitors into account. Google looks at sessions. By default, a session times out after 30 minutes, and this can be extended to 4 hours at most. This means that if a visitor comes to a website twice, a day apart, or once in the morning and again in the evening, Google Analytics will log two separate sessions and treat them as two different visitors.

This way of counting has two immediate consequences:

- Conversion rates are much lower than they should be. This is perhaps a little annoying, but we can deal with it.

- Gains are much more difficult to measure. Now, this is a real issue!

Let’s have a closer look…

Conversion rates are much lower

People will normally visit a website several times before converting. For one conversion, Google Analytics (and by extension Google Optimize) records several different sessions. Only the visit during which the visitor converted is recorded as a ‘success’. All the others are considered ‘failures’. Consequently, the success rate is lower as the denominator grows. For Google, conversion rate is based on visits instead of visitors.
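To make the difference between the two denominators concrete, here is a minimal sketch in Python. The session log is made up for illustration (the visitor IDs and outcomes are hypothetical, not real data), and the two helper functions are simply named for what they compute.

```python
# Hypothetical session log: one (visitor_id, converted) pair per session.
sessions = [
    ("a", False), ("a", False), ("a", True),   # converts on the third visit
    ("b", False),
    ("c", False), ("c", True),                 # converts on the second visit
    ("d", False), ("d", False),
]

def session_conversion_rate(log):
    """Converting sessions divided by all sessions (the session-based view)."""
    return sum(converted for _, converted in log) / len(log)

def visitor_conversion_rate(log):
    """Visitors with at least one converting session divided by unique visitors."""
    visitors = {visitor for visitor, _ in log}
    converters = {visitor for visitor, converted in log if converted}
    return len(converters) / len(visitors)

session_rate = session_conversion_rate(sessions)
visitor_rate = visitor_conversion_rate(sessions)
print(f"per session: {session_rate:.2%}, per visitor: {visitor_rate:.2%}")
# per session: 25.00%, per visitor: 50.00%
```

The same two conversions yield half the rate once every non-converting visit counts as a failure.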

You can put up with this limitation if you make decisions based on relative values instead of absolute values. After all, the objective of testing is first and foremost to gauge the difference, whatever the exact value. The Bayesian statistical model used by Google Optimize (and AB Tasty) does this very well.

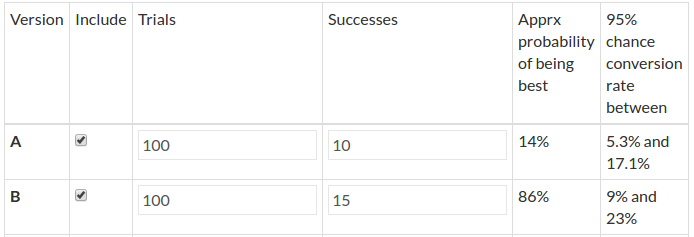

Say 100 visitors saw each variation, 10 converted on A and 15 on B.

Under these assumptions, variation A has a 14% chance of being the best variation, versus 86% for variation B.

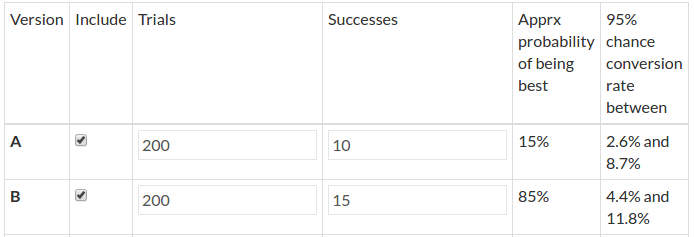

Now say that the above conversions occur after 2 visits on average. This doubles the number of trials (200 per variation, for the same number of conversions) and simulates a conversion rate per session rather than per visitor.

The results are very similar: there is just a 1% difference between the two experiments. So, if the goal is to see whether there is a significant difference between two variations (but not the size of that difference), then using the session as the unit of reference works just fine.

NB: This conclusion stays true as long as the number of visits per unique visitor is stable across all variations – which is not certain.
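The comparison above can be reproduced approximately with a short Monte Carlo sketch. It assumes a uniform Beta(1, 1) prior on each conversion rate, which is a common default but not necessarily the prior used by the calculators shown in the screenshots, so the figures should land close to those quoted without matching them exactly. The function name and parameters are illustrative.

```python
import random

def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=100_000, seed=0):
    """Estimate P(rate_B > rate_A) by sampling from Beta posteriors.

    Assumption: uniform Beta(1, 1) prior, so the posterior of each rate is
    Beta(1 + conversions, 1 + trials - conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        p_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        p_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += p_b > p_a
    return wins / draws

# Visitor-based counts: 100 visitors per variation.
p_visitors = prob_b_beats_a(10, 100, 15, 100)
# Session-based counts: same conversions, twice as many trials.
p_sessions = prob_b_beats_a(10, 200, 15, 200)

print(f"P(B is best), per visitor: {p_visitors:.3f}")
print(f"P(B is best), per session: {p_sessions:.3f}")
```

Both probabilities should come out within a couple of points of each other, which is the sense in which the choice of unit barely affects the question "which variation is best?".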

It’s impossible to measure confidence intervals for gain with the session approach

Confidence intervals for gain are crucial for interpreting results and making sound decisions. They describe the worst-case and best-case scenarios that could occur once the change leaves the test environment.

Here is another tool, also based on Bayesian statistics, that illustrates potential gain distribution: https://making.lyst.com/bayesian-calculator/

See results below for the same sample as previously:

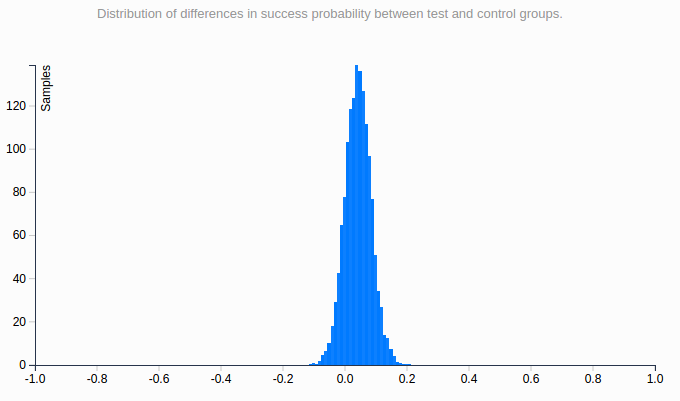

- 100 visits, 10 successes on variation A

- 100 visits, 15 successes on variation B

This curve shows the probability distribution of the real value of the gain linked to variation B.

The 95% confidence interval is [-0.05; +0.15], which means that, with 95% confidence, the actual value of the gain lies between -0.05 and +0.15.

Since most of the interval is positive, we can draw the same conclusion as before: B is probably the winning variation, but there are doubts.

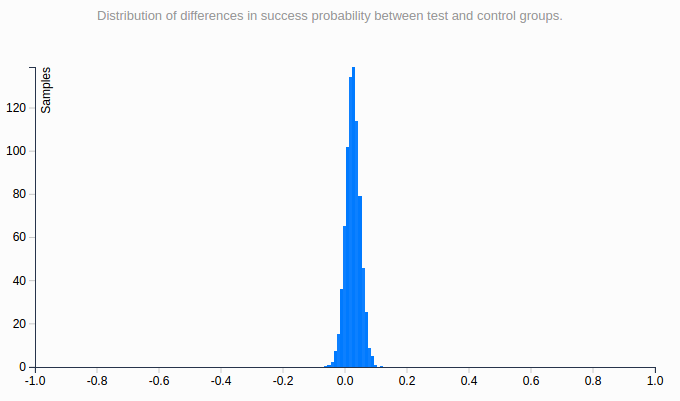

Now let’s say that there are 2 visits before conversion on average. The number of trials is doubled, as before. This is the kind of data Google Optimize would have.

Here is the curve showing the probability distribution of the real value of the gain.

This distribution is much narrower than the previous one, and the confidence interval is much smaller: [-0.025; +0.08]. It gives the impression of greater precision, but since the sample is exactly the same, it is not more precise! The higher the number of sessions before conversion, the more striking this effect becomes.
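The narrowing can be checked with a small simulation. This is a sketch under the same stated assumption of a uniform Beta(1, 1) prior, taking the gain to be the absolute difference between the two conversion rates; the calculator linked above may use different settings, so the bounds will be close to, but not identical to, the intervals quoted. The function name is illustrative.

```python
import random

def gain_interval(conv_a, n_a, conv_b, n_b, level=0.95, draws=100_000, seed=0):
    """Central interval for the gain (rate_B - rate_A) from posterior samples.

    Assumption: uniform Beta(1, 1) prior on each conversion rate.
    """
    rng = random.Random(seed)
    gains = sorted(
        rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        - rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        for _ in range(draws)
    )
    tail = (1 - level) / 2
    low = gains[int(tail * draws)]
    high = gains[int((1 - tail) * draws) - 1]
    return low, high

# Same conversions, counted per visitor and then per session (2 visits each).
visitor_low, visitor_high = gain_interval(10, 100, 15, 100)
session_low, session_high = gain_interval(10, 200, 15, 200)

visitor_width = visitor_high - visitor_low
session_width = session_high - session_low

print(f"per visitor: [{visitor_low:+.3f}; {visitor_high:+.3f}]")
print(f"per session: [{session_low:+.3f}; {session_high:+.3f}]")
print(f"width ratio: {session_width / visitor_width:.2f}")
```

The session-based interval should come out roughly half as wide, even though it describes the same visitors and the same conversions.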

The root of the problem is that the number of sessions per unique visitor is unknown and varies between segments, business models and industries. Calculating a reliable confidence interval is therefore impossible, even though that interval is essential for drawing accurate conclusions.

To conclude, the session-based approach promises to identify which variation is best but doesn’t help estimate gain. To me, this is highly limiting.

So why has Google made this (bad) choice?

To track a visitor over multiple sessions, Google would have to store the information server-side, and it would represent a huge amount of data. Given that Google Analytics is free, it is very likely that they try to save as much storage space as they can. Google Optimize is based on Google Analytics, so it’s no surprise they made the same decision for Google Optimize. We shouldn’t expect this to change anytime soon.

I’d say Google Optimize is very likely to gain substantial market share among small websites. Just as they chose Google Analytics, they will go for Google Optimize because it is free. More mature websites tend to see conversion rate optimization as a game changer and generally prefer technology that provides more accuracy: results based on unique visitors, that is, real customers.

Overall, the introduction of Google Optimize represents a great opportunity for the market as a whole. As the tool is free, it will likely raise awareness and build optimization skills across the digital industry. Perhaps even the general understanding of statistics will improve! As marketers put tests in place and realize that results don’t always hold outside the testing environment, they may very well look for more advanced and precise solutions.