Understanding your customers’ paths is no easy task. Each user has their own unique reason for visiting your site and an individual route that they take as they explore your pages.

How can you gain insights about your customers to improve your website’s usability and understand buying trends?

The answer is simple: build a customer journey map.

In this blog, we’ll cover what a customer journey is, what a customer journey map is, how to map the customer journey visually, templates for different customer journeys, a step-by-step guide to creating them, and examples of customer journeys in action. Let’s get started.

What is a customer journey?

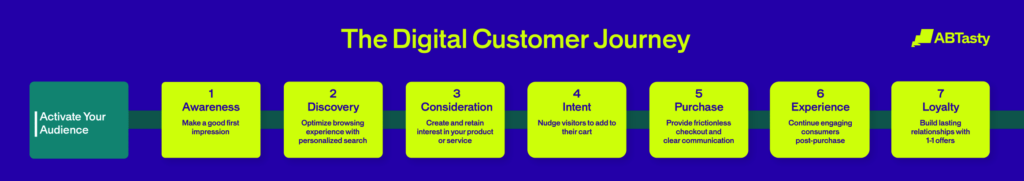

A customer journey is a combination of all the interactions customers have with your brand before reaching a specific goal.

Creating a compelling journey helps you stand out and shows customers that you care about their experience. An enjoyable customer journey promotes positive engagement, making for more satisfied customers that are more likely to return for repeat purchases.

By better understanding your customers, you’ll be able to provide them with the best possible user experience every time they visit your online store. The best way to do this is by creating visual customer journey maps that present all this information about customers at a glance.

What is a customer journey map?

A customer journey map is a visual representation of a customer’s interaction with your business or website. It’s used to define which parts of this process might not be working as smoothly as they should be, thus improving the customer’s experience.

The customer journey map is a (mostly) visual tool that helps businesses understand what a customer goes through when buying a product or service from them. It maps out in clear, concise, visual terms, the journey each customer is likely to experience through buyer personas and user data.

In essence, the best customer journey map is a story, brought to life visually, of the customer’s experience. It should be noted, however, that more complex information on the map may require text.

The map itself highlights “touchpoints,” which are specific elements of the customer’s interaction with a business. Each of these touchpoints – for example, seeking a product, researching its content, buying the product, waiting for delivery, and returning it if unsatisfied – can be judged as negative, neutral, or positive from the customer’s perspective.
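
If you keep your map in a spreadsheet or a script as well as in a diagram, the idea of rating touchpoints can be sketched in a few lines of Python. This is only an illustration: the `Touchpoint` and `Sentiment` names and the ratings are hypothetical, and the touchpoint names are borrowed from the examples above.

```python
from dataclasses import dataclass
from enum import Enum


class Sentiment(Enum):
    NEGATIVE = -1
    NEUTRAL = 0
    POSITIVE = 1


@dataclass
class Touchpoint:
    name: str
    sentiment: Sentiment


# Hypothetical ratings for a single persona (made-up values for illustration).
journey = [
    Touchpoint("seeking a product", Sentiment.POSITIVE),
    Touchpoint("researching the product", Sentiment.NEUTRAL),
    Touchpoint("buying the product", Sentiment.POSITIVE),
    Touchpoint("waiting for delivery", Sentiment.NEGATIVE),
    Touchpoint("returning the product", Sentiment.NEGATIVE),
]


def pain_points(touchpoints):
    """Return the names of touchpoints rated negative, in journey order."""
    return [t.name for t in touchpoints if t.sentiment is Sentiment.NEGATIVE]


print(pain_points(journey))
```

Filtering for the negative ratings gives you a quick list of the places on the map that deserve attention first.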

Customer journey maps require various research techniques that include hard data, customer feedback, and creative thinking. As such, no two maps are the same and each one will depend on many different factors that can’t be simplified or stereotyped as a matter of course.

The heart of customer journey maps: Buyer personas

Buyer personas are at the heart of a customer journey map tool and are broad representations, presented as fictional characters, based on real-life data and customer feedback. Typically, each project will create between three and seven buyer personas, each of which will require its own customer journey map.

The point of the customer journey map is to understand, as clearly as possible, what a customer will encounter when using your service. It will also help you improve the elements that are not functioning properly, are not easy to navigate, and show you how to make the entire experience more satisfying.

Each persona, and therefore the journey map itself, is not meant to be a perfect illustration of actual interactions. Rather, it’s a broad representation of the experience from the persona’s perspective.

Who Can Benefit From A Customer Journey Map?

There are many reasons why a customer journey map can be useful to a business. Customer satisfaction is more important than ever to a business, and it’s tied to loyalty to an extent that has not previously existed. Customers are more demanding, aware of their options, and willing to shop around.

By mapping each of the previously mentioned touchpoints, a well-designed customer journey map template can highlight any problems that clients might experience in the process of interacting with a business and help foster a relationship with an organization, product, service, or brand. This can occur across multiple channels and over a long period of time.

Once a customer journey map template has been designed, the entire enterprise can keep the customer at the forefront of the decision-making process. With a focus on the customer and their experience, or user experience (UX), any kinks, holes, or brick walls within the timeline’s touchpoints can be ironed out.

Bringing Together All Aspects Of The Business

Customer journey maps can help a business by bringing together departments with a focus on customer experience. To begin with, all departments can be engaged to discuss issues that customers may face when dealing with them. This is no small thing as many departments may not be used to dealing with customers, yet the decisions they take may have a profound effect on UX. By creating an understanding of how each touchpoint affects UX across the entire business, decisions can be made from an empathetic perspective.

Traditional marketing stops at the point of purchase, but customer experience does not necessarily end there. For example, perhaps the purchase was not to their satisfaction and they want to return the goods. Departments that might not typically be involved in touchpoints before purchase now have a central role to play. How easy is it for the customer to find the return information on a website? If they need information on delivery or collection times, how likely are they to get a response that will satisfy them? This all requires forethought and a policy that keeps customer experience central to design and organization.

How to map the customer journey visually

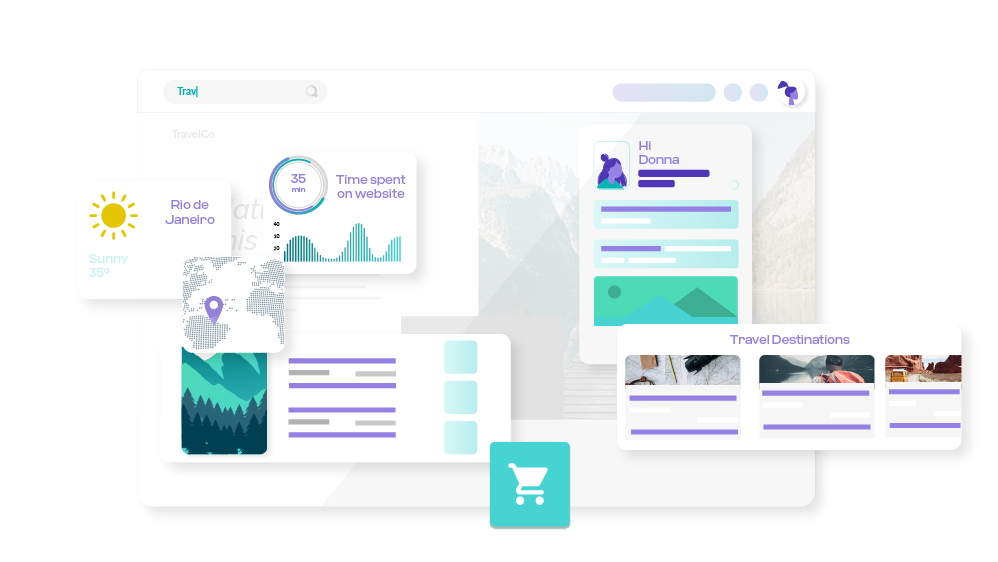

A customer journey map is a visual representation that helps you gain better insight into your customers’ experiences (from start to finish) from their point of view.

There are two vital elements to creating a customer journey map:

- Defining your customers’ goals

- Understanding how to map their nonlinear journey

By mapping out a customer’s digital journey, you are outlining every possible opportunity that you have to produce customer delight. You can then use these touchpoints to craft engagement strategies.

According to Aberdeen Group (via Internet Retailer), companies with strong multi-channel engagement strategies retain an average of 89% of their customers, compared to 33% for companies with weak ones.

To visually map every point of interaction and follow your customer on their journey, you can use Excel sheets, infographics, illustrations, or diagrams to help you better understand their experience.

Customer journey maps also help brands with:

- Retargeting goals with an inbound viewpoint

- Targeting a new customer group

- Forming a customer-centric mindset

All of these lead to better customer experiences, which lead to more conversions and an increase in revenue.

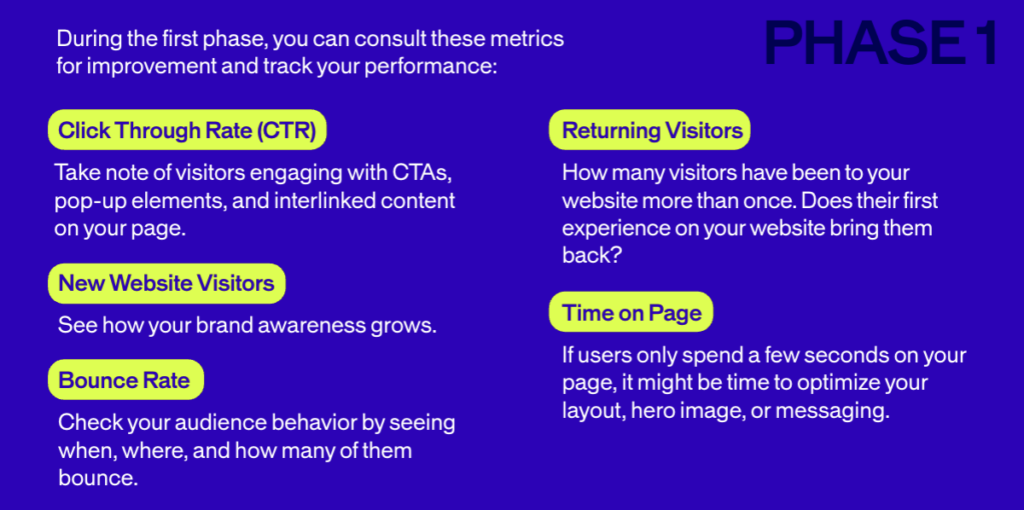

Want more information on the digital customer journey? Check out our digital customer journey resource kit for a detailed e-book, an editable workbook, a use case booklet, and an infographic.

Examples of Customer Journey Map Templates and Which to Choose

There are four different types of customer journey maps to choose from. Each map type highlights different customer behaviors as they interact with your business at different points in time. Which template is right for you depends on your goals.

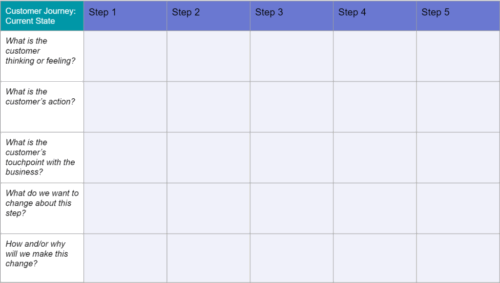

1. Current state template

The current state template is the most commonly used journey map. It focuses on what customers currently do, think, and feel during interactions.

It’s great for highlighting existing pain points and works best for implementing incremental changes to customer experiences.

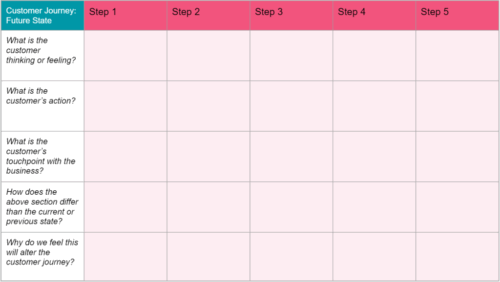

2. Future state template

The future state template focuses on what customers will do, think, and feel during future encounters. It’s useful for conveying a picture of how customers will respond to new products, services, and experiences.

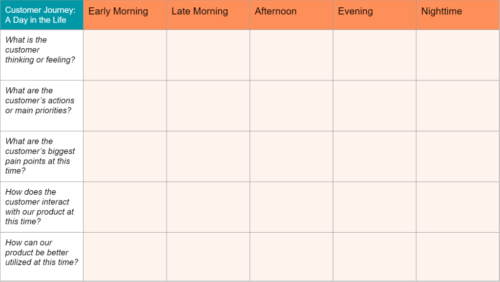

3. Day in the Life Template

This template is similar to the current state template because it visualizes present-day customer behaviors, thoughts, and feelings. However, this template assesses how customers behave both with your organization and with peers in your area.

This type of journey map works best for spurring new initiatives by examining unfulfilled needs in the market.

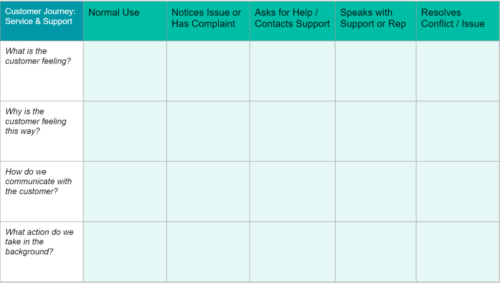

4. Service Blueprint Template

When creating a service blueprint template, you typically begin with an abridged version of a current or future state journey map. Then you add a network of people, methods, procedures, and technologies responsible for giving a simplified customer experience, either in the present or in the future.

Current state blueprint maps are beneficial for recognizing the source of current pain points, whereas future state blueprint maps help create an environment that will be necessary for providing a planned experience.

How to Create a Customer Journey Map (7 Steps)

Creating customer journey maps may feel repetitive, but the design and application you choose will vary from map to map. Remember: customer journeys are as unique as your individual customers.

Step 1: Create Buyer Personas

Before creating a journey map, it’s important to identify a clear objective so you know who you’re making the map for and why. Building personas is the most time-consuming part of the process. It requires detailed research, including qualitative and quantitative data, and is the foundation of the entire process. A persona is a highly relatable and rounded fictional character, generalized, but not stereotyped.

Buyer personas help define customer goals, providing a deeper understanding of their needs and topics of interest. More detail makes for more realistic personas, which means you’ll need to do a fair amount of market research to acquire this data.

Start by creating a rough outline of your buyer’s persona with demographics like age, gender, occupation, education, income, and geography. When you have that in place, you’ll need to get psychographic data on your customers. This kind of information may be harder to collect compared to demographic data, but it is worthwhile to understand customer preferences, needs and wants.

In short, demographics tell you who your customers are and psychographics provide insights into the why behind their behavior. Collecting concrete data on your customers helps you serve them better and deliver a more personalized user experience.
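
One way to keep personas consistent across your team is to store them in a fixed structure. The Python sketch below is a minimal example of that idea, assuming the demographic fields listed above and three psychographic lists (preferences, needs, wants). All class names, field names, and sample values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Demographics:
    """The 'who': basic facts about the persona."""
    age: int
    gender: str
    occupation: str
    education: str
    income: int  # annual income, in whatever currency you report in
    geography: str


@dataclass
class Psychographics:
    """The 'why': what drives the persona's behavior."""
    preferences: List[str] = field(default_factory=list)
    needs: List[str] = field(default_factory=list)
    wants: List[str] = field(default_factory=list)


@dataclass
class BuyerPersona:
    name: str
    demographics: Demographics
    psychographics: Psychographics

    def is_complete(self) -> bool:
        """True once at least some psychographic data has been collected."""
        p = self.psychographics
        return bool(p.preferences or p.needs or p.wants)


# A made-up persona for illustration only.
persona = BuyerPersona(
    name="Busy Parent",
    demographics=Demographics(38, "female", "nurse", "bachelor's degree", 62000, "suburban"),
    psychographics=Psychographics(needs=["fast checkout"], wants=["free returns"]),
)
print(persona.is_complete())
```

A check like `is_complete` is a simple reminder that a persona built from demographics alone is only half finished.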

Step 2: Select Your Target Customer

After making several customer personas, it’s time to do a “deep dive” into each to build a more accurate reflection of their experience.

Start by analyzing their first interaction with your brand and mapping out their movements from there.

What questions are they trying to answer? What is their biggest priority?

Step 3: List Customer Touchpoints

Any interaction or engagement between your brand and the customer is a touchpoint.

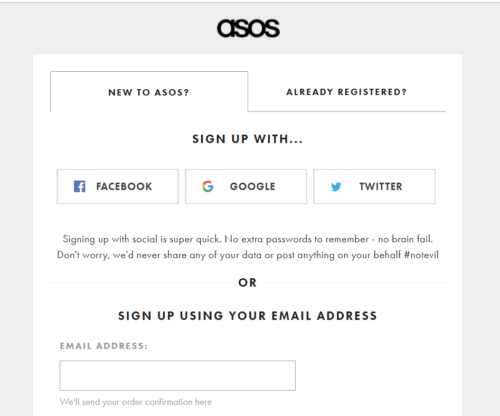

List all the touchpoints in the customer journey, considering everything from the website to social channels, paid advertisements, email marketing, third-party reviews, or mentions.

Which touchpoints have higher engagement? Which touchpoints need to be optimized?

All customer journey mapping examples are unique. Therefore, touchpoints on one map are unlikely to work for another. In fact, every business needs to update its buyer personas and customer journey maps as their business changes. Even quite subtle changes can have profound effects on the customer journey map template.

Step 4: Identify Customer Actions

Once you have identified all your customer touchpoints, identify common actions your customers make at each step. By dividing the journey into individual actions, it becomes easier for you to improve each micro-engagement and move them forward along the funnel.

Think of how many steps a customer needs to reach the end of their journey. Look for opportunities to reduce or streamline that number so customers can reach their goals sooner. One way to do this is by identifying obstacles or pain points in the process and creating solutions that remove them.

This is a great time to use the personas you created. Understanding the customer will help you troubleshoot problem areas.

Anticipating what your customer will do is another important part of mapping the customer journey. Accurate predictions lead to you providing better experiences, which ultimately leads to more conversions.

Step 5: Understand Your Available Resources

Creating customer journey maps presents a picture of your entire business and highlights every resource being used to build the customer experience.

Use your plan to assess which touchpoints need more support, such as customer service. Determine whether these resources are enough to give the best customer experience possible. Additionally, you can correctly anticipate how existing or new resources will affect your sales and increase ROI.

Step 6: Analyze the Customer Journey

An essential part of creating a customer journey map is analyzing the results.

Now that you have your data, customer journey mapping template, touchpoints, and goals, it’s time to put it all together and define where the UX is meeting expectations and where things can be improved. It is important to note that mapping where things are going well is almost as important as defining what isn’t. What works well in one part of the journey can often be applied to other areas.

As you assess the data, look for touchpoints that might drive customers to leave before making a purchase or areas where they may need more support. Analyzing your finished customer journey map should help you address places that aren’t meeting customers’ needs and find solutions for them.
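
If your analytics tool can export visitor counts per step, a short script can show where the biggest drop-off happens. The Python sketch below assumes a simplified, linear sequence of steps, which real journeys rarely follow exactly. The step names and counts are made up for illustration.

```python
# Hypothetical visitor counts at each step, listed in journey order.
funnel = [
    ("landing page", 1000),
    ("product page", 600),
    ("cart", 240),
    ("checkout", 180),
    ("purchase", 150),
]


def drop_off_rates(steps):
    """Return (from_step, to_step, share_lost) for each consecutive pair of steps."""
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        # Share of visitors at step A who never reached step B.
        lost = (count_a - count_b) / count_a if count_a else 0.0
        rates.append((name_a, name_b, lost))
    return rates


worst = max(drop_off_rates(funnel), key=lambda r: r[2])
print(f"Biggest drop-off: {worst[0]} -> {worst[1]} ({worst[2]:.0%})")
# prints "Biggest drop-off: product page -> cart (60%)"
```

The step with the largest share of lost visitors is a natural first candidate for the extra support or redesign described above.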

Take the journey yourself and see if there’s something you missed or if there is still room for improvement. Doing so will provide a detailed view of the journey your customer will take.

Follow your map with each persona and examine their journeys through social media, email, and online browsing so you can get a better idea of how you can create a smoother, more value-filled experience.

One of the best ways of pinpointing where things are not going to plan is through customer feedback. This is typically done through surveys and customer support transcripts.

Step 7: Take Business Action

Having a visualization of what the journey looks like ensures that you continuously meet customer needs at every point while giving your business a clear direction for the changes they will respond to best.

Any variations you make from then on will promote a smoother journey since they will address customer pain points.

A great way to test your variations to find out what better serves your customers throughout their user journey is by leveraging A/B testing.
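
To give a feel for what an A/B test compares, here is a minimal Python sketch of a two-proportion z-test on conversion rates. It is a generic statistical illustration, not how any particular testing platform computes its results. The function name and the visitor and conversion numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; cannot compare the variations")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up results: variation A converts 120 of 2,400 visitors, B converts 156 of 2,400.
z, p = two_proportion_z_test(120, 2400, 156, 2400)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference between the two variations is unlikely to be random noise, though sample size and test duration matter too.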

AB Tasty is a best-in-class A/B testing solution that helps you convert more customers by leveraging experimentation to create a richer digital experience – fast. This experience optimization platform embedded with AI and automation can help you achieve the perfect digital experience with ease.

Analyzing the data from your customer journey map will give you a better perspective on changes you should make to your site to reach your objective.

Once you implement your map, review and revise it regularly. This way, you will continue to streamline the journey. Use analytics and feedback from users to monitor obstacles.

Customer Journey Map Examples

Customer journey map templates vary widely. Some look like works of art, while others look like the work of a child, but as long as they are clear and concise, they can be effective.

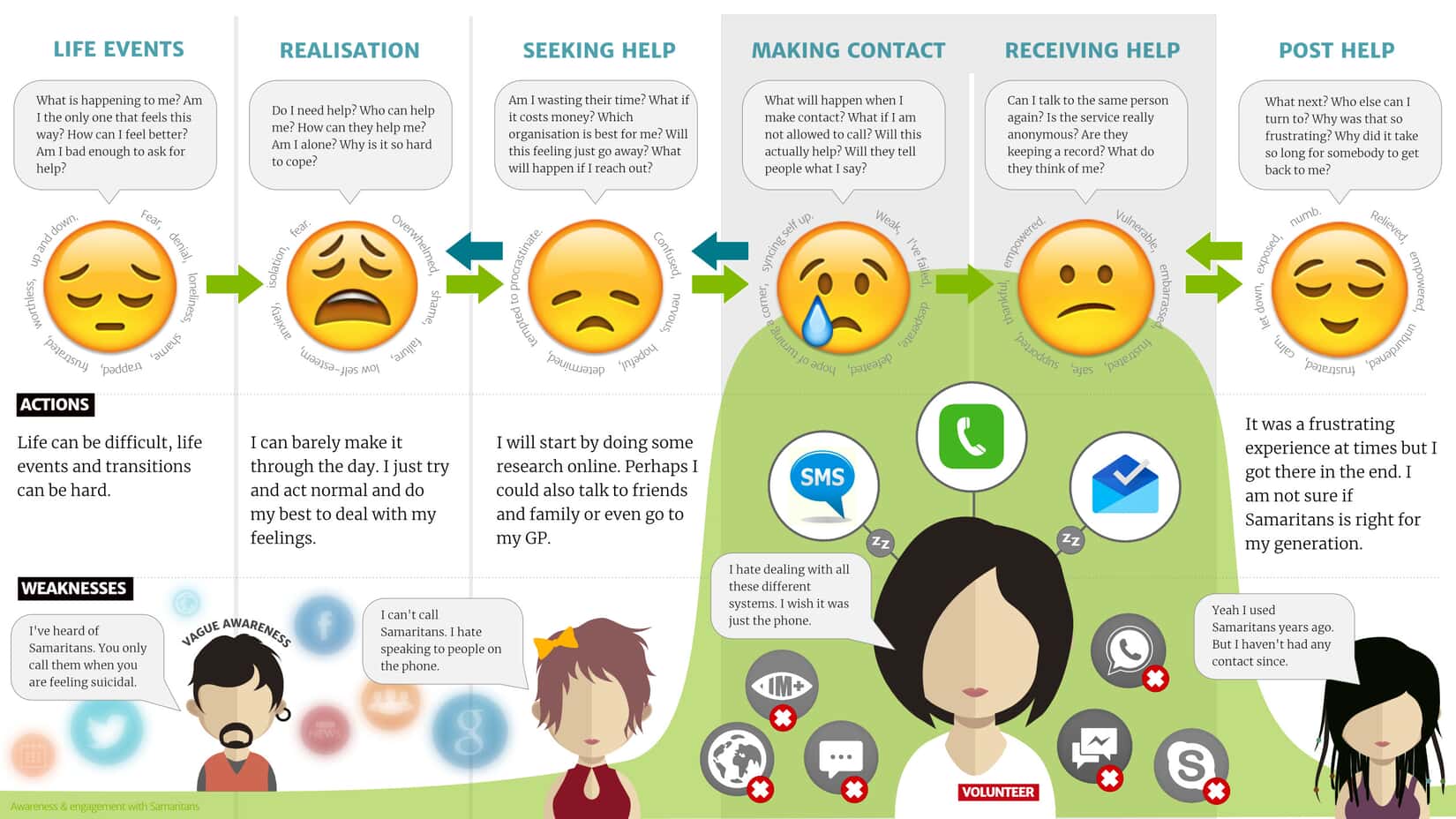

This customer journey map for the charity ‘The Samaritans’ is a highly empathetic map, focused on the purpose of the charity itself. Note how the text is highly visual and therefore makes it easy to relate to the image of the map itself.

This is an example of a map that gives the impression of a journey, rather than a linear UX. This can help push home the point that customer experience is rarely easy to define as a journey from A to B.

The Truth about Customer Journeys

Customer journeys are ever-changing. Journey maps help businesses stay close to their customers and continuously address their needs and pain points. They provide a visual of different customers, which helps businesses understand the nuances of their audience and stay customer-focused.

Customer journey maps can vary widely, but all maps share the same steps. With regular updates and the proactive removal of roadblocks, your brand can stand out, provide meaningful engagement, improve customer experiences, and see positive business growth.