The future of digital experience optimization has arrived and it’s driven by AI.

Are you ready for it?

AI can be a sensitive subject, as the loudest voices in the room claim that AI will replace people, careers, or even entire sectors of society. We’re scaling back the dystopian imagery and instead finding ways AI can be your sidekick, not a supervillain.

There are two sides to the coin with AI: it can help optimize your time and boost conversions, but it can also be risky if not used properly. We’ll dig into the ways AI can be a helpful tool, as well as some precautions to keep in mind.

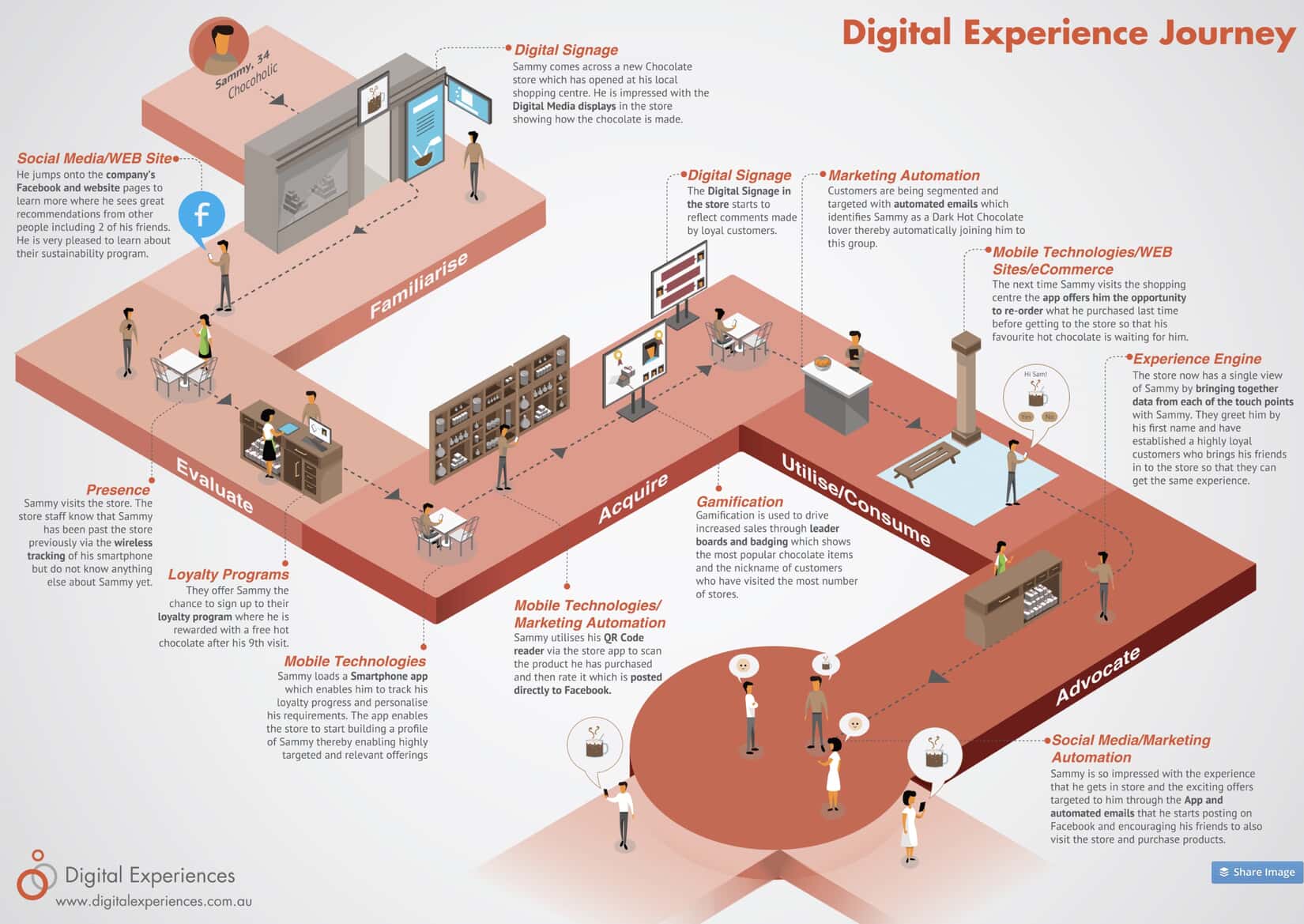

The positive impact of AI on your customer experience roadmap

In one of our last pieces about AI in the CRO world, we discussed 10 generative AI ideas for your experimentation roadmap. Now we’re back with even more ideas and concrete examples of successful campaigns.

1. Display reassurance messages to visitors who value it

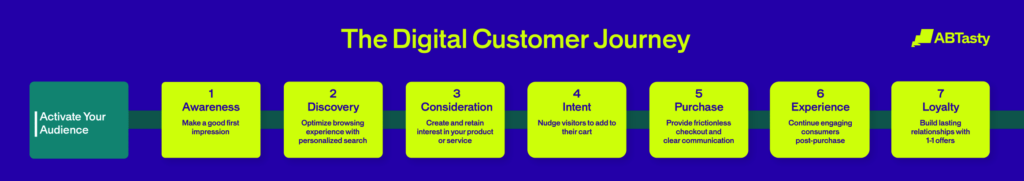

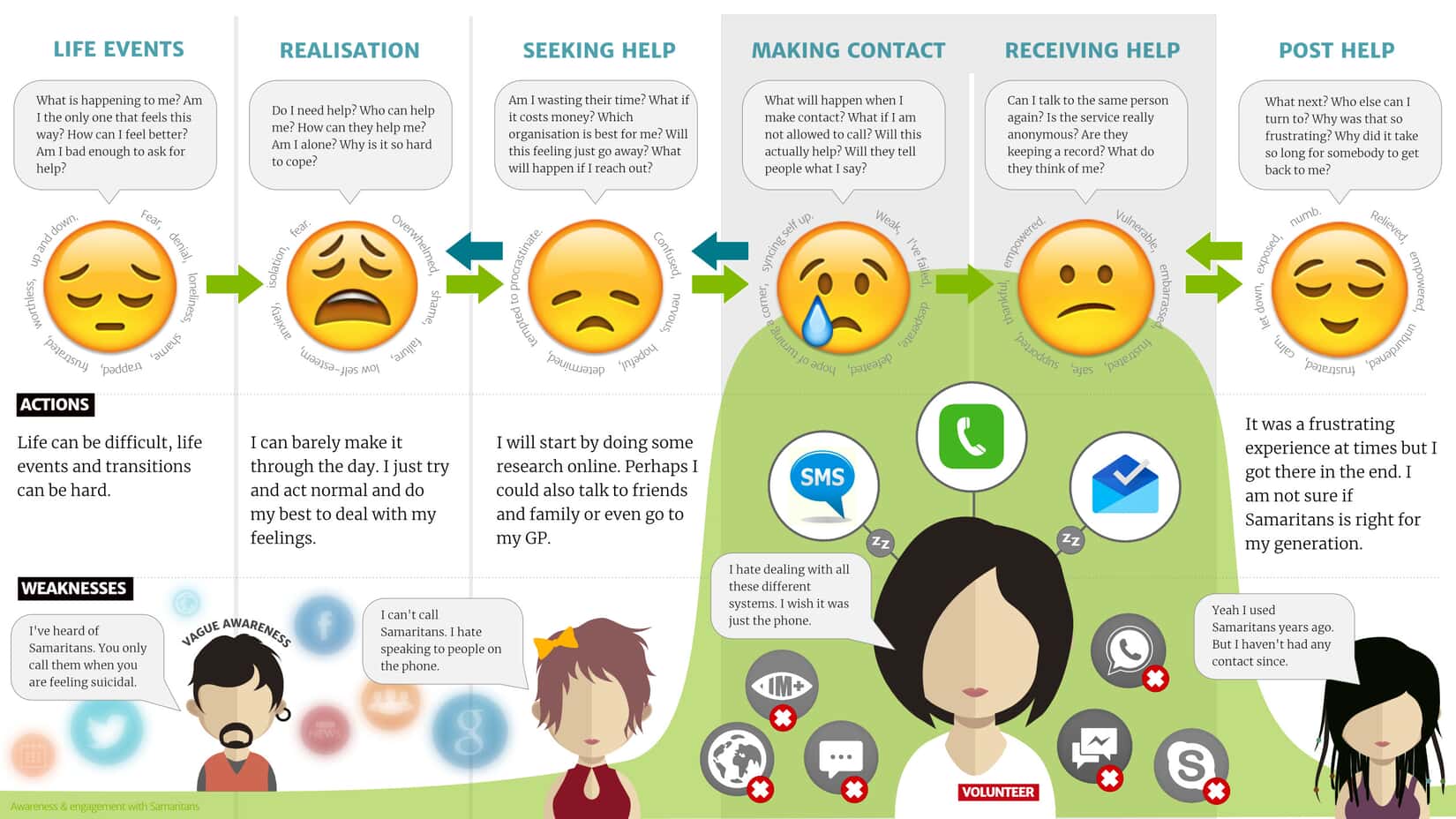

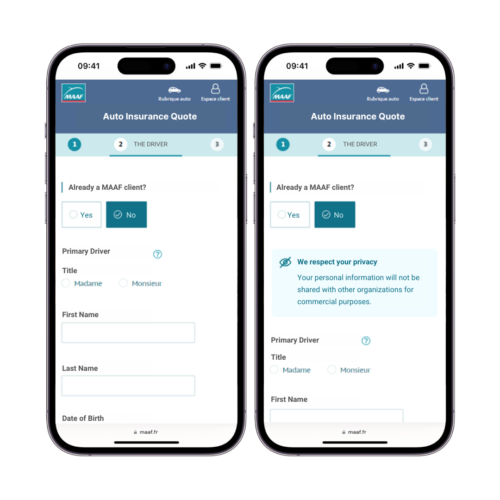

Some shoppers value their privacy and data safety above all else. How can you comfort these visitors while they’re shopping on your website without interfering with other visitors’ journeys? While salespeople can easily gauge these preferences in face-to-face interactions, online shoppers deserve the same personalized experience when they shop independently.

Let’s see an example below of how you can enhance the digital customer experience for different shoppers at the same time:

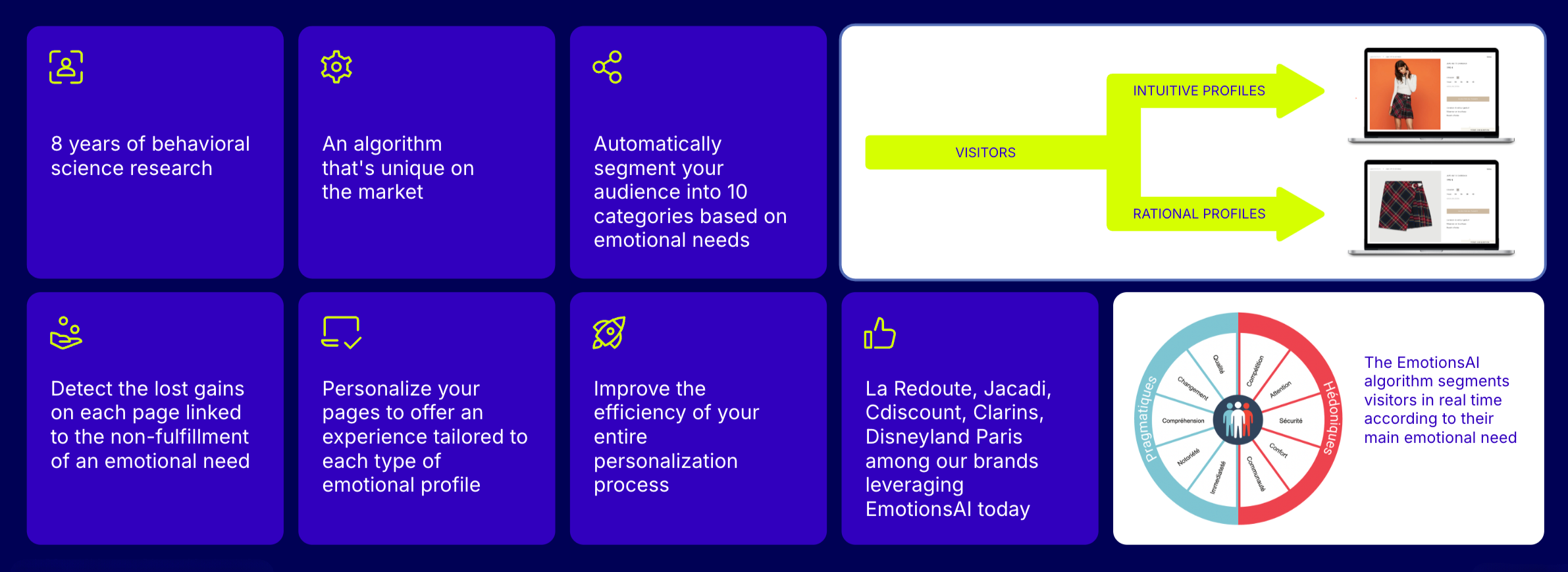

MAAF, a French insurance provider, knows just how complex buying auto insurance can be for visitors. Some shoppers prioritize safety and reassurance messages, while others don’t. With AI systems that segment visitors based on emotional buying preferences, you can detect and cater to this type of profile without disrupting other shoppers’ journeys. “Intuitive” profiles are receptive to reassurance messages, while “rational” profiles tend to see these extra messages as a distraction.

The team at MAAF used advanced AI technology to overcome this exact challenge. Once the “intuitive” profiles were identified, the team displayed personalized messages affirming MAAF’s commitment to protecting customers’ data. As a result, they saw a 4% increase in quote rates among visitors in the intuitive segment, while other profiles continued on their journey without extra messaging.
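To make the idea concrete, here is a minimal sketch of segment-conditional messaging. It is not MAAF’s implementation: the `Visitor` class, the `reassurance_banner` function, and the banner copy are all hypothetical, and the profile label is assumed to come from an upstream segmentation tool.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical banner copy for illustration only, not MAAF's actual wording.
REASSURANCE_MESSAGE = "Your data is protected and never shared without your consent."


@dataclass
class Visitor:
    visitor_id: str
    # Assumed to be supplied by an upstream segmentation model:
    # "intuitive" or "rational".
    profile: str


def reassurance_banner(visitor: Visitor) -> Optional[str]:
    """Return banner text for intuitive profiles, or None to leave the page unchanged."""
    if visitor.profile == "intuitive":
        return REASSURANCE_MESSAGE
    return None
```

Returning `None` for every other profile keeps their journey exactly as it was, which mirrors the point above: only the visitors who value reassurance see the extra message.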

2. Segment your audience based on their shopping behavior

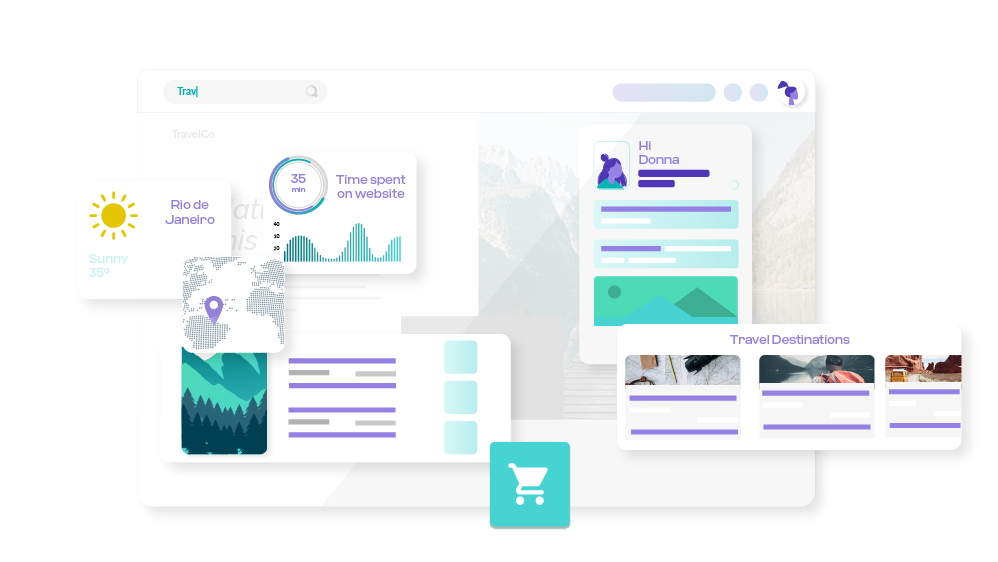

With so many online shoppers, how can you possibly personalize your website to give each shopper the best user experience? With AI-powered personalization software.

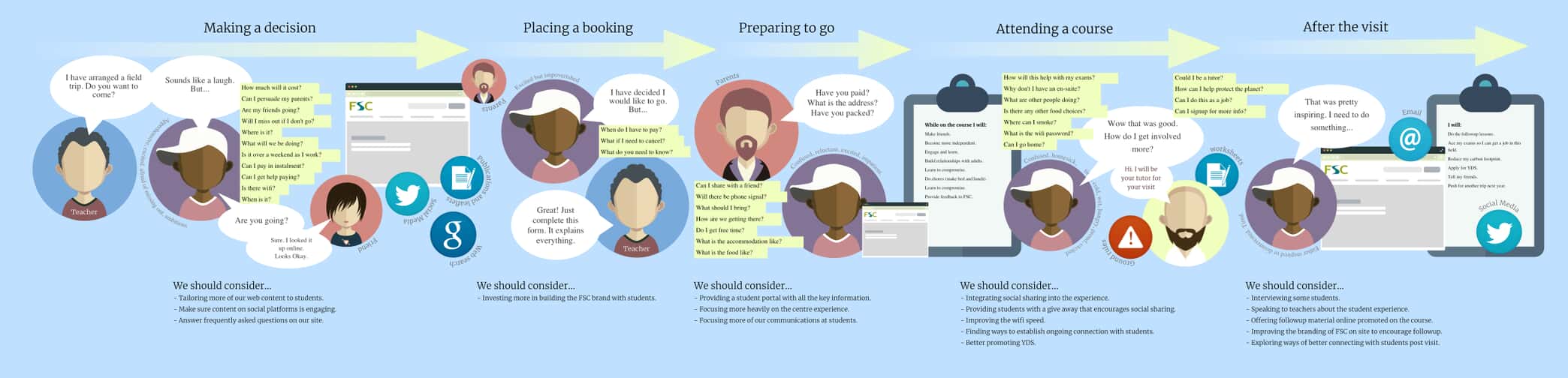

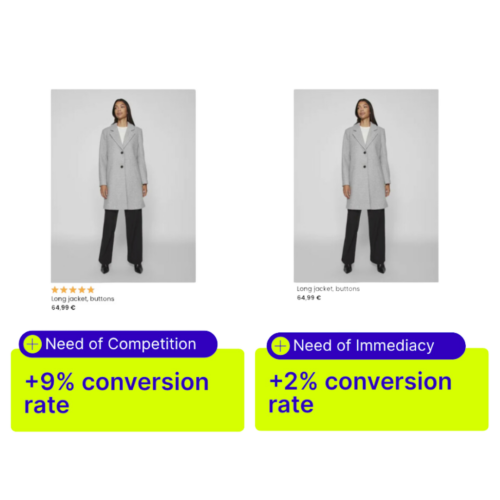

Some online shoppers have a need for competition. Don’t we all know someone who loves to turn everything into a competition? These “competitive” shoppers are susceptible to social-proof messaging and are influenced by the opinions of other customers while searching for the best product. One of the best ways to personalize a listing page for competitive shoppers is to show ratings from their peers.

Meanwhile, what works for competitive shoppers will not work as well for speedy shoppers. Shoppers with a need for immediacy will appreciate a clear, no-frills browsing experience. In other words, they don’t want to get distracted. Let’s look at the example below.

This website implemented two different segments targeting online shoppers with a need for “competition” and “immediacy.” These two segments brought in a 9% and a 2% increase in conversion rates, respectively. The campaign was a success, but how did it work?

Using AB Tasty’s AI personalization engine, EmotionsAI, this online shop identified its visitors’ main emotional needs and directed them toward a product listing page best suited for them. EmotionsAI turns buyer emotions into data-driven sales with actionable insights and targeted audiences.

Want to learn more about EmotionsAI? Get a demo to see how AI can impact your roadmap for the better!

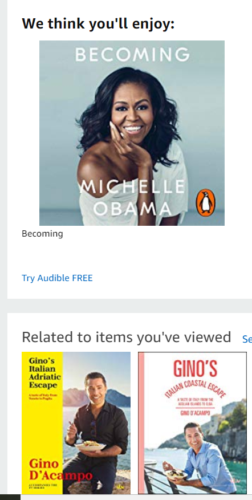

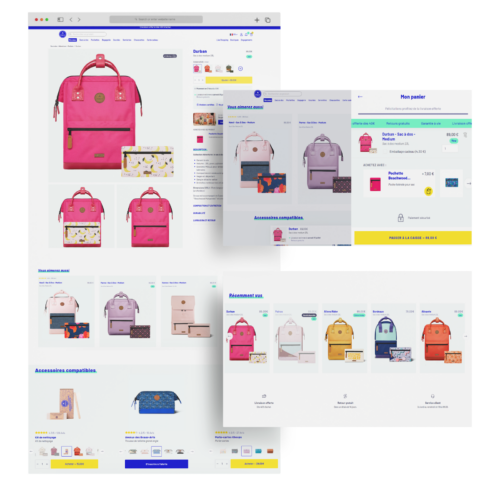

3. Automate and personalize your product recommendations

European backpack designer Cabaïa used an AI-powered recommendation engine to generate personalized recommendations for its website visitors based on collected user data. The team at Cabaïa previously managed product recommendations manually but wanted to shift their focus to improving the digital customer experience.

AI recommendation tools put the right product in front of the right person, helping boost conversions with a more tailored experience. Since implementing this AI-powered recommendation engine, Cabaïa has seen a 13% increase in revenue per visitor, a 15% increase in conversions, and a 2.4% increase in visitors’ average cart size.

4. Innovate your testing strategy with emotional targeting

According to an online shopper study (2024), traditional personalization is no longer enough. Personalizing based on age, location, and demographics just isn’t as precise anymore.

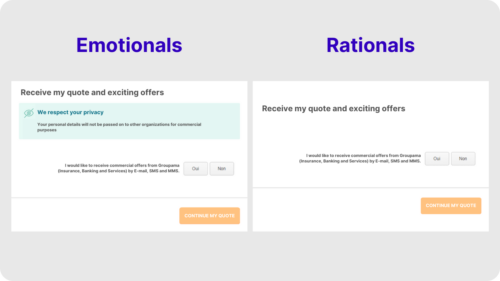

The team at Groupama, a multinational insurance group, wanted to take A/B testing a step further and better adapt their approach to fit their customers’ unique emotional needs. By using an AI-powered emotional personalization engine, they were able to identify two large groups of website visitors: emotionals and rationals.

They created an A/B test based on these customer profiles. One variation catered to “emotional” buyers by showing reassuring data-protection messaging on the insurance quote. The other catered to “rationals” by displaying the insurance quote without any extra messaging, giving them a distraction-free buyer journey. Within two weeks, Groupama saw a quick win with a 10% increase in quote submissions.
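For readers who want to see how an increase like this is typically calculated, here is a small sketch. It assumes the reported figure is a relative uplift over the control, which the case study does not state. The visitor and submission counts are invented for illustration and are not Groupama’s data; the function names are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted (for example, submitted a quote)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_uplift(control_rate: float, variation_rate: float) -> float:
    """Relative change of the variation's rate compared with the control's rate."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variation_rate - control_rate) / control_rate


# Invented example numbers, NOT taken from the case study.
control = conversion_rate(conversions=200, visitors=10_000)
variation = conversion_rate(conversions=220, visitors=10_000)
print(f"Relative uplift: {relative_uplift(control, variation):.1%}")
```

A relative uplift compares the two rates to each other, so moving from a 2.0% to a 2.2% submission rate counts as roughly a 10% increase even though the absolute difference is only 0.2 percentage points.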

5. Simplify the customer journey and build buyer confidence

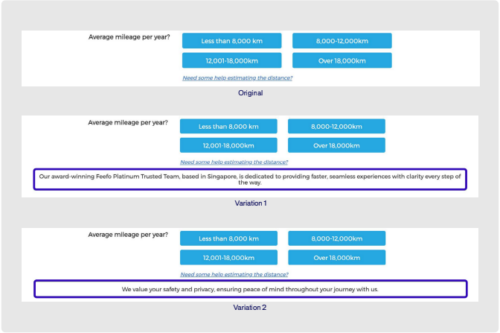

Like many financial services, purchasing insurance is inherently complex. Consumer behaviors and expectations in insurance are quickly changing.

As a leading insurer in Singapore, DirectAsia has embraced innovative technologies to better serve its customers. By pioneering new technology, DirectAsia was able to segment its visitors based on emotional needs.

The team at DirectAsia identified that the ‘safety’ segment (buyers needing reassurance) was the top unsatisfied emotional need for visitors on both desktop and mobile devices. With these insights, DirectAsia ran an experiment on ‘safety’ visitors, displaying two banners to reassure them and move them further down the form to the quote page.

One banner increased access to the quote page by 10.9%, and the other increased it by 15%.

The potential risks of AI on your customer experience roadmap

Artificial intelligence has been evolving (very quickly!) over the past few years and it can be tempting to run full speed ahead. However, it’s important to find the right AI that works for you and helps you achieve your goals. Is AI powering something you need, impacting your business, or is it just there to impress?

With that in mind, let’s consider some precautions to take while using AI:

- Inaccurate or biased information in data reports, website copy, etc.

When researching or asking for data sources, it’s important to keep in mind that artificial intelligence can get it wrong. Just as humans can make mistakes and have biased opinions, AI can do the same. Since AI systems are trained to produce information following patterns, AI can unintentionally amplify bias or discrimination.

- Over-reliance, dependence, and reduced creativity

Excessive reliance on AI can reduce decision-making skills, creativity, and proactive thinking. In competitive industries, you need creativity to stand out in the market to capture your audience’s attention. Your roadmap could suffer if you put too much faith in your tool. After all, you are the expert in your own field.

- Data and privacy risks

Protecting your data should always be a top concern, especially in the digital experience world. You will want a trusted partner who uses AI with safeguards in place and has a good track record on data privacy. As AI capabilities develop quickly, handling your data correctly and safely becomes more of a challenge. As a general rule, avoid uploading sensitive data into any AI system, even if it seems trustworthy. Because these systems often require large quantities of data to generate results, privacy concerns arise if your data is misused or stored inappropriately.

- Hallucinations

According to IBM, AI hallucinations happen when a large language model (LLM) perceives patterns that aren’t really there, leading to random or inaccurate results. AI models cannot tell when a response is a hallucination, since they lack an understanding of the world around us. It’s important to be aware of this possibility because these systems are trained to present their conclusions as factual.

Conclusion: Using AI in the Digital Customer Experience

Like any tool or software, AI is most powerful when it enhances your team rather than attempting to replace it. Embracing the use of AI in your digital customer experience can lead to incredible results. The key is to be aware of the risks and limitations, and to understand how to use it effectively to achieve your business goals.