What is the Halo Effect?

The Halo Effect is our tendency to form an overall impression of a person based on a single trait. If X is good-looking, X must also be a duty-bound citizen who follows the law to the letter. Illogical, but that’s how it works.

Let’s go back in time and surprise ourselves a bit.

A 1974 study found that when it came to sentencing defendants in a court of law, jurors showed more leniency towards physically attractive individuals than unattractive ones, even though they had committed exactly the same crime. Another study revealed that social workers found it hard to believe that a beautiful person could commit a crime.

Can you believe it? A more important question: Do people continue to take good looks this seriously?

Very much so. Even when there’s enough information available to make accurate judgments, our brain prefers taking shortcuts. The shortcut, in this case, is a cognitive bias called the Halo Effect.

To an extent, the Halo Effect is a type of confirmation bias, since we judge people in a manner that confirms our first impression of them or what we already believe about them. We turn to it to fill the gaps in our understanding of a person we don’t know yet. In that sense, it can also backfire: if we don’t like them, it leads to negative biases. X criticizes, so X is arrogant.

Tracing Its Origins

It was Edward Thorndike who first introduced the term ‘Halo Effect’ in a 1920 paper, A Constant Error in Psychological Ratings. In his research, military commanding officers were asked to assess their subordinate soldiers on a series of traits, including intellect, leadership skills, personal qualities (intelligence, loyalty, responsibility, selflessness, and cooperation), and physical qualities (voice, neatness, physique, etc.). He found that high ratings of one quality correlated with high ratings of other traits, whereas negative ratings of one quality pulled down other trait scores.
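To make "ratings of one quality correlated with ratings of other traits" concrete, here is a minimal sketch of how such a correlation could be measured. The ratings below are invented purely for illustration and are not Thorndike's data; the function name `pearson` and the 1-to-10 scale are assumptions as well.

```python
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical ratings (1-10) that one officer gives six soldiers.
# These numbers are made up for illustration only.
physique = [8, 6, 9, 4, 7, 3]
leadership = [7, 6, 9, 5, 8, 3]

r = pearson(physique, leadership)
print(f"correlation between physique and leadership ratings: {r:.2f}")
```

A value close to 1 means the two sets of ratings rise and fall together. If physique and leadership were judged independently, you would expect a much weaker link; a consistently strong one across unrelated traits is the pattern a halo produces.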

Although this theory was proposed in reference to people, the metaphor extends to brands, website design, marketing strategies, and advertising.

In each case, it reiterates one fact: We are not always as objective as we believe we are. Even our most rational decisions are sometimes flawed and misguided.

How to Create a Halo Effect

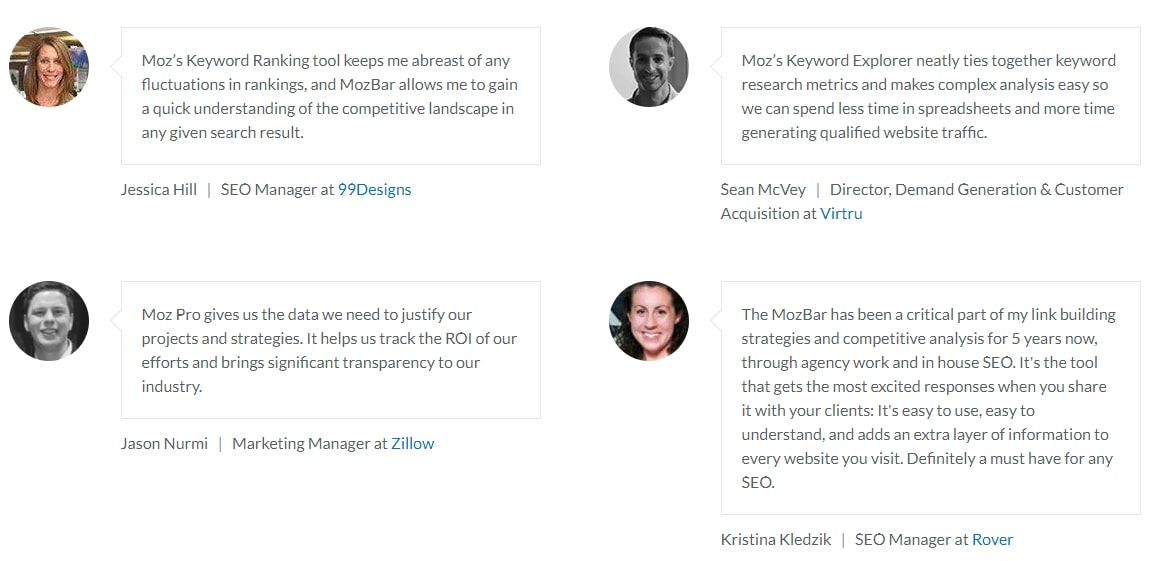

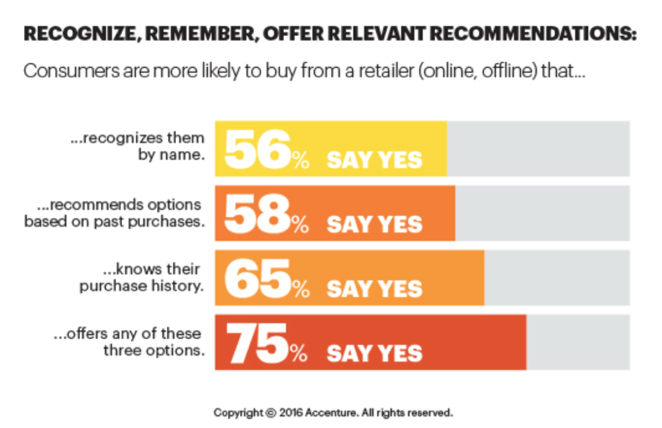

Marketers have actually been benefiting from creating a Halo Effect on their websites and brands for a long time. To get ahead of the game, the key is to know where the limit is – consumers aren’t blind to these efforts, and overdoing it can backfire.

Now, if you’re ready, here’s how to pull all the right strings to create a positive first impression.

Put Attractiveness to Good Use

When someone good-looking becomes the face of your brand, the perceived value of your product increases. It’s called the attractiveness halo effect, and marketers favor this strategy because it pays off. What really happens? Researchers claim that, on seeing an attractive man or woman in an advertisement, people bypass their rational faculties and buy on impulse. They assume ‘what is beautiful is good’ and transfer the model’s attractiveness (an easily observed characteristic) to the product. Interestingly, though, this phenomenon is mostly restricted to beauty-related products.

Now, if you’re a beauty brand, it’s worth noting that some researchers have recorded negative responses (p. 22) toward attractive models, especially among female consumers. This, in turn, had a negative impact on the ad’s effectiveness. One likely explanation is social comparison with the models. Men are not immune either: their self-esteem also takes a blow when they see stereotypical male models.

Other studies demonstrate positive consumer responses to less stereotypical depictions, like the Dove “Real Women” campaign. This has encouraged many brands to feature unretouched photos of models to keep things as real as possible. In such cases, the Halo Effect works strongly in the brand’s favor.

Bottom line: Use attractive models if they are relevant to the products and services you offer.

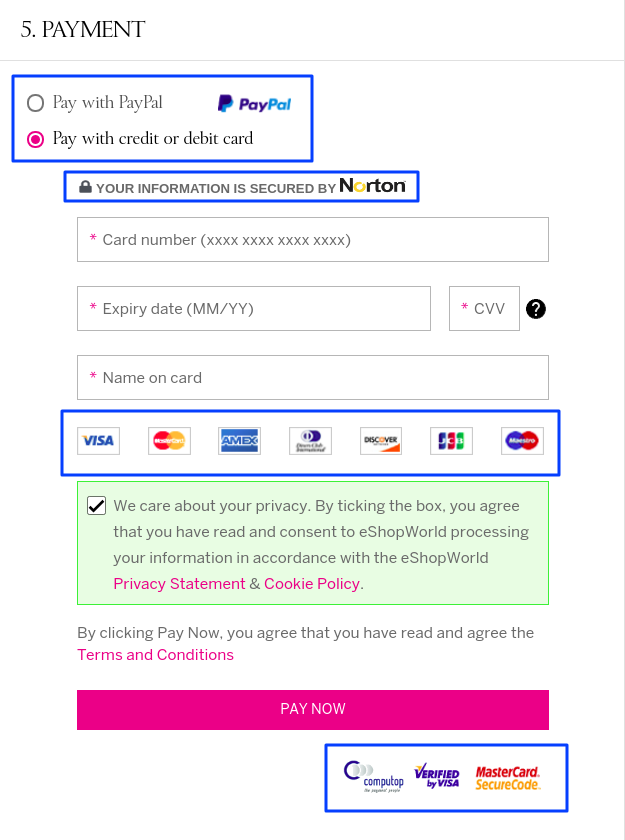

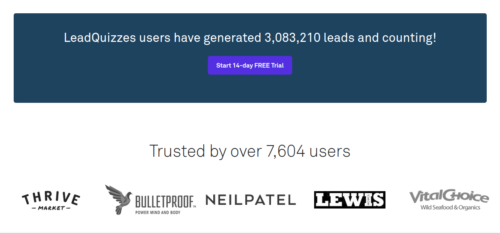

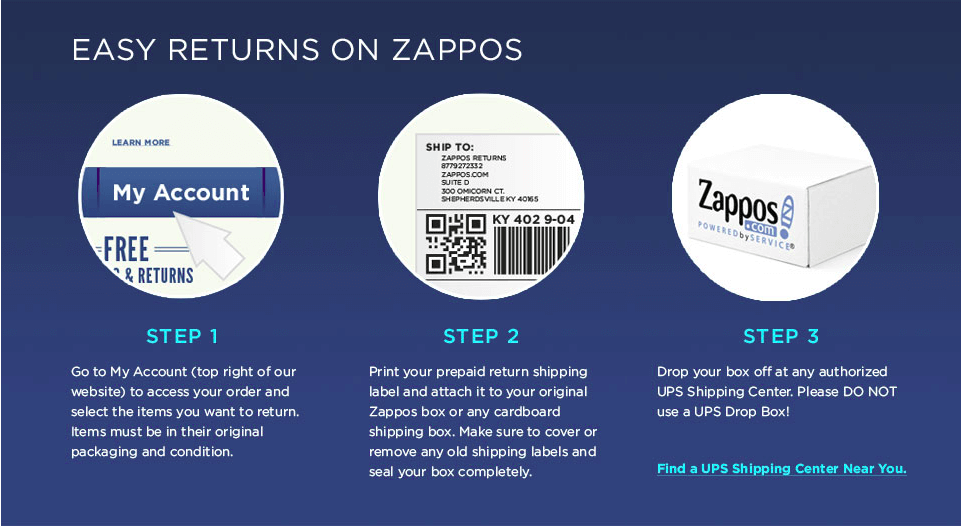

Pleasing Website Design

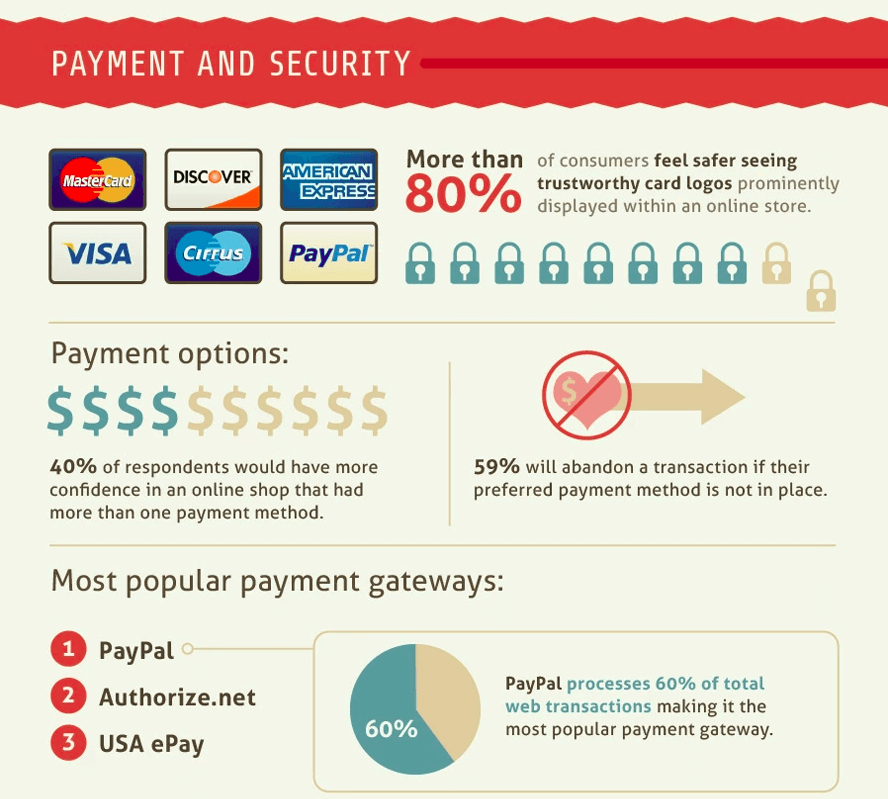

From people to websites, we do prefer anything that is remotely good-looking. The logic goes: Beautiful = Trustworthy.

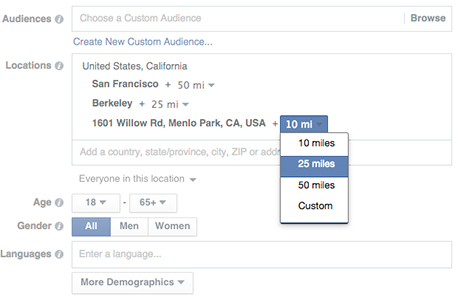

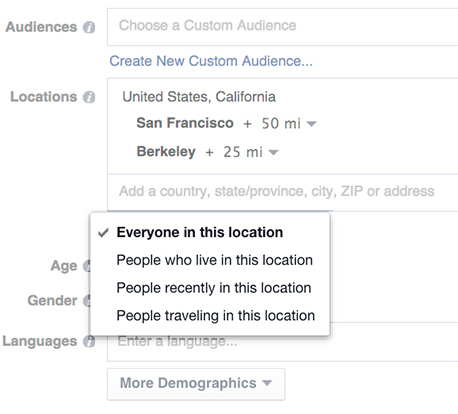

Findings published by ConversionXL Institute confirm: People look at design-related elements to determine whether they should trust a website or not.

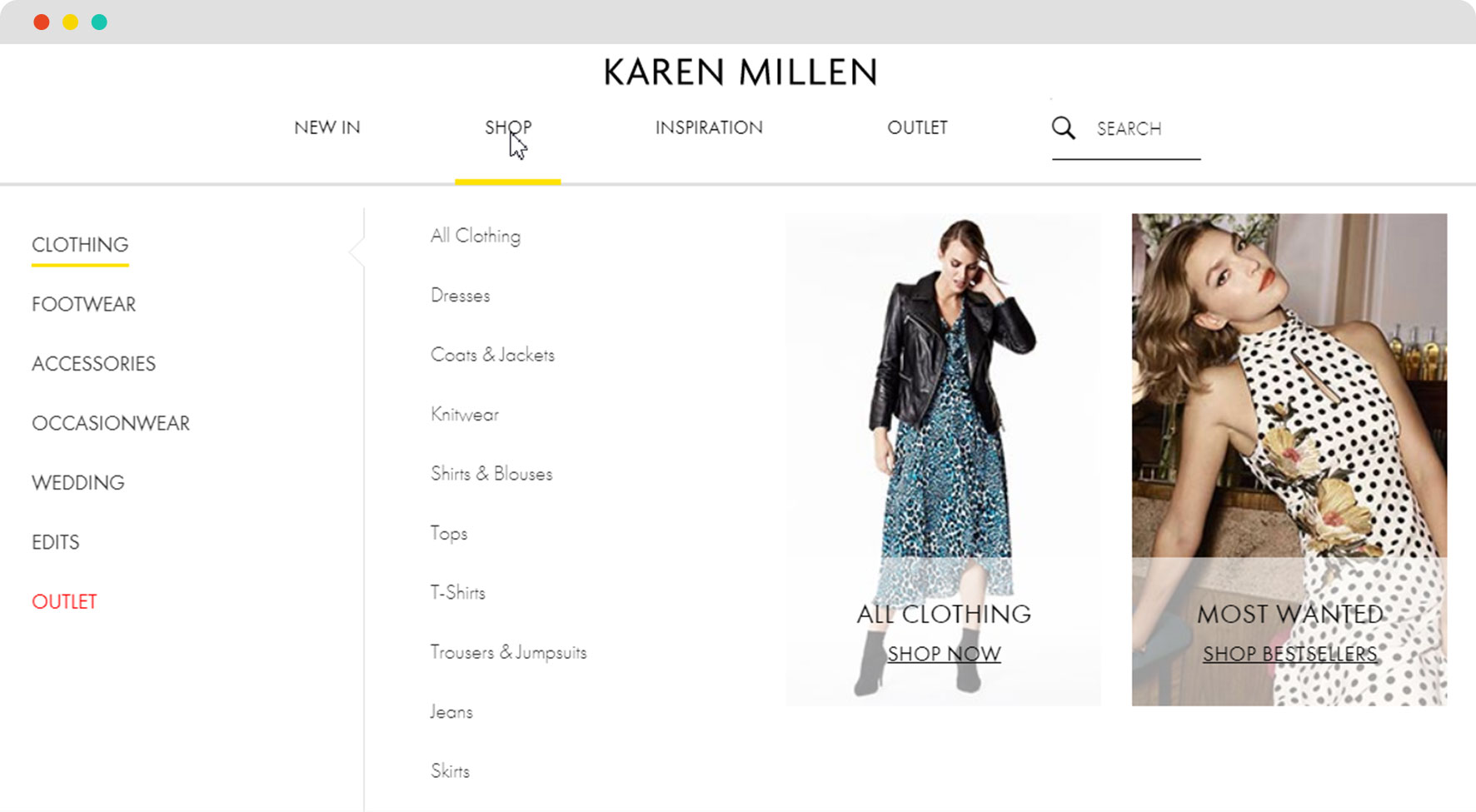

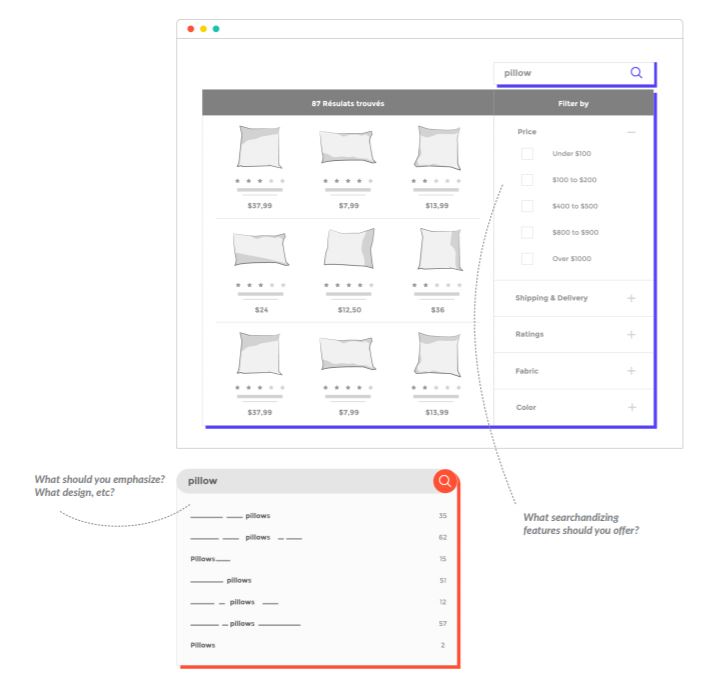

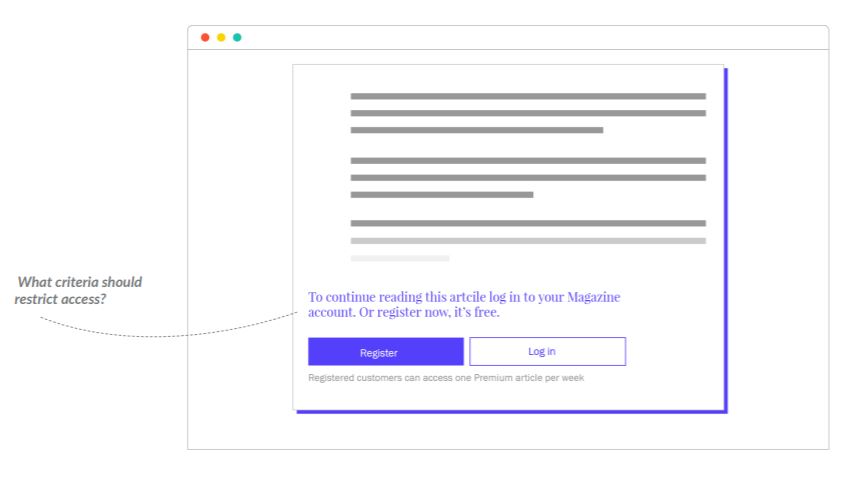

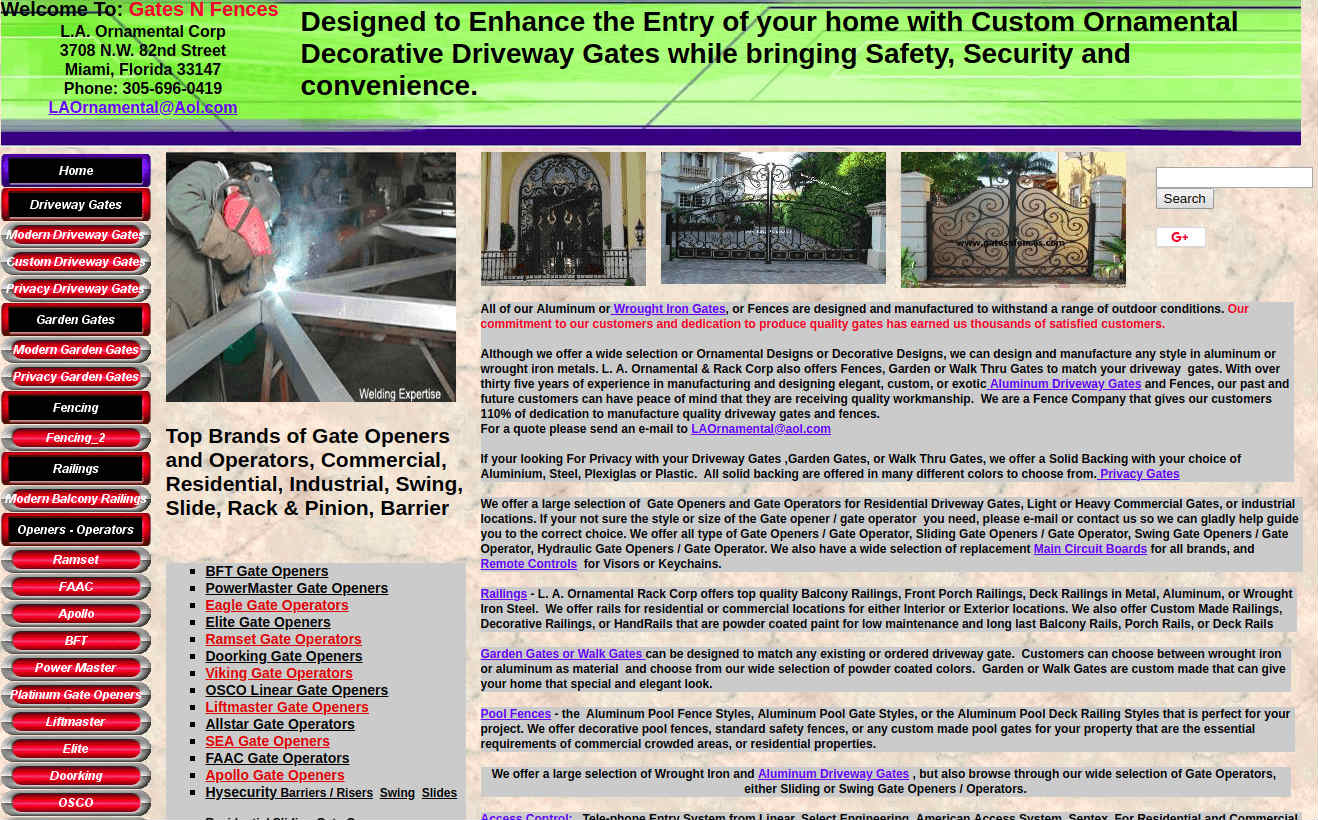

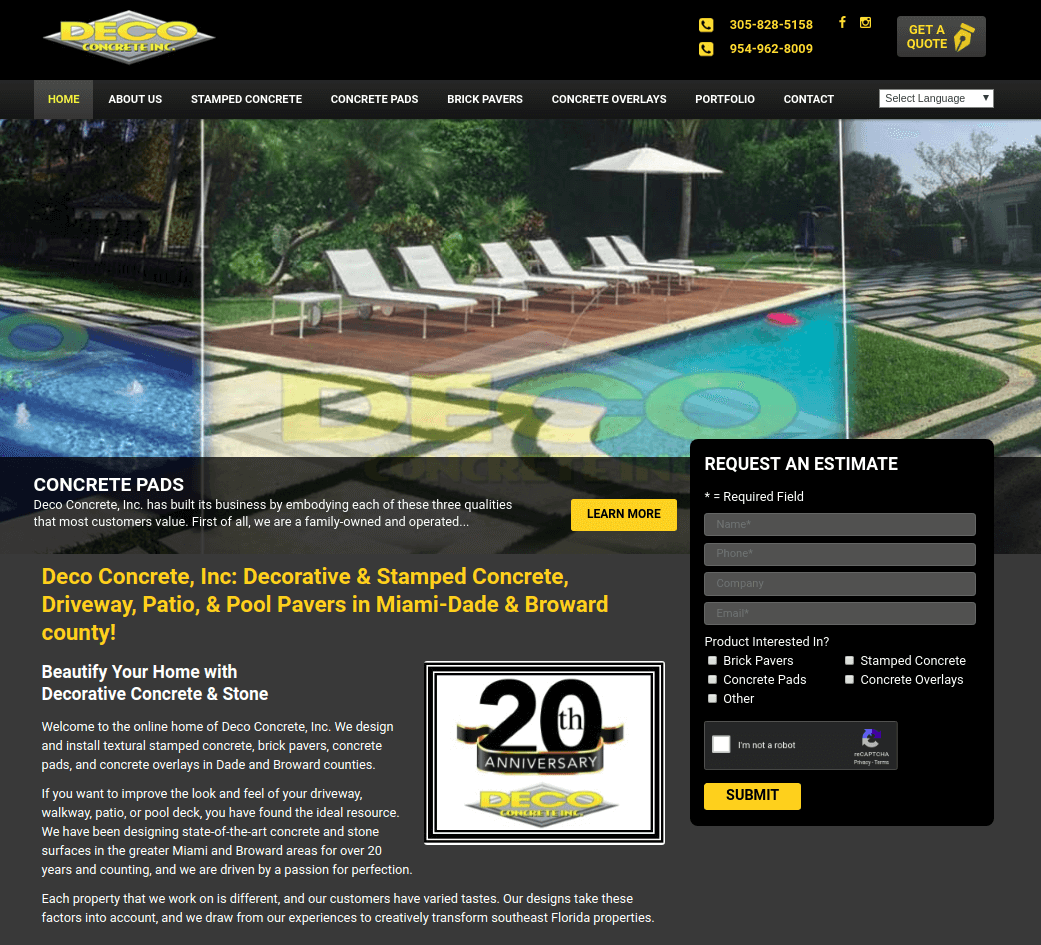

See for yourself. Of the two screenshots below, which website do you think you trust more?

The second one, because it looks better design-wise? Correct. It’s less cluttered and more purpose-driven.

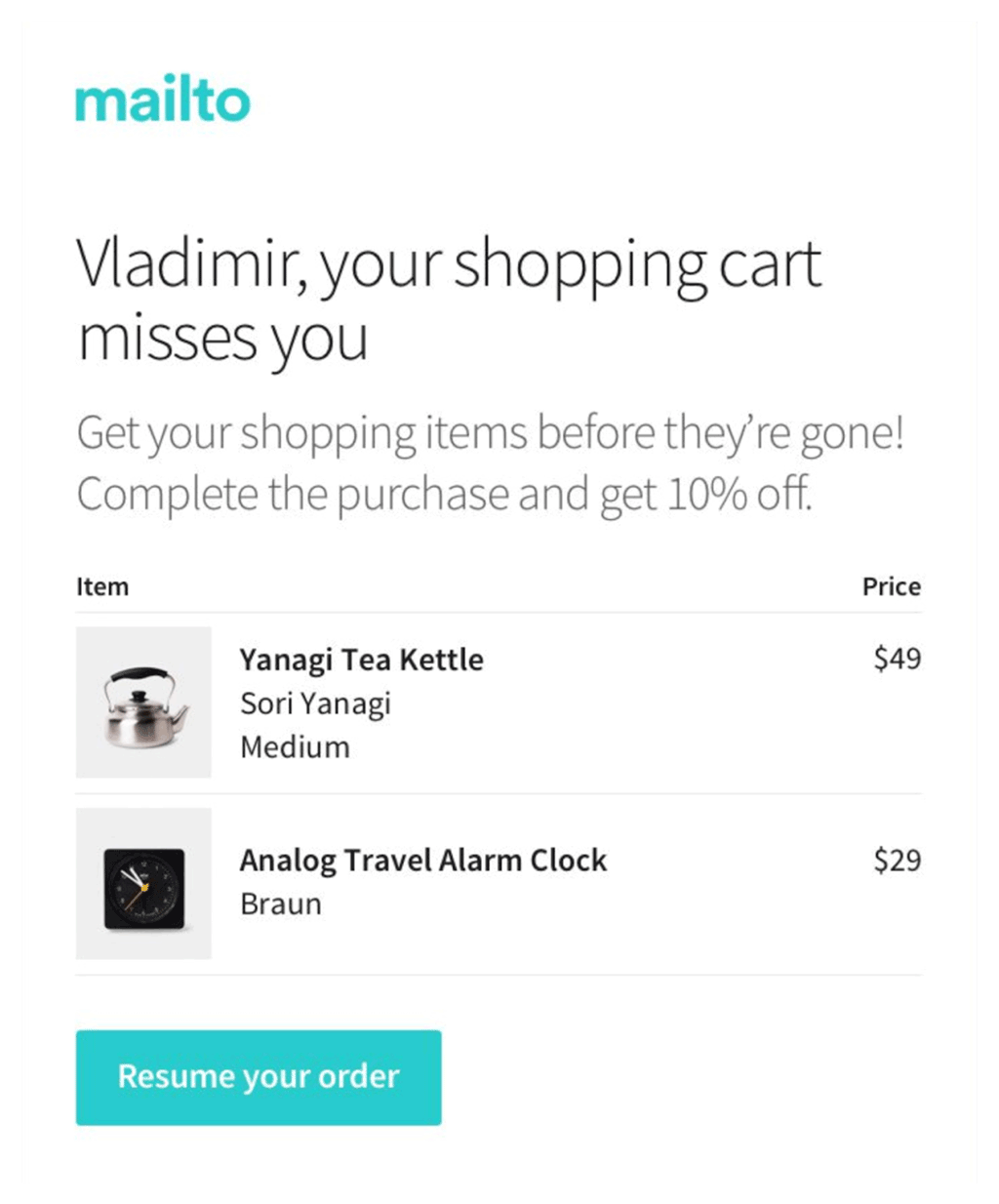

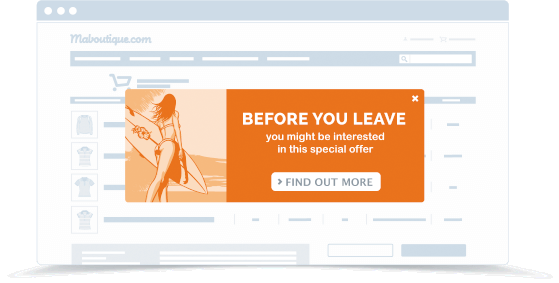

This brings us to the next point: Creating an eye-catching website is only half the job. Unless it is easy for users to find what they are looking for and carry out the necessary functions, they won’t care to stop by later.

The question to now ask is: What do you do?

You identify user experience issues, because even one bad experience can hurt your company’s reputation, and no amount of cosmetic change can fix that. Marks & Spencer tried and failed. Their redesigned website had several usability and performance issues, which caused an 8.1% drop in sales.

Bottom line: Aesthetic web design and usability go hand-in-hand.

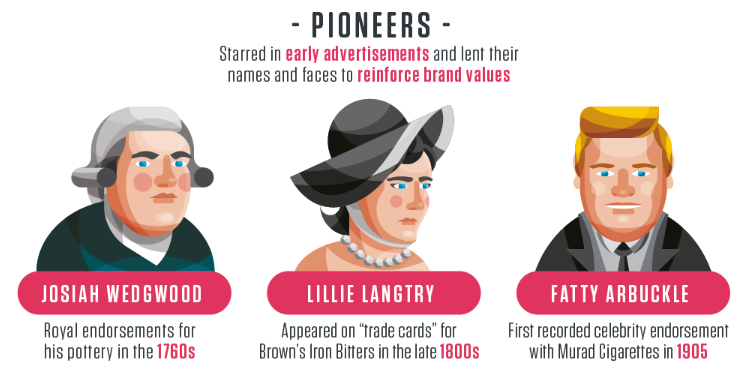

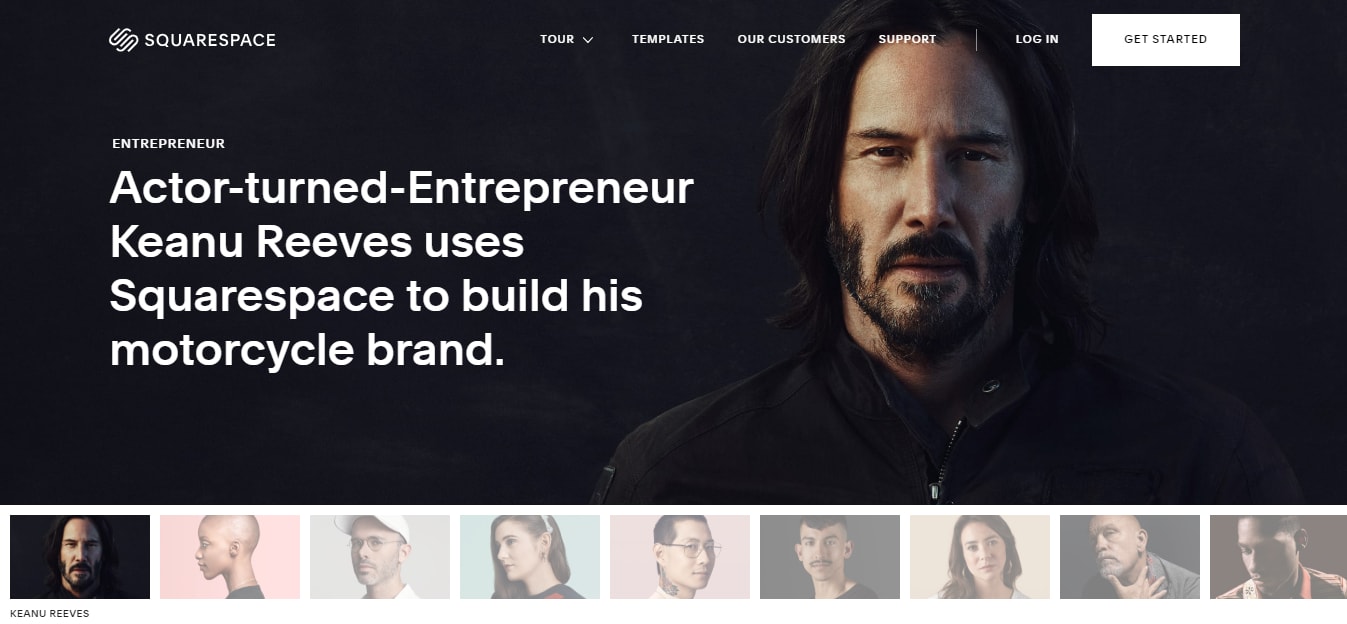

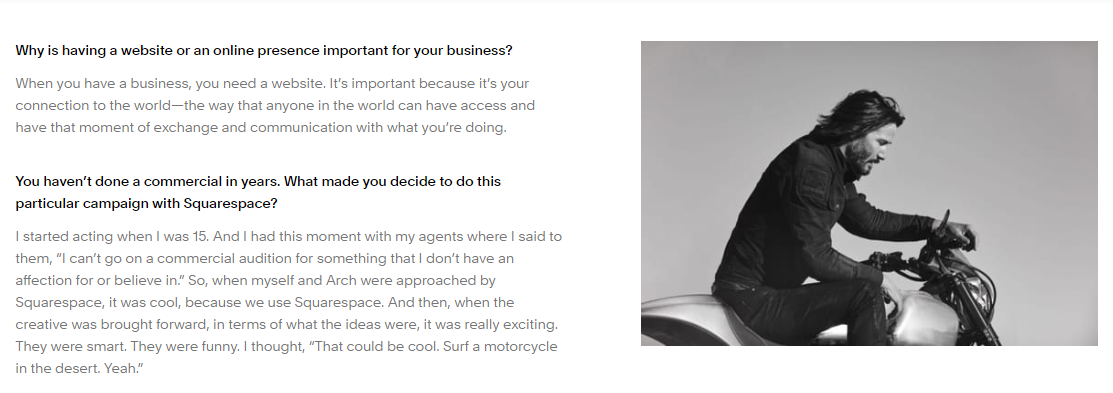

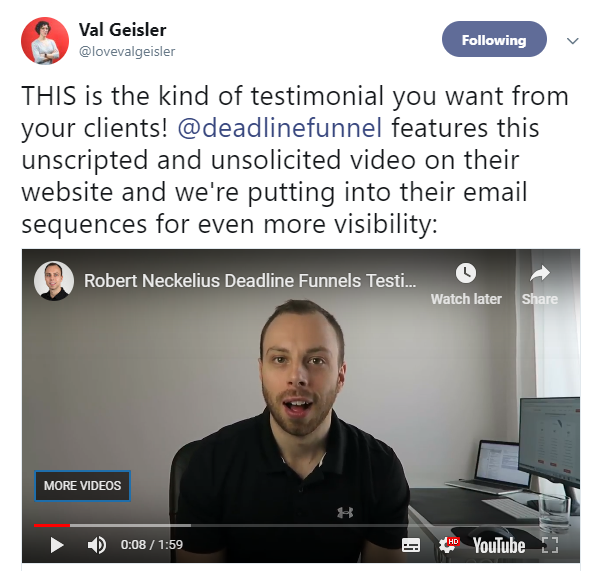

Borrow Celebrity Influence

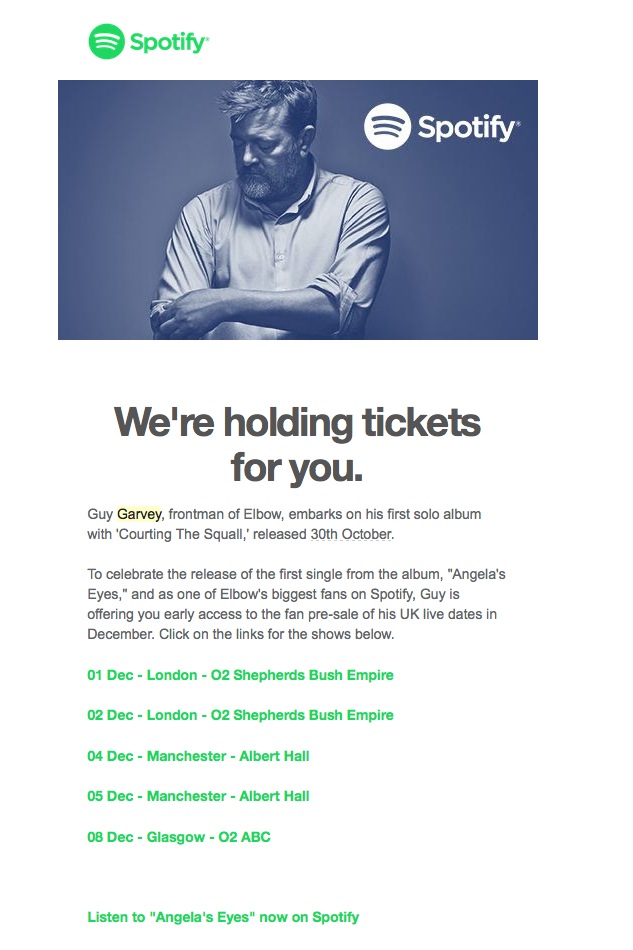

Signing up celebrities to endorse you is the fastest way to amplify the trustworthiness of your brand. How does it work? Well, fans admire their favorite celeb and, by extension, trust the brands that celeb represents.

That said, getting a celeb onboard is a complicated process. There are a few things to take care of:

- The celeb’s public image should be a natural fit with your existing and intended brand image.

- Their celebrated status shouldn’t overshadow your brand and products.

- They shouldn’t endorse too many other products, and certainly not your competitors’. It dilutes your ad’s recall value.

But even when all the necessary precautions are taken, companies face backlash when a celeb goofs up. This can, of course, never be predicted, which is why it’s always better to keep an eye on what the celebrity has been up to.

Bottom line: Celebs are a means to an end; use their popularity such that they don’t overshadow your brand.

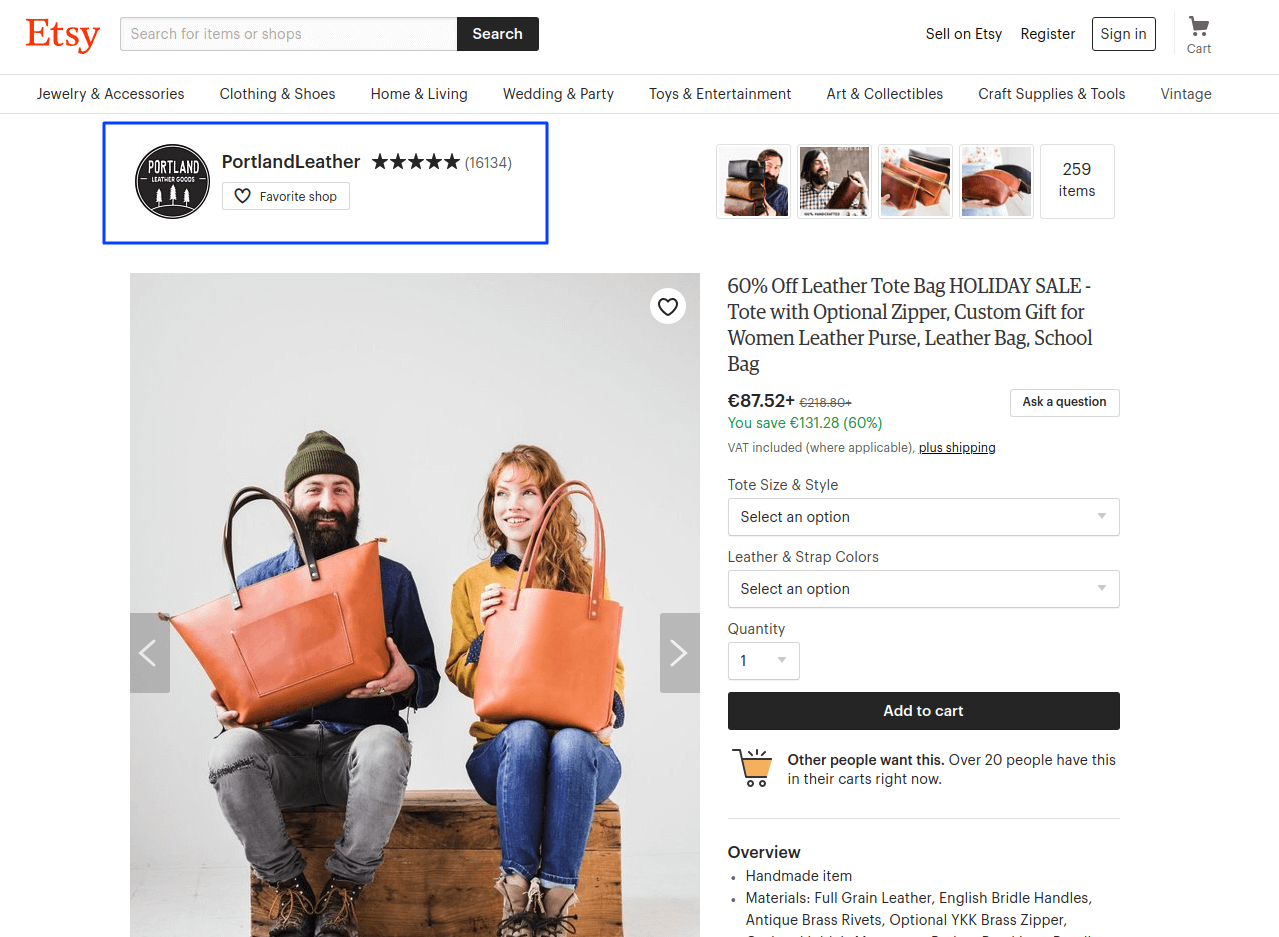

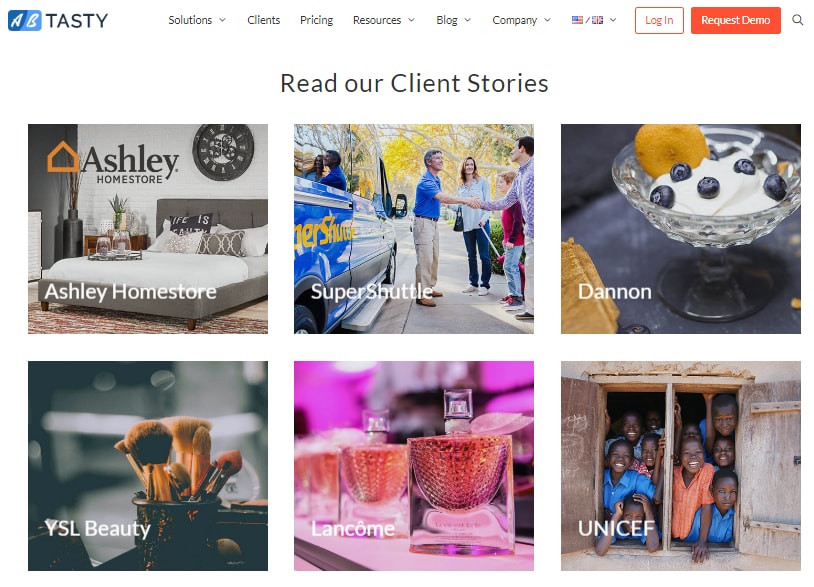

Create Solid Brand Equity

Moving on from your website to your brand.

Your brand equity, or value, hinges on your customers’ perception of, preference for, and experience with your brand. If you want them to think highly of your brand, focus on the quality and innovation of your products and services. In the end, both must exceed their expectations.

Once you become a household name, your products will start selling owing to the Halo Effect. This happens because people carry their positive associations with your brand over to the rest of your product line-up. A classic example came in 2003, when Nike used its brand recognition to enter the competitive golf equipment market.

The opposite is also true: One negative experience and your sales figures will plummet. Back in 2017, for example, Samsung’s brand equity declined after the Galaxy Note 7 was recalled. Surprisingly, though, it still maintained a stronger emotional connection with consumers at the master brand level than Apple did. Would you be as fortunate? Honestly, the odds are slim.

Bottom Line: Working your way up the popularity charts is an ongoing process.

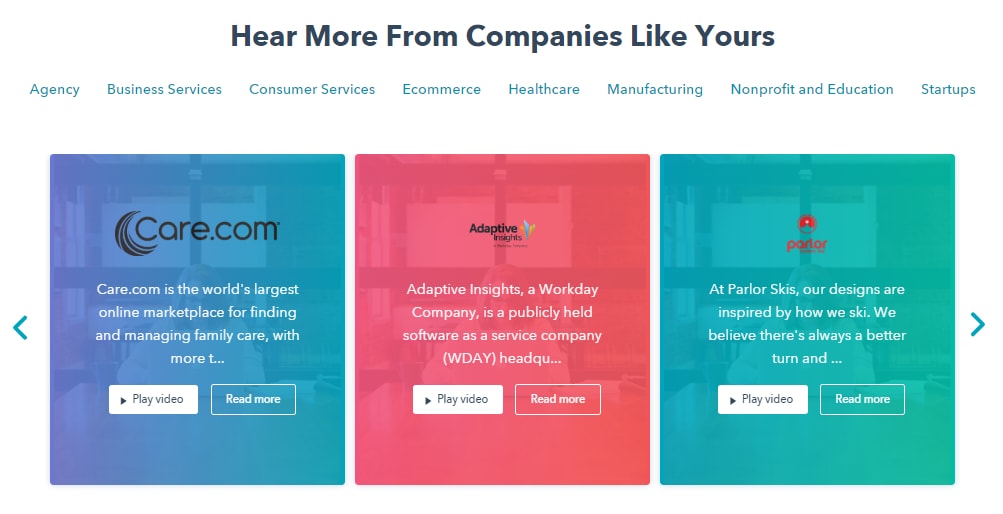

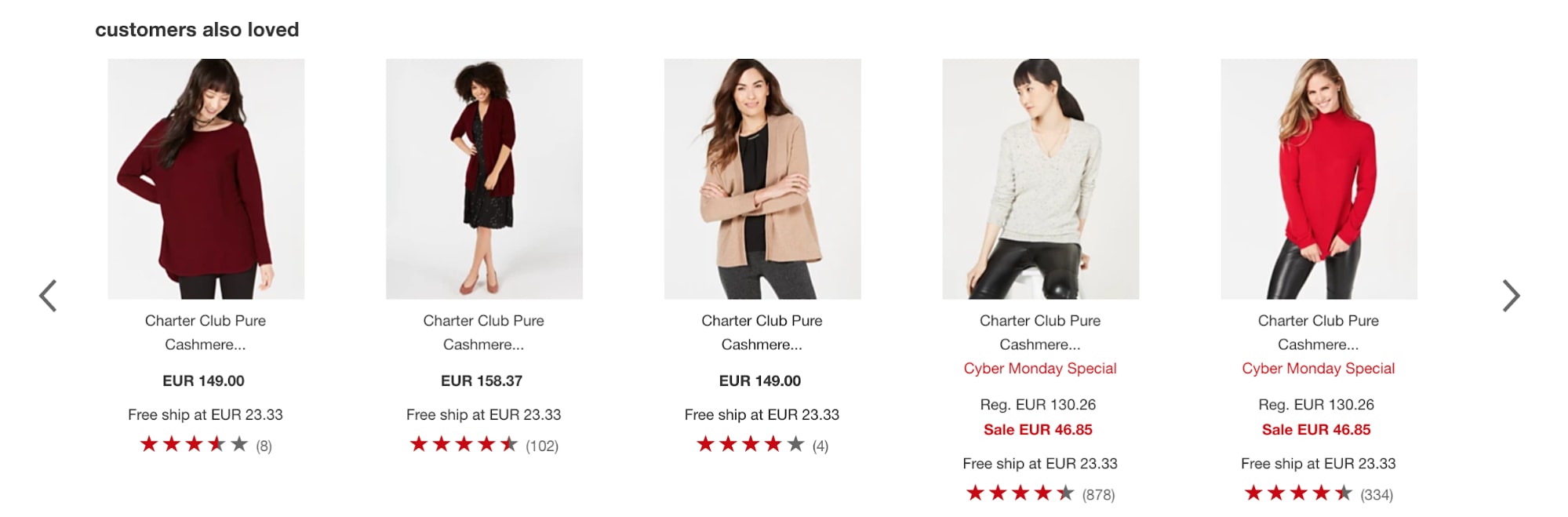

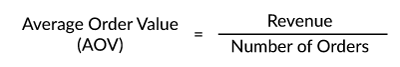

Cash In On The Popularity Of A Star Product

A lot of companies benefit from a Halo Effect that stems from the popularity of one of their products. Not only do their older products start selling like hot cakes, but their future products are also expected to be well received.

Here’s how the iPod became a halo for the rest of Apple’s products:

In 2004, Apple focused solely on bombarding the public with ‘TV advertising, print ads and billboards touting its iPod’. The iPod brand took the world by storm and ‘Apple computer and related businesses were up 27% in fiscal 2005 over the previous year. And, according to industry reports, Apple increased its share of the personal computer market from 3% to 4%.’

Bottom Line: Create a star product and let it work its magic on your catalog.

Ready With A Plan?

Obviously, you are!

Go on and use the Halo Effect to make a genuine connection with your target audience. Get them to like you right from the start, because you never really get a second chance to create an incredible first impression!

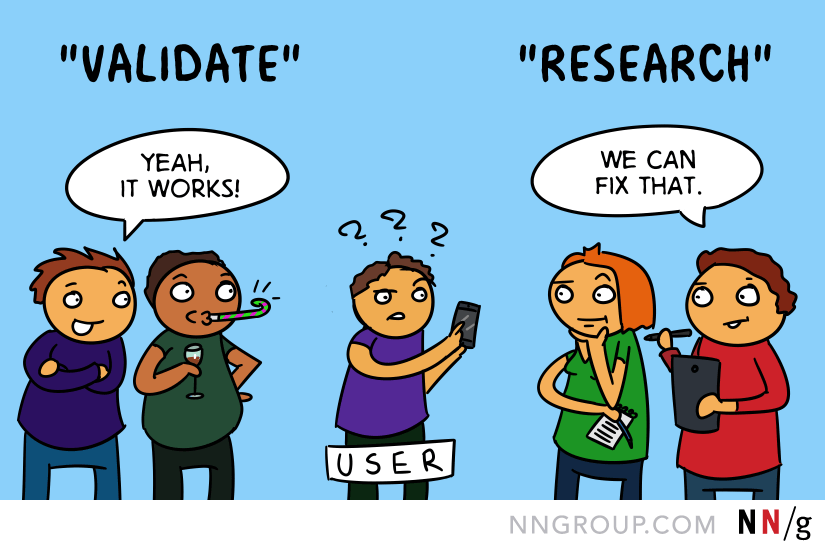

At the same time, remember to conduct usability tests to gather enough data. You’ll ultimately learn what’s working, identify new opportunities to optimize conversion, and find out where the leaks need repairing.
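Once the data is in, one common way to check whether a design change really moved conversions is to compare two versions of a page side by side. The sketch below assumes a simple A/B split and uses a two-proportion z-test, a standard statistical check rather than anything specific to the studies cited here; the visitor and conversion counts are hypothetical, and `two_proportion_z` is an illustrative name.

```python
from math import erf, sqrt


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# Hypothetical numbers: old design (A) vs. redesigned page (B).
z, p_value = two_proportion_z(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be noise. It says nothing about why the new version performs better, which is where usability testing comes back in.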