Can one single factor such as page speed make a big difference when it comes to conversions?

More than you’d have expected.

In fact, according to research led by Akamai, a two-second delay in page load time can increase bounce rate by more than 100%.

That’s right, your bounce rate can actually double, meaning you could be losing an enormous number of visitors right from the start.

As if that weren’t enough, research from the same source found that more than 40% of visitors who had a bad experience with a website’s performance would tell a friend or family member.

In short, slow loading times don’t just affect your incoming visitors; they can also deter future visitors from shopping on your website.
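To put the Akamai figure in concrete terms, here is a minimal sketch of the arithmetic. Every number in it (visits, baseline bounce rate, conversion rate, average order value) is a made-up assumption for illustration, not data from the research cited above, and the model deliberately ignores everything except bounce rate.

```python
def monthly_revenue(visitors, bounce_rate, conversion_rate, average_order_value):
    """Revenue from visitors who do not bounce (all inputs are hypothetical)."""
    engaged = visitors * (1 - bounce_rate)
    return engaged * conversion_rate * average_order_value


def revenue_after_bounce_increase(visitors, bounce_rate, relative_increase,
                                  conversion_rate, average_order_value):
    """Apply a relative bounce-rate increase (1.0 means +100%), capped at 100%."""
    new_bounce_rate = min(1.0, bounce_rate * (1 + relative_increase))
    return monthly_revenue(visitors, new_bounce_rate,
                           conversion_rate, average_order_value)


# Hypothetical shop: 50,000 monthly visits, 30% bounce rate,
# 2% conversion among visitors who stay, $60 average order value.
baseline = monthly_revenue(50_000, 0.30, 0.02, 60.0)
slowed = revenue_after_bounce_increase(50_000, 0.30, 1.0, 0.02, 60.0)
print(f"baseline: {baseline:,.0f}  after +100% bounce rate: {slowed:,.0f}")
```

In this invented scenario, doubling the bounce rate from 30% to 60% cuts monthly revenue from 42,000 to 24,000. Plug in your own analytics figures to get an estimate that reflects your store.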

The main problem is that most marketers struggle to grasp the importance of shaving a few milliseconds off page load time: it’s just not tangible enough.

As a result, online retailers often leave page loading time out of their annual priorities and focus on more “tangible” enhancements such as design, SEO, social media, and paid ads.

And that’s a mistake. Research and data from industry giants and experts consistently show that page loading time directly affects conversions, page views, CPC, SEO, and revenue.

To help you sort it out and quantify the impact of page loading time on conversions, we’ve prepared some additional insights that should help you better understand what’s at stake when improving page speed.

Keep reading, it’s worth it.

Page load time impacts user experience

Did we mention that online shoppers hate long loading times?

If not, here’s an interesting fact: according to stress-testing specialist Dotcom-Monitor, “75% of all users will typically bounce as page load time passes the 3-second mark.”

Aside from bounce rates, page speed also matters when it comes to satisfying your customers: Aberdeen points out that an extra second in loading time results in a staggering 16% decrease in customer satisfaction. That is just ridiculous.

Knowing this, adding just a few extra seconds to your loading time seriously hurts your ability to engage visitors and deliver good customer service, especially since around 45% of all online shoppers expect a website to load within 2 seconds.

Page speed doesn’t just affect how customers interact with your website: it also has a direct impact on their likelihood to purchase.

How website speed directly affects your sales

If your site is plagued with speed issues, there’s no doubt you’re losing a large share of potential customers, and with them many sales.

Adding to that point, let’s not forget what we said about dissatisfied customers: 40% of them won’t hesitate to talk poorly about their online shopping experiences.

HubSpot adds that 80% of those same dissatisfied customers are less likely to buy again from the same website: that is a huge loss in potential returning visitors.

And ecommerce players need returning visitors: they are a key element of Customer Lifetime Value and represent up to 48% of all transactions, according to Business Insider.

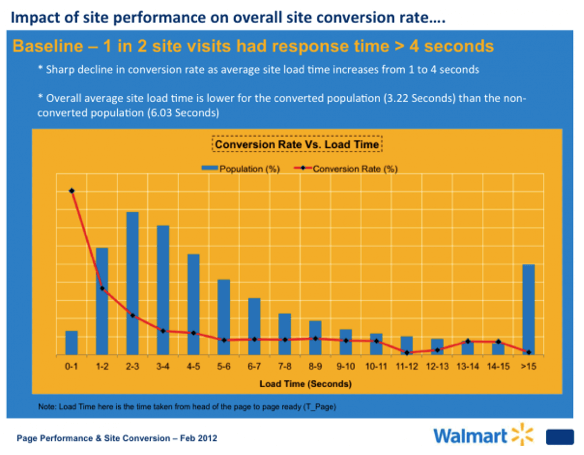

To quantify how much page speed matters for conversions, Walmart produced an impressive analysis of its online sales and of how customer behavior varied with page loading times.

Here’s the main takeaway: they noticed a “sharp decline in conversion rate as average site load time increases from 1 to 4 seconds.”

While their conversion rates remain stable for loading times between 5 and 8 seconds, notice how big a difference it makes for users who experienced loading times under 3.5 seconds.

Of course, not all websites can match Walmart’s volume of sales and that’s totally fine.

There are still big financial benefits to draw from page speed improvements, regardless of your revenue.

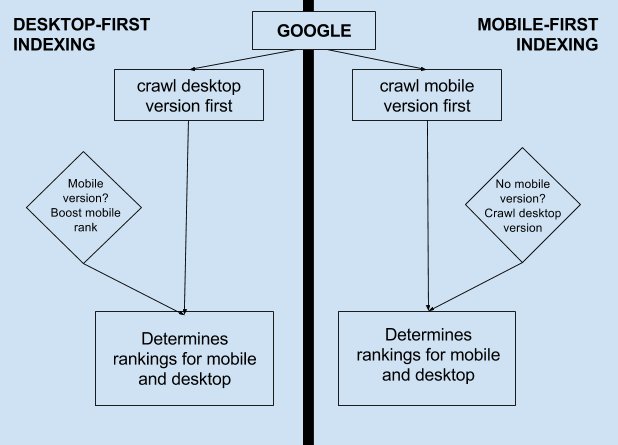

Your mobile website speed influences your online visibility (SEO)

Breaking news in the small world of SEO: mobile page speed will effectively become an official ranking factor in search results, starting July 2018 (source: Search Engine Land).

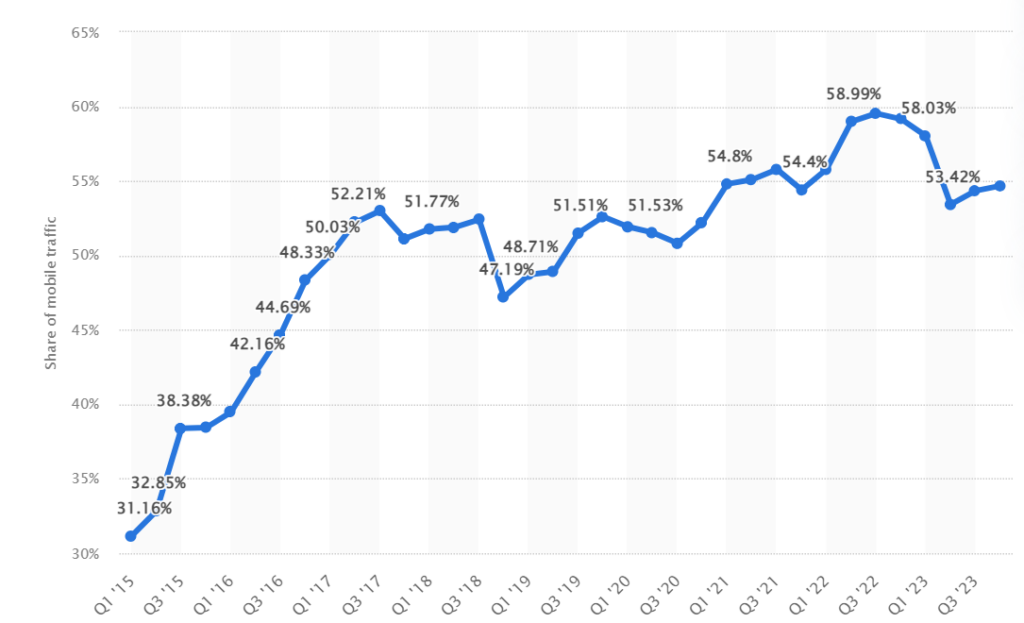

Because more than 50% of all worldwide traffic was generated through mobile phones in 2017, it’s easy to grasp how important mobile page loading times are becoming for all online businesses.

Well aware of the meteoric rise of mobile traffic, Google rolled out its Mobile-First Index in 2017, meaning that the search engine now ranks websites primarily based on mobile performance factors rather than desktop performance.

Simply put, this means that your mobile page speed now directly affects your SEO game: it could potentially hurt your rankings in the coming months.

Additional resources: Mobile Optimization Guide

What’s an ideal website load time?

When it comes to an ideal loading time, Google says that 53% of mobile visitors leave a site that takes longer than 3 seconds to load, so loading in under 3 seconds is what you should aim for.

Behind the curtain, the ugly truth is rather alarming: Google points out that the average mobile landing page takes around 20 seconds to fully load, so millions of websites are miles away from the ideal loading time.
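For a very rough check of a page against the 3-second mark, a few lines of Python are enough. This is only a sketch under stated assumptions: the helper names `measure_fetch_seconds` and `meets_target` are made up for this example, and the script times a single download of the HTML document using Python’s standard library. It does not load images or scripts, or render the page, so the result understates what a real visitor experiences.

```python
import time
import urllib.request

THREE_SECOND_TARGET = 3.0  # seconds, the threshold quoted above


def measure_fetch_seconds(url, fetch=None, timeout=30):
    """Time one download of the HTML document at `url`.

    `fetch` is an optional replacement downloader (useful for testing);
    by default the page is fetched with urllib from the standard library.
    """
    if fetch is None:
        def fetch(target):
            with urllib.request.urlopen(target, timeout=timeout) as response:
                return response.read()
    start = time.perf_counter()
    fetch(url)
    return time.perf_counter() - start


def meets_target(seconds, target=THREE_SECOND_TARGET):
    """Return True when a measured time is at or under the target."""
    return seconds <= target
```

Calling `meets_target(measure_fetch_seconds("https://www.example.com/"))` with your own URL in place of the placeholder gives a quick yes or no. Run it several times and from different networks, since a single measurement can vary a lot.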

3 free tools to measure your website page speed

If you’ve read this far, you’ll probably want a quick overview of your website’s speed, and that’s totally fine.

To help you identify the stumbling blocks that slow your website down, here are 3 free tools that will help you improve your page speed:

These 3 tools are free and user-friendly: just put in your URL and you’re good for a quick analysis.

If you’re looking for further page speed insights, take a look at Google’s PageSpeed Rules so that you can take action now.

Did you like this article? Feel free to share and check out our other in-depth articles on how to optimize your website and our complete guide to conversion rate optimization.

