Breaking news: according to CopyBlogger, 80% of all readers never make it past the headline.

If you’re reading this, you’re part of our happy 20%, and you won’t be disappointed.

The truth is, this is a serious issue for every publisher.

Similarly, the Washington Post reported that 6 in 10 Americans acknowledge that they don’t read past the headline, whatever the type of news.

So, should we just stop writing?

Obviously not.

In 2018, written content is still one of the most consumed media (alongside video) and remains a powerful tool to:

- Build brand awareness

- Generate B2B leads

- Report news

- Drive sales

- Grow your audience

- Rank on search engines

Since most readers won’t spend more than 15 seconds on an average article (source: Buffer), crafting powerful, catchy headlines has never been more important: it keeps your audience around and ensures you don’t produce content in vain.

But how do you make sure that your headlines really work?

It’s simple: you need to run headline A/B tests.

What is headline testing?

Definition: headline testing consists of creating several title variations for the same article (or online media piece) to find out which one performs best.

Depending on your objectives, headline testing can be used to track metrics such as:

- Number of shares

- Average reading time

- Click-through rate (CTR)

- Bounce rate

- Conversions
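To make these metrics concrete, here is a minimal sketch that turns raw counts for one headline variant into the ratios you would compare between variants. The field names, the definition of a bounce (a session with a single page view) and the numbers are all assumptions for illustration; your analytics tool may define them differently.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one headline variant (field names are illustrative)."""
    name: str
    impressions: int           # times the headline was displayed
    clicks: int                # clicks on the headline
    sessions: int              # visits to the article that came from the headline
    bounces: int               # sessions with a single page view
    total_read_seconds: float  # reading time summed over all sessions
    conversions: int           # e.g. newsletter sign-ups
    shares: int                # social shares of the article


def ratio(num: float, den: float) -> float:
    """Safe division: return 0.0 when there is no data yet."""
    return num / den if den else 0.0


def summarize(v: VariantStats) -> dict[str, float]:
    """Turn raw counts into the metrics usually compared between variants."""
    return {
        "shares": v.shares,
        "avg_reading_time_s": ratio(v.total_read_seconds, v.sessions),
        "ctr": ratio(v.clicks, v.impressions),
        "bounce_rate": ratio(v.bounces, v.sessions),
        "conversion_rate": ratio(v.conversions, v.sessions),
    }


# Made-up numbers for a control headline.
control = VariantStats("control", impressions=10_000, clicks=420, sessions=420,
                       bounces=252, total_read_seconds=15_960.0,
                       conversions=21, shares=12)
print(summarize(control))
```

Computing every variant with the same function keeps the comparison fair: each headline is judged on identical definitions.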

How to conduct headline testing

Headline testing requires you to define one title as the “control version” so you can compare it with one or more variants.

When choosing the number of variants, bear in mind that the more variants you test, the larger the sample you need to obtain statistically significant results.
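To see why extra variants cost traffic, here is a minimal sketch assuming your success metric is a rate (such as CTR), that each variant is compared with the control using a two-sided two-proportion z-test, and that the significance level is split across comparisons with a Bonferroni correction. That correction is one conservative choice among several; your A/B testing tool may use a different method. The 4% baseline and 5% target rates are purely illustrative.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(p_control: float, p_variant: float,
                        alpha: float = 0.05, power: float = 0.8,
                        comparisons: int = 1) -> int:
    """Visitors needed in EACH arm to detect p_control -> p_variant
    with a two-sided two-proportion z-test.

    `comparisons` applies a Bonferroni correction: each variant is compared
    with the control, so alpha is split across those comparisons.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - (alpha / comparisons) / 2)
    z_beta = z.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_variant) ** 2
    return ceil(n)


def total_sample(p_control: float, p_variant: float, n_variants: int) -> int:
    """Total traffic for one control plus `n_variants` challengers."""
    per_arm = sample_size_per_arm(p_control, p_variant, comparisons=n_variants)
    return per_arm * (n_variants + 1)


for k in (1, 2, 4):
    print(f"{k} variant(s): {total_sample(0.04, 0.05, k)} visitors in total")
```

Two effects add up here: every extra variant needs its own share of traffic, and the stricter per-comparison threshold raises the sample each arm needs.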

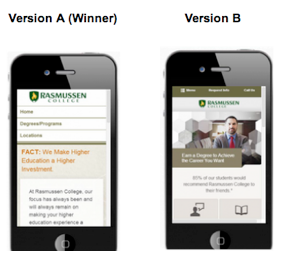

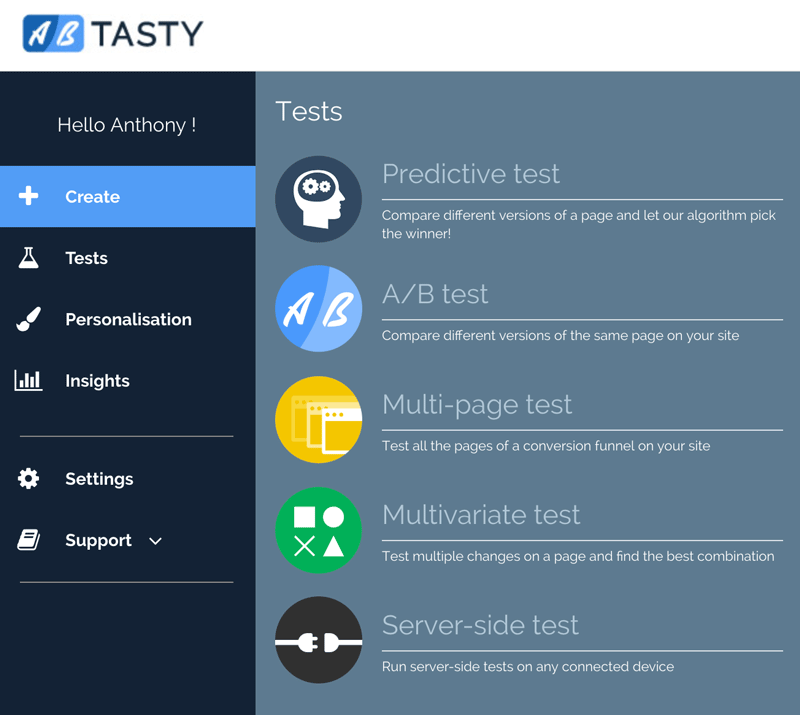

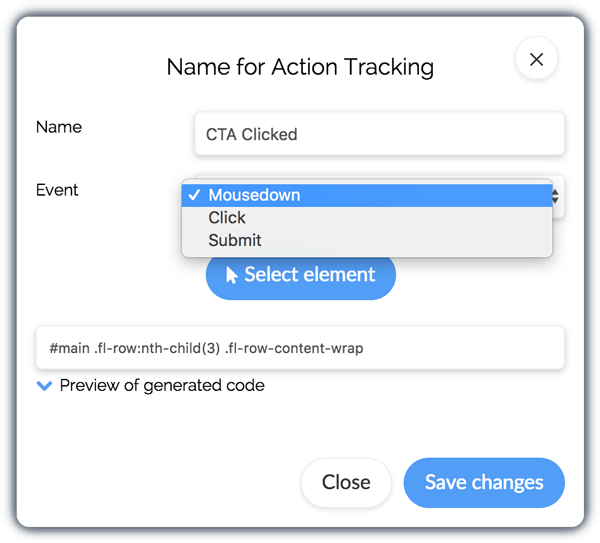

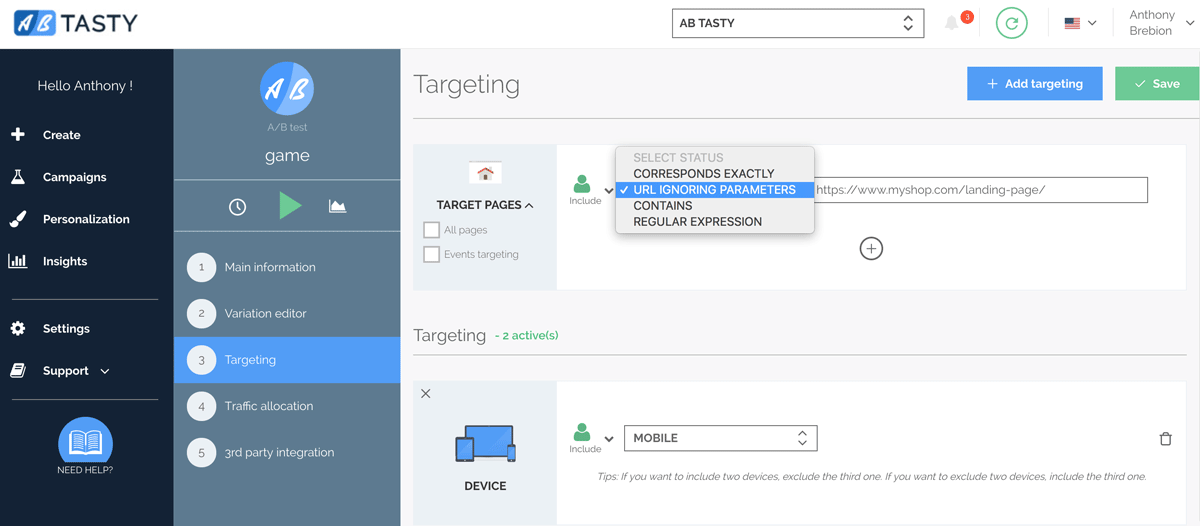

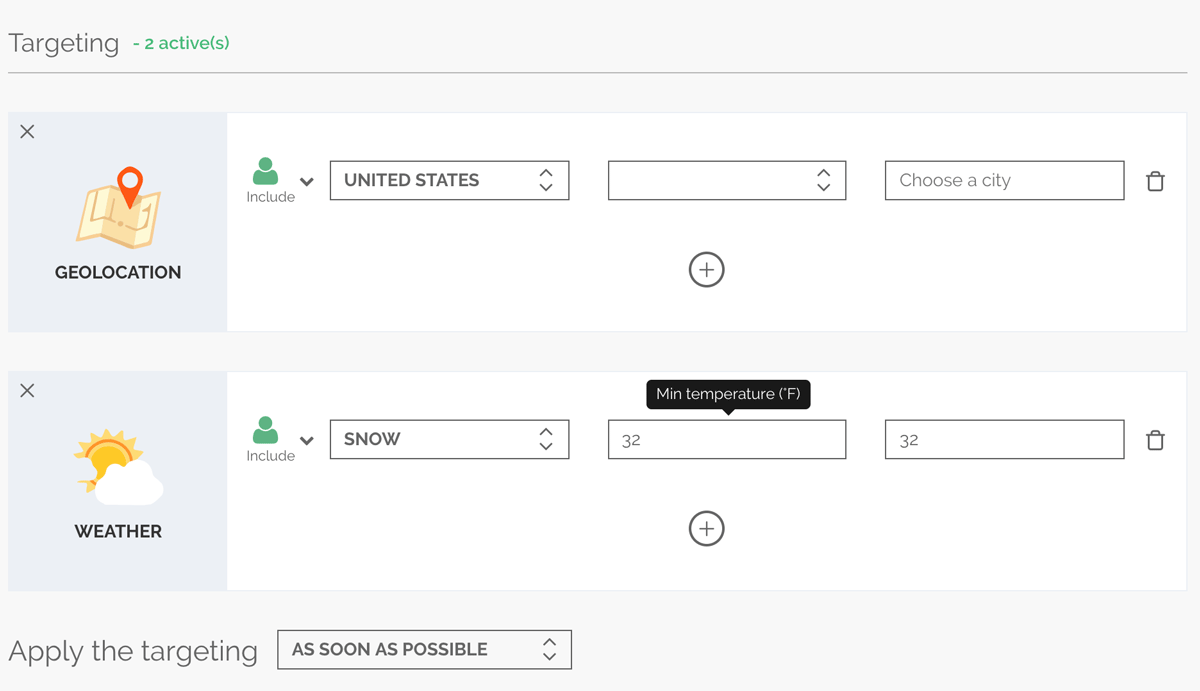

Once you’ve chosen your variants, use an A/B testing tool to run your tests and see which headline outperforms the others.

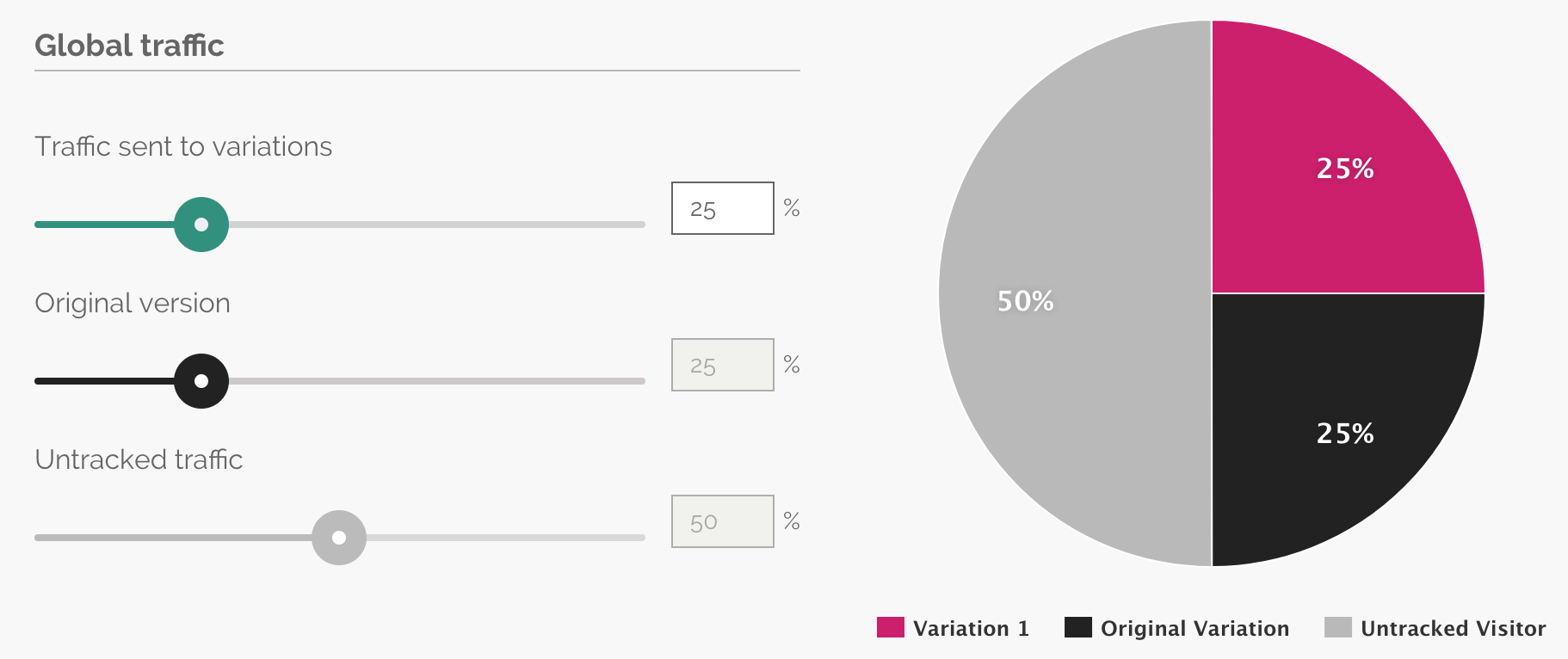

Typically, an A/B testing tool will send a percentage of your page’s traffic to each variant until it identifies a winner.

From there, the tool will allocate 100% of the traffic to the “winner” in order to maximize your page’s performance.
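One common way to split traffic is to hash each visitor ID so that the same visitor always sees the same headline. The sketch below is a hypothetical illustration of that idea: the function names, the SHA-256 choice, the IDs and the weights are assumptions, not how any particular tool works. Reallocating 100% of the traffic to the winner then becomes a simple change of weights.

```python
import hashlib


def bucket(visitor_id: str, experiment_id: str) -> float:
    """Map a visitor to a stable number in [0, 1) for a given experiment."""
    digest = hashlib.sha256(f"{experiment_id}:{visitor_id}".encode()).digest()
    # Keep the top 53 bits so the result is exactly representable and < 1.0.
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def assign(visitor_id: str, experiment_id: str, weights: dict[str, float]) -> str:
    """Choose a headline variant according to traffic weights that sum to 1."""
    if not weights:
        raise ValueError("weights must not be empty")
    point = bucket(visitor_id, experiment_id)
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return variant
    return list(weights)[-1]  # guard against floating-point rounding at the top


# While the test runs: a 50/50 split between the control and one challenger.
test_weights = {"control": 0.5, "variant_b": 0.5}
print(assign("visitor-123", "headline-test-1", test_weights))

# Once a winner is declared: 100% of the traffic goes to it.
final_weights = {"variant_b": 1.0}
print(assign("visitor-123", "headline-test-1", final_weights))  # always "variant_b"
```

Hashing instead of picking at random on each page load keeps returning visitors in the same group, which avoids mixing exposures within one person’s data.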

Sound good?

Let’s see how to come up with brilliant headline ideas that you can A/B test later on.

How to brainstorm headline ideas

Headlines come in many forms depending on whether you’re writing an article, a landing page or even a product description.

Given this variety, we’ll focus on general guidelines to help you craft headlines that meet your business objectives.

Include real data and numbers

Numbers act like candy for the brain: we love seeing them because they give us concrete facts and figures to work with.

In 2013, Conductor published a study on the impact of adding numbers to headlines: it found that readers prefer headlines that include numbers.

Craft a strong value proposition

Creating a value proposition for your readers means including a real benefit in your headline.

Working on your value proposition is the cornerstone of every headline creation process: it helps you address your core audience while promising something in exchange for their attention.

Whatever content you’re working on, your value proposition is what sells it: it determines whether or not potential readers will click.

Here are some formulations commonly used to craft a strong value proposition:

- Recipes for success

- Expert opinions

- Special offers and discounts

- Tips and advice

- Guides, ebooks

- Facts, studies

- Ideas, strategies

Trigger your readers’ curiosity

Capturing your readers’ attention is no easy task given the level of online competition most publishers face.

To grab your visitors’ attention from the start, try to avoid headlines that can easily be answered with “Yes” or “No”.

“Yes or No” headlines are risky because they push visitors to form an opinion about your question or statement before clicking, which leads a significant share of them to decide not to click.

Here’s a list of formulations used to trigger curiosity:

- “How to …”

- “The 7 facts you didn’t know about …”

- “How [insert_name] managed to [action] in [days]”

- “The Complete Guide to …”

- “What every [target] should know about [subject]”
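If you brainstorm at scale, the templates above can be filled programmatically and screened for yes/no openers. This is a minimal sketch under our own assumptions: the placeholder names, the sample values (including the hypothetical brand “Acme”) and the list of yes/no opening words are illustrative, and the filter is a rough heuristic rather than a rule.

```python
import re
from itertools import product
from string import Formatter

# Curiosity-style templates adapted from the list above; placeholders are named fields.
TEMPLATES = [
    "How to {goal}",
    "The 7 facts you didn't know about {subject}",
    "How {name} managed to {action} in {days}",
    "The Complete Guide to {subject}",
    "What every {target} should know about {subject}",
]

# Rough heuristic: headlines opening with these words usually invite a yes/no answer.
YES_NO_OPENER = re.compile(
    r"^(is|are|am|do|does|did|can|could|should|will|would|has|have)\b", re.IGNORECASE
)


def fields_in(template: str) -> list[str]:
    """Names of the {placeholders} used in a template, in order."""
    return [field for _, field, _, _ in Formatter().parse(template) if field]


def brainstorm(values: dict[str, list[str]]) -> list[str]:
    """Fill each template with every combination of its placeholder values."""
    ideas = []
    for template in TEMPLATES:
        fields = fields_in(template)
        for combo in product(*(values[f] for f in fields)):
            ideas.append(template.format(**dict(zip(fields, combo))))
    return ideas


def looks_yes_no(headline: str) -> bool:
    """True if the headline starts like a yes/no question."""
    return bool(YES_NO_OPENER.match(headline.strip()))


values = {
    "goal": ["A/B test your content headlines"],
    "subject": ["headline testing", "click-through rate"],
    "name": ["Acme"],  # hypothetical brand
    "action": ["double its newsletter sign-ups"],
    "days": ["30 days"],
    "target": ["content marketer"],
}
candidates = brainstorm(values) + ["Is your headline hurting your traffic?"]
shortlist = [h for h in candidates if not looks_yes_no(h)]
for headline in shortlist:
    print(headline)
```

The output is only raw material: each shortlisted idea still needs a human edit and, ultimately, an A/B test.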

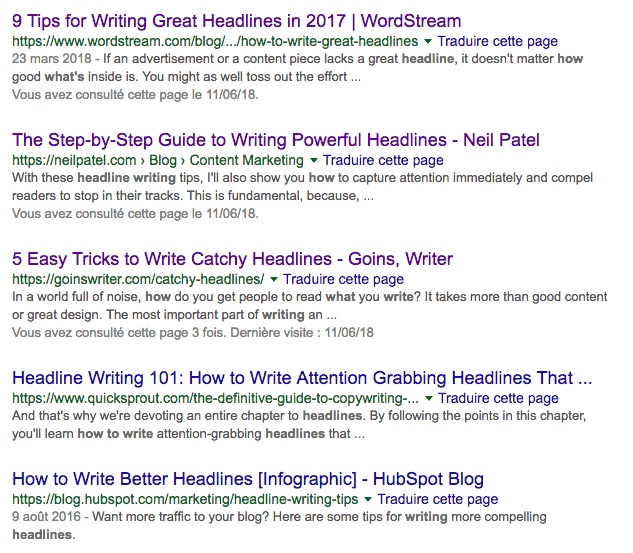

Watch your competition

There’s no secret to marketing success: practice makes perfect.

Because most businesses have dozens of competitors, you should pay attention to your competitors’ headline formulations.

From there, try to identify general trends and success formulas that you could apply to your own content.

Ideas for effective headlines from the competition can be found in:

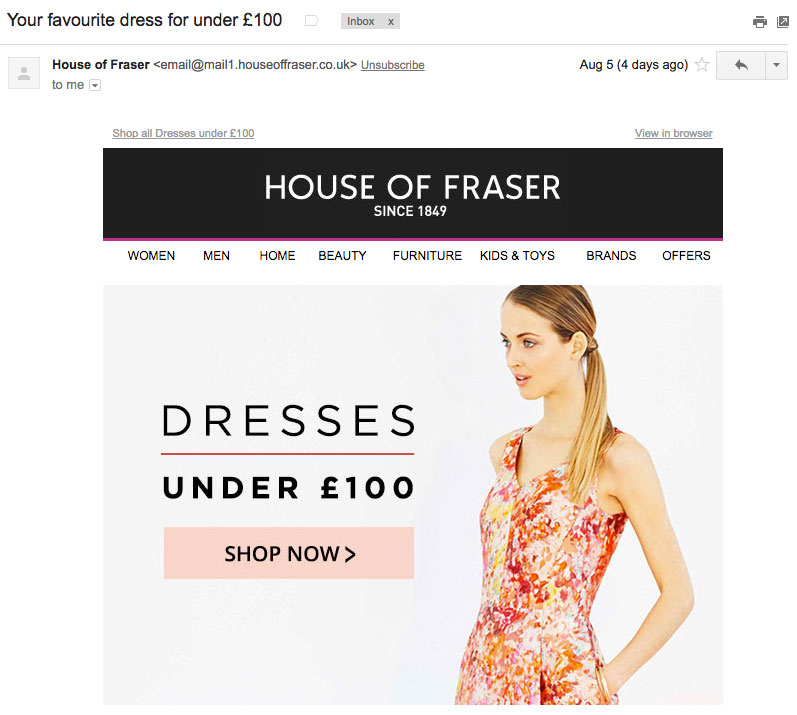

- Newsletters

- Website pages and landing pages

- Product descriptions

- Ebooks

- SERPs (Search Engine Result Pages)

Keep them simple and clear

Online visitors and shoppers are overexposed to marketing messages all day long.

With that in mind, it’s smart to keep your headlines short, simple and clear so they deliver straightforward information to your potential readers.

Because marketers are always searching for new magic formulas, they sometimes come up with complex, tricky formulations that you should avoid.

Use a headline analyzer

Headline analyzers are online tools that score your headlines against a set of parameters.

Typically, these tools grade your headlines out of 100 to help you craft catchier, better headlines.

They often measure the length and analyze your headline’s structure to determine optimal word order, keyword use, and formulation.

Here are 2 free tools you can use to analyze your headlines:

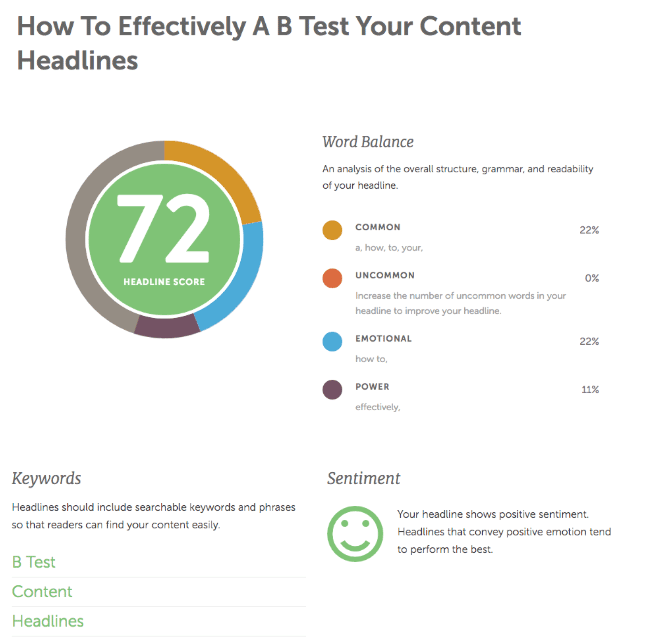

We’ve analyzed our own headline to see what type of results we would get.

Key Takeaway: our headline “How to Effectively A/B Test your Content Headlines” scored a reassuring 72/100 because it contains a power word (“effectively”) and an emotional element that triggers curiosity (“How to…”).

The tool even identified our main keywords, which is a good starting point for search engine optimization.
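To give a feel for how such a score can be built, here is a toy scorer combining length, power words, numbers, curiosity openers and keywords. It is entirely hypothetical: the word lists, weights and thresholds are our own assumptions and do not reproduce CoSchedule or any other analyzer, so its numbers are not comparable with the 72/100 above.

```python
import re

# Hypothetical word lists and weights: NOT the rules of any real headline analyzer.
POWER_WORDS = {"effectively", "proven", "essential", "ultimate", "complete", "easy"}
CURIOSITY_OPENERS = ("how to ", "what every ", "why ")


def toy_headline_score(headline: str, keywords: tuple[str, ...] = ()) -> int:
    """Score a headline out of 100 using a few simple, made-up heuristics."""
    text = headline.strip()
    lowered = text.lower()
    words = re.findall(r"[A-Za-z0-9/']+", text)
    score = 0

    # Length: favour roughly 6-12 words (an arbitrary sweet spot).
    if 6 <= len(words) <= 12:
        score += 30
    elif 4 <= len(words) <= 15:
        score += 15

    # Power words: 10 points each, capped at 20.
    hits = sum(w.lower() in POWER_WORDS for w in words)
    score += min(20, 10 * hits)

    # Numbers and curiosity openers, echoing the guidelines in this article.
    if any(ch.isdigit() for ch in text):
        score += 20
    if lowered.startswith(CURIOSITY_OPENERS):
        score += 20

    # Keywords: reward including at least one target keyword.
    if any(k.lower() in lowered for k in keywords):
        score += 10

    return score  # maximum is 30 + 20 + 20 + 20 + 10 = 100


print(toy_headline_score("How to Effectively A/B Test your Content Headlines",
                         keywords=("A/B test", "headlines")))
```

A score like this is only a pre-filter for brainstorming; the A/B test itself remains the real judge of a headline.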

Run A/B tests and compare results

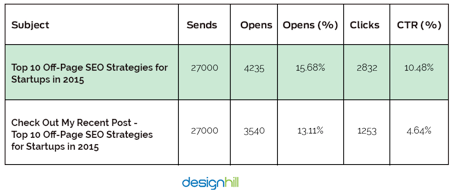

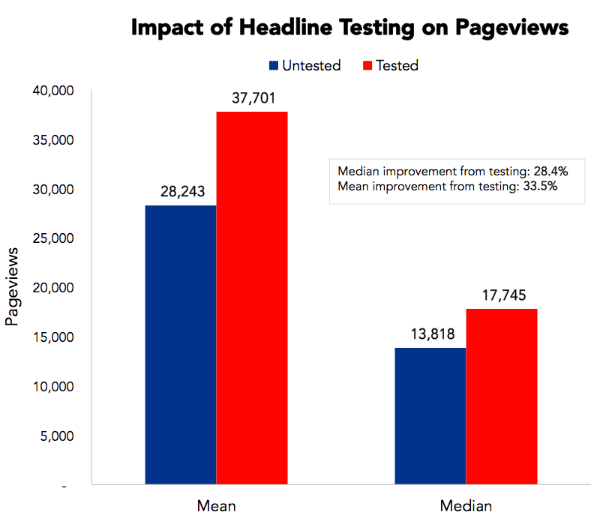

As we’ve seen, headline testing can significantly improve key metrics such as page views, CTR and conversions.

To illustrate, Priceonomics published an analysis showing a 33% increase in pageviews following headline testing: a major gain that could significantly change the way visitors behave on your website.
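When comparing results, two numbers matter: the relative lift (a 33% improvement means the variant’s rate is 1.33 times the control’s) and whether the difference is larger than chance would explain. Below is a minimal sketch assuming a rate metric such as CTR and a classic two-sided two-proportion z-test; the click counts are hypothetical, and many A/B testing tools use other methods (Bayesian or sequential tests, for example).

```python
from math import sqrt
from statistics import NormalDist


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """(variant - control) / control, e.g. 0.33 for a 33% improvement."""
    return (variant_rate - control_rate) / control_rate


def two_proportion_p_value(clicks_a: int, n_a: int, clicks_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: both headlines have the same click-through rate."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no clicks at all, or every impression clicked: nothing to compare
        return 1.0
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts, not from any real test: (clicks, impressions).
control = (400, 10_000)
variant = (480, 10_000)

lift = relative_lift(control[0] / control[1], variant[0] / variant[1])
p = two_proportion_p_value(*control, *variant)
print(f"lift = {lift:.1%}, p-value = {p:.4f}")
```

A large lift with a high p-value usually means the test needs more traffic before you declare a winner.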

Now that you’ve seen our best practices for headline creation, it’s high time you start testing your own headline variations to find the most effective ones.

In order to do so, here’s a little checklist you can follow:

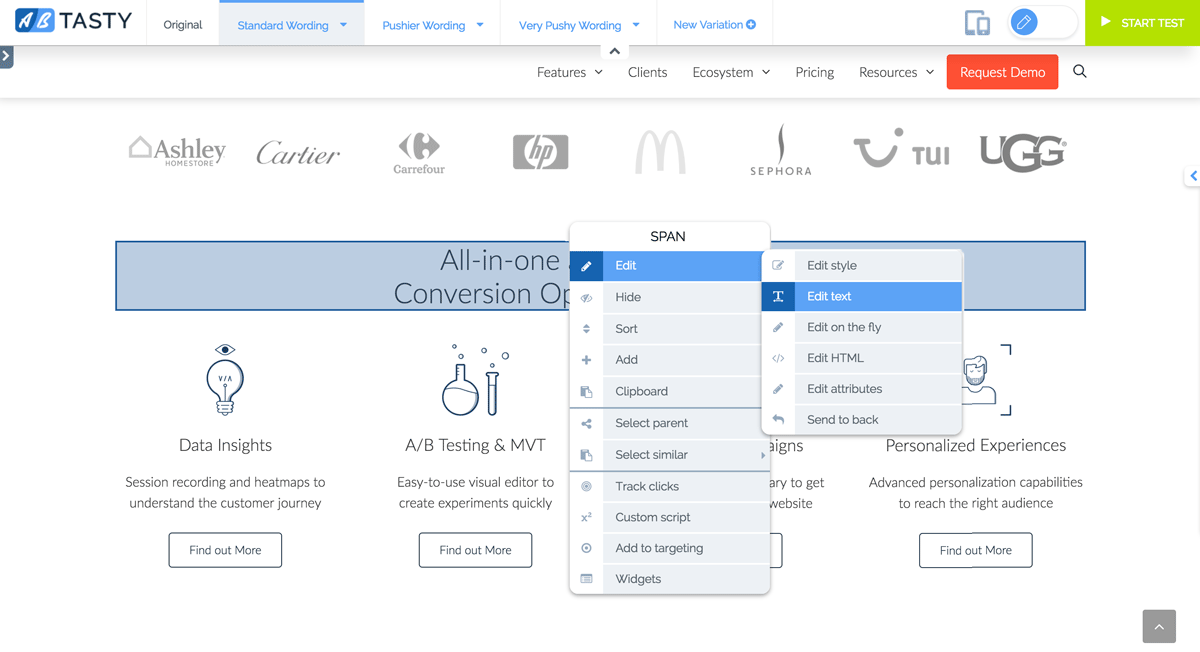

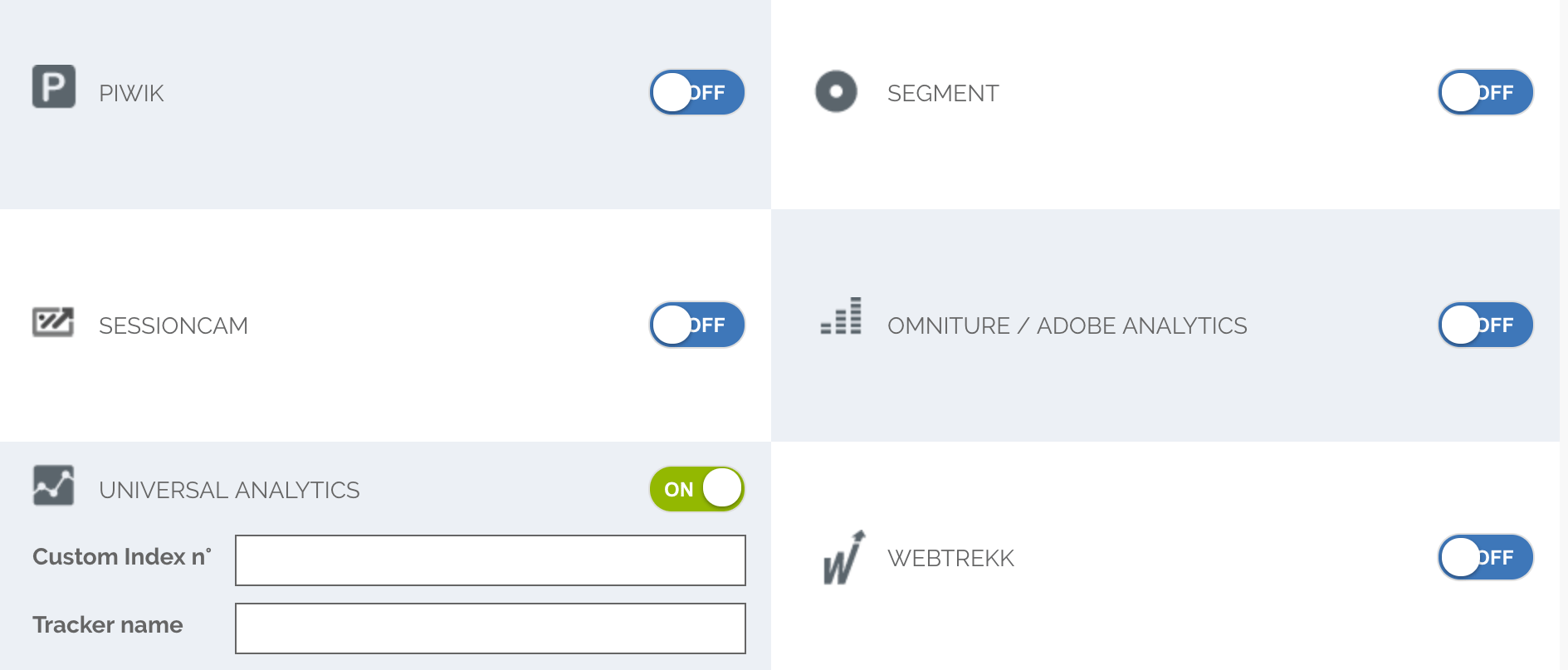

- Use our A/B Testing tool to set up your experimental environment

- Our WYSIWYG editor makes it easy to test headlines

- Start brainstorming headline ideas and formulate hypotheses

- Run some of your headline ideas through CoSchedule to estimate their chances of success

- Run your tests and collect results

- Analyze your test results and track important KPIs to monitor any change

Did you like this article? Feel free to share and check out our other in-depth articles on how to optimize your website, ecommerce and digital marketing.
