We invited Holly Ingram from our partner REO Digital, an agency dedicated to customer experience, to talk us through the practical ways you can use experimentation when doing a website redesign.

Launching an entire site redesign at once is a huge risk. If the redesign is executed poorly, you can throw away years of incremental gains in UX and site performance. Redesigns commonly fail to achieve their goals, and many fail even to match the performance of the old design. That’s why an incremental approach, where you can isolate changes and accurately measure their impact, is usually recommended. That being said, some scenarios warrant an entire redesign, in which case you need a robust, evidence-driven process to reduce this risk.

Step 1 – Generative research to inform your redesign

With the level of collaboration involved in a redesign, changes must be based on evidence over opinion. There’s usually a range of stakeholders who all have their own ideas about how the website should be improved. Despite their best intentions, this often leads to prioritizing what they feel is important, which doesn’t always align with customers’ goals. The first step in this process is to carry out research to see your site as your customers do and identify areas of struggle.

It’s important here to use a combination of quantitative research (to understand how your users behave) and qualitative research (to understand why). Start off broad, using quantitative research to identify the areas of the site that are performing the worst: look for high drop-off rates and poor conversion. Once you have your areas of focus, you can look at more granular metrics and methods to gather more context on the points of friction.

- Scroll maps: Are users missing key information as it’s placed below the fold?

- Click maps: Where are people clicking? Where are they not clicking?

- Traffic analysis: What traffic source(s) are driving users to that page? What is the split between new and returning?

- Usability testing: What do users that fit your target audience think of these pages? What helps them? What doesn’t help?

- Competitor analysis: How do your competitors present themselves? How do they tackle the same issues you face?
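As a rough illustration of the "start broad" step, the sketch below computes the drop-off rate between consecutive funnel steps and flags the largest one. The step names and visitor counts are hypothetical, and `drop_off_rates` is an illustrative helper rather than part of any analytics tool.

```python
from typing import List, Tuple


def drop_off_rates(funnel: List[Tuple[str, int]]) -> List[Tuple[str, str, float]]:
    """Return (from_step, to_step, drop_off) for each consecutive pair of steps."""
    rates = []
    for (step, visitors), (next_step, next_visitors) in zip(funnel, funnel[1:]):
        # Share of visitors at this step who did not reach the next one.
        drop_off = 1 - next_visitors / visitors if visitors else 0.0
        rates.append((step, next_step, drop_off))
    return rates


# Hypothetical visitor counts per funnel step, for illustration only.
funnel = [
    ("home", 10000),
    ("category", 6000),
    ("product", 3000),
    ("basket", 900),
    ("checkout", 600),
]

rates = drop_off_rates(funnel)
worst = max(rates, key=lambda r: r[2])

for step, next_step, rate in rates:
    print(f"{step} -> {next_step}: {rate:.0%} drop-off")
print("Largest drop-off:", worst[0], "->", worst[1])
```

The step with the largest drop-off is a candidate for the more granular methods listed above, not a conclusion in itself.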

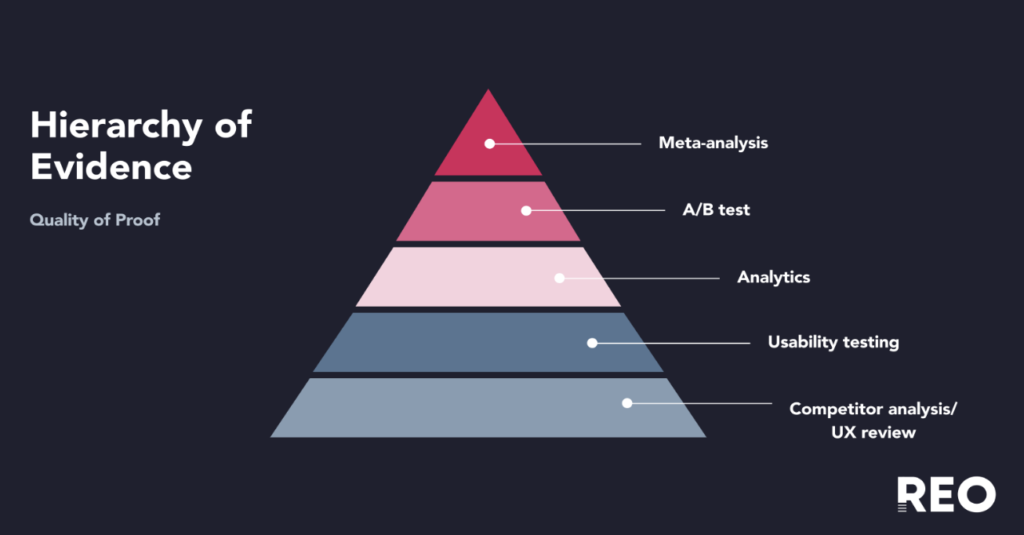

Each research method has its pros and cons. Keep in mind the hierarchy of evidence. The hierarchy is visually depicted as a pyramid, with the lowest-quality research methods (having the highest risk of bias) at the bottom and the highest-quality methods (with the lowest risk of bias) at the top. When reviewing your findings, place more importance on those that come from research methods at the top of the pyramid (e.g. previous A/B test findings) than on those that come from methods at the bottom (e.g. competitor analysis).
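One simple way to apply the hierarchy is to tag each finding with the method it came from and sort by evidence level. In the sketch below, only the positions of A/B tests (top) and competitor analysis (bottom) come from the text above. The levels assigned to the methods in between, and the findings themselves, are assumptions made for illustration.

```python
# Assumed evidence levels: a higher number means nearer the top of the pyramid.
# Only the top (A/B tests) and bottom (competitor analysis) positions are taken
# from the article; the middle levels are illustrative guesses.
EVIDENCE_LEVEL = {
    "ab_test": 4,
    "analytics": 3,
    "usability_testing": 2,
    "competitor_analysis": 1,
}

# Hypothetical findings, for illustration only.
findings = [
    {"finding": "Competitors show delivery costs on the product page", "method": "competitor_analysis"},
    {"finding": "A shorter checkout form won a previous test", "method": "ab_test"},
    {"finding": "Users struggled to find the size guide", "method": "usability_testing"},
    {"finding": "The basket page has the highest drop-off", "method": "analytics"},
]

# Strongest evidence first.
ranked = sorted(findings, key=lambda f: EVIDENCE_LEVEL[f["method"]], reverse=True)

for f in ranked:
    print(EVIDENCE_LEVEL[f["method"]], f["method"], "-", f["finding"])
```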

Step 2 – Prioritise areas that should be redesigned

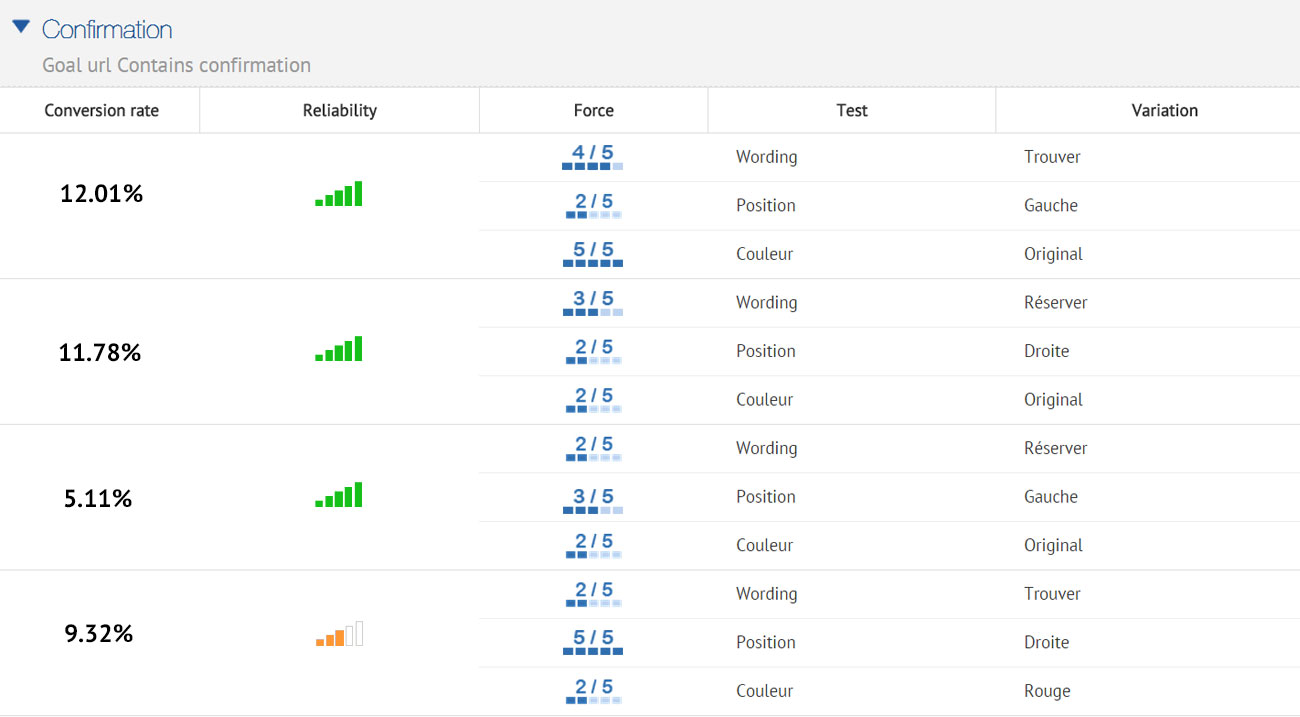

Once you have gathered your data and prioritised your findings based on quality of evidence, you should be able to see which areas to focus on first. You should also have an idea of how you might want to improve them. This is where the fun part comes in, and you can start brainstorming ideas. Collaboration is key here to ensure a range of potential solutions is considered. Try to get the perspective of designers, developers, and key stakeholders. Not only will you discover more ideas, but you will also save time as everyone will have context on the changes.

It’s not only about design. A common mistake people make when doing a redesign is purely focussing on design and making the page look ‘prettier’, and not changing the content. Through research, you should have identified content that performs well and content that could do with an update. Make sure you consider this when brainstorming.

Step 3 – Pilot your redesign through a prototype

Once you’ve come up with great ideas, it can be tempting to go ahead and launch them. Even if you are certain the new page will perform miles better than the original, you’d be surprised how often you’re wrong. Before you invest a lot of time and money in building your new page, it’s a good idea to get some outside opinions from your target audience. The quickest way to do this is to build a prototype and get users to give feedback on it through user testing. See what their attention is drawn to, and whether there’s anything on the page they don’t like or think is missing. It’s much quicker to make these changes before launching than after.

Step 4 – A/B test your redesign to know with statistical confidence whether your redesign performs better

Now that you have done all this work conducting research, defining problem statements, coming up with hypotheses, ideating solutions and getting feedback, you want to see if your solution actually works better!

However, do not make the mistake of jumping straight into launching on your website. Yes, it will be quicker, but you will never be able to quantify the difference all of that work has made to your key metrics. You may see conversion rate increase, but how do you know that is due to the redesign and nothing else (e.g. a marketing campaign or special offer deployed around the same time)? Or worse, you may see conversion rate decrease and automatically assume it must be down to the redesign when in fact it’s not.

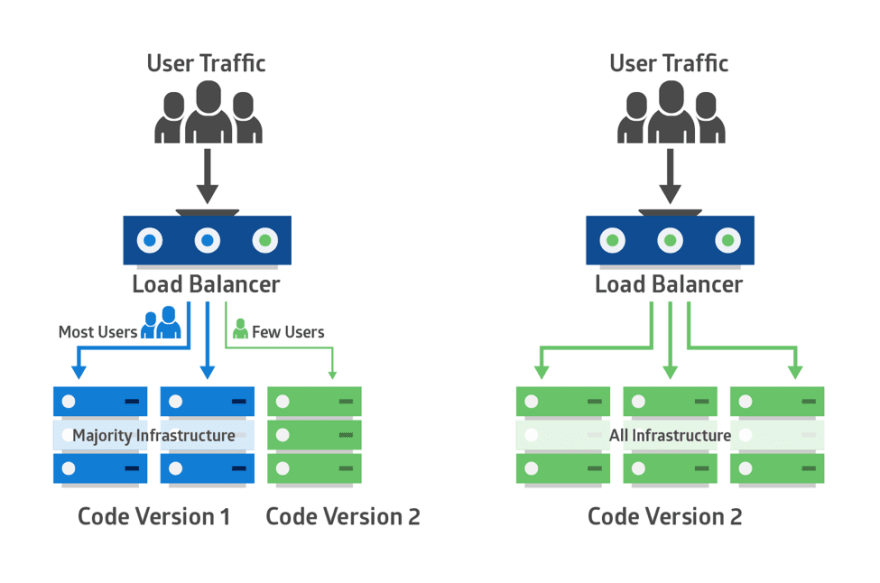

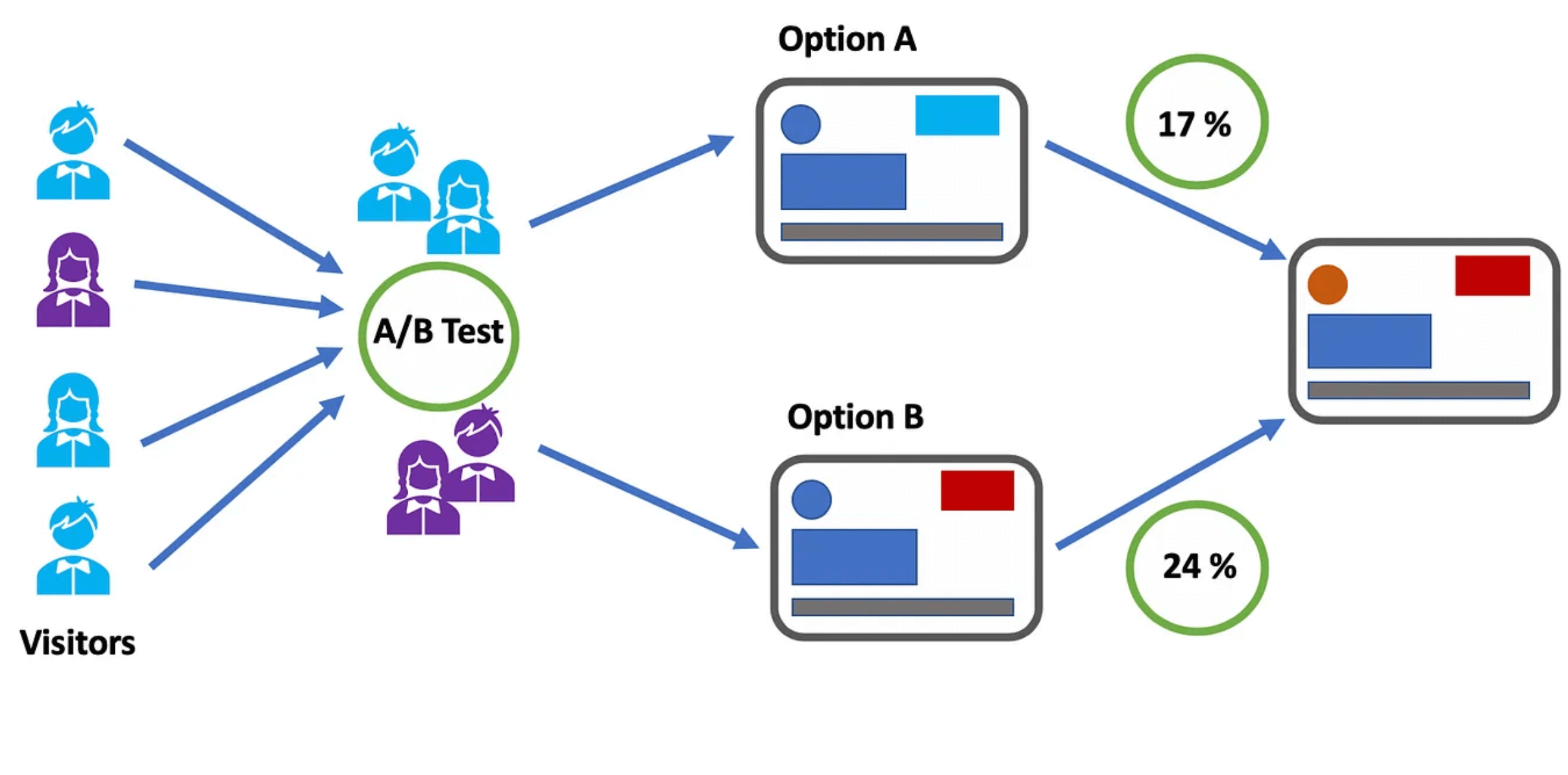

With an A/B test you can rule out outside noise. For simplicity, imagine a scenario where you have launched your redesign and, in reality, it made no difference, but a successful marketing campaign around the same time produced an increase in conversion rate. If you had launched your redesign as an A/B test, you would see no difference between the control and the variant, as both would have been equally affected by the marketing campaign.

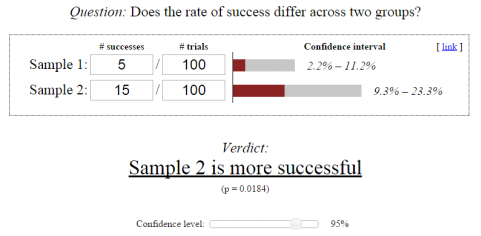

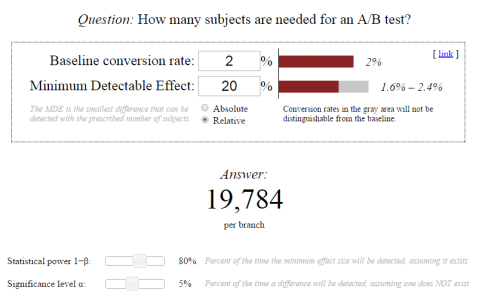
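The marketing campaign scenario described above can be worked through with a few lines of arithmetic. All of the rates below are hypothetical, and the model is deliberately simplified: it assumes the campaign adds the same absolute lift to every visitor and ignores random variation.

```python
BASELINE_RATE = 0.020   # conversion rate before launch (hypothetical)
CAMPAIGN_LIFT = 0.005   # absolute lift from the marketing campaign (hypothetical)
REDESIGN_LIFT = 0.000   # in this scenario the redesign truly changes nothing

# Scenario 1: launch to everyone and compare before vs. after.
before = BASELINE_RATE
after = BASELINE_RATE + CAMPAIGN_LIFT + REDESIGN_LIFT
before_after_diff = after - before

# Scenario 2: run an A/B test while the campaign is live.
# Both groups receive the campaign lift; only the variant gets the redesign.
control = BASELINE_RATE + CAMPAIGN_LIFT
variant = BASELINE_RATE + CAMPAIGN_LIFT + REDESIGN_LIFT
ab_diff = variant - control

print(f"Before/after difference: {before_after_diff:+.3%}")
print(f"A/B difference:          {ab_diff:+.3%}")
```

The before/after comparison credits the redesign with the campaign's lift, while the A/B comparison cancels it out because both groups were exposed to the campaign.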

This is why it is crucial you A/B test your redesign. Not only will you be able to quantify the difference your redesign has made, you will also be able to tell whether that difference is statistically significant. In other words, you will know how unlikely it is that a difference this large would appear through random chance alone if the redesign had no real effect. This helps minimize the risk that redesigns often bring.
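To make the idea of statistical significance concrete, here is a minimal sketch of a two-sided two-proportion z-test using only the Python standard library. The visitor and conversion counts are hypothetical, and your testing tool may well use a different statistical method, so treat this as an illustration of the concept rather than a description of how any particular tool calculates its results.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (absolute difference, z statistic, p-value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_b - rate_a, z, p_value


# Hypothetical results: control (A) vs. redesign (B).
diff, z, p = two_proportion_z_test(conv_a=500, n_a=10000, conv_b=580, n_b=10000)
print(f"Difference: {diff:+.2%}, z = {z:.2f}, p-value = {p:.4f}")
```

A small p-value means a difference this large would be unlikely if the redesign had no effect. A common, though arbitrary, threshold is 0.05.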

Once you have your results you can then choose whether you want to launch the redesign to 100% of users, which you can do through the testing tool whilst you wait for the changes to be hardcoded. As the redesign has already been built for the A/B test, hardcoding it should be a lot quicker!

Step 5 – Evaluative research to validate how your redesign performs

Research shouldn’t stop once the redesign has been launched. We recommend conducting post-launch analysis to evaluate how it performs over time. This especially helps measure metrics that have a longer lead time, such as returns or cancellations.

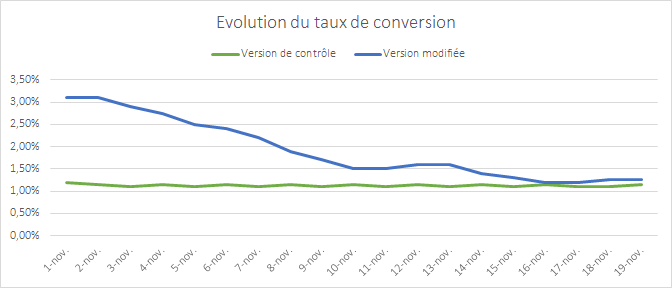

Redesigns are susceptible to visitor bias, as rolling out a completely different experience can be shocking and uncomfortable for your returning visitors. They are also susceptible to novelty effects, where users react more positively just because something looks new and shiny. In either case, these effects will wear off with time. That’s why it’s important to monitor performance after deployment.

Things to look out for:

- Bounce rate

- On-page metrics (scroll rate, click-throughs, heatmap, mouse tracking)

- Conversion rate

- Funnel progression

- Difference in performance for new vs. returning users
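As one way to keep an eye on the last item in this list, the sketch below tracks conversion rate per week for new and returning visitors, which can help reveal an effect that fades or builds over time. The weekly figures are hypothetical and `conversion_by_segment` is an illustrative helper, not a function from any analytics tool.

```python
# Hypothetical weekly post-launch data: (week, segment, visitors, conversions).
weekly = [
    (1, "new", 4000, 120), (1, "returning", 6000, 150),
    (2, "new", 4100, 125), (2, "returning", 5900, 165),
    (3, "new", 3900, 118), (3, "returning", 6100, 189),
]


def conversion_by_segment(rows):
    """Map each segment to a list of (week, conversion_rate), ordered by week."""
    trend = {}
    for week, segment, visitors, conversions in sorted(rows):
        trend.setdefault(segment, []).append((week, conversions / visitors))
    return trend


trend = conversion_by_segment(weekly)

for segment, points in trend.items():
    series = ", ".join(f"week {w}: {r:.2%}" for w, r in points)
    print(f"{segment}: {series}")
```

In this made-up data, returning visitors convert less in the first week and recover afterwards, which is the kind of pattern visitor bias could produce.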

Redesigns are all about preparation. The process may seem exhaustive, but it should be for such a big change. If you follow the right process, you could dramatically increase sales and conversions, but if you get it wrong, you may waste some serious time, effort and money. Don’t skimp on the research, keep a user-centred approach, and you could create a website your audience loves.

If you want to find out more about how a redesign worked for a real customer of AB Tasty and REO, take a look at this webinar, where La Redoute details how they tested the redesign of their site and sought continuous improvement.