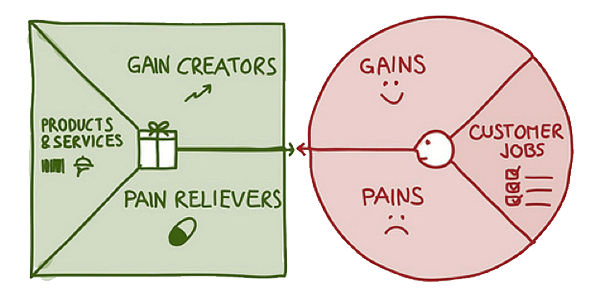

In the vast and competitive world of e-commerce, simply having great products isn’t enough. Your online store is like a stage, and how you present your products can make or break the show. Enter e-merchandising—the art and science of guiding your customers through a shopping journey that’s as smooth as silk and as engaging as a blockbuster movie.

Whether you’re looking to captivate first-time visitors or inspire returning customers, the right merchandising strategies can transform your site from a digital storefront into an experience that keeps customers coming back for more.

Ready to dive in? Here are five e-commerce merchandising strategies, with real-world examples, to help you create a shopping experience that truly shines.

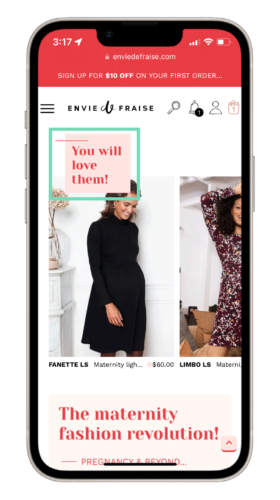

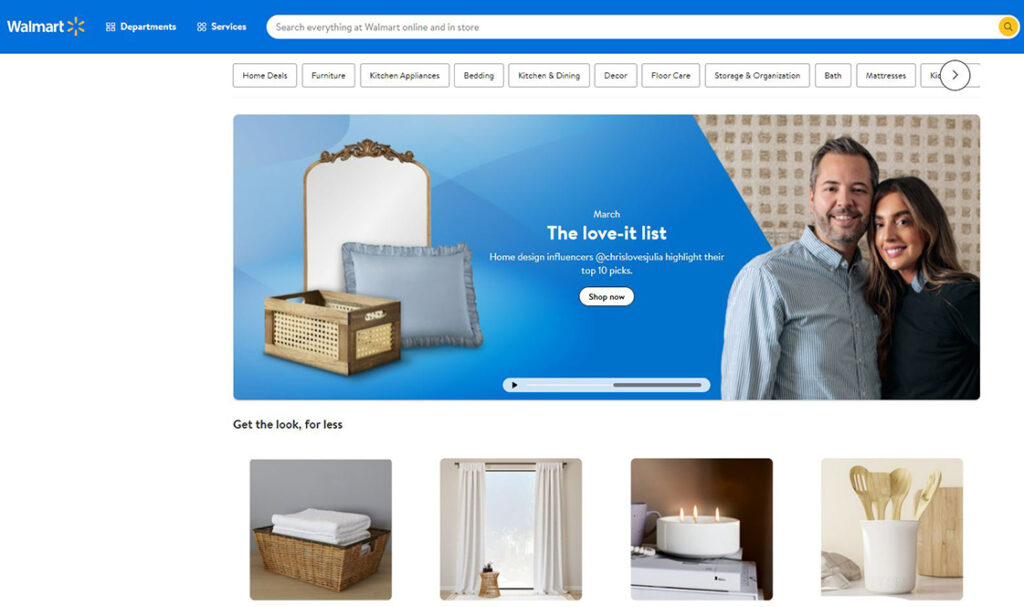

1. Branding and Homepage Messaging: Your Digital First Impression

“Don’t judge a book by its cover” is a great mantra for our personal lives, but it doesn’t apply to the e-commerce world.

Your homepage is more than just a landing page—it’s the welcome mat to your online store, and it needs to speak volumes. From the moment someone lands on your site, they should know who you are, what you stand for, and how you can make their life better.

Why it matters:

- First impressions count. A compelling homepage can turn curious browsers into engaged shoppers.

- Returning visitors want to see something fresh and relevant, not the same old same old.

Pro tips:

- Tell your story boldly: Your brand story should be front and center. Use a powerful tagline or headline that captures your essence.

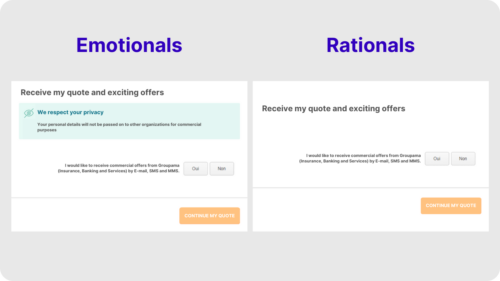

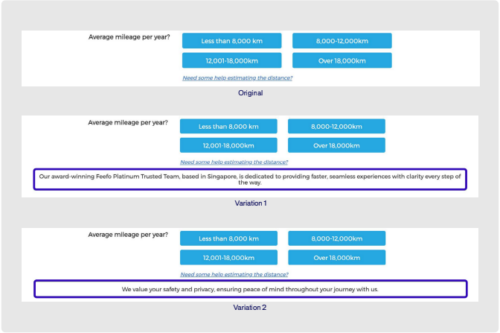

- Test, test, test: Use A/B testing to find out what messaging resonates most with your audience.

- Show, don’t tell: Include social proof like testimonials and customer reviews to build instant credibility.

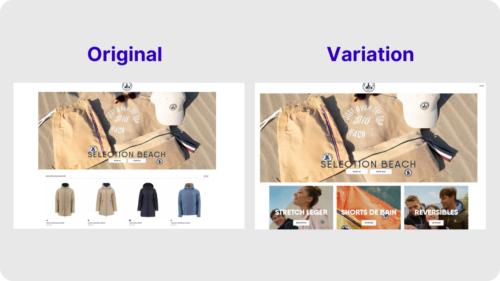

Real-world example: Homepage

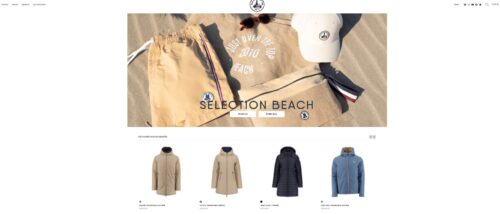

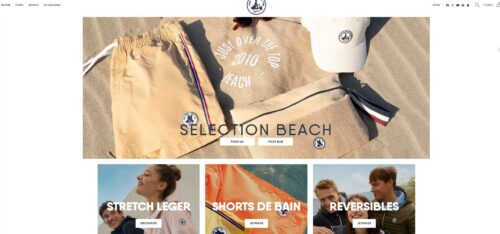

JOTT, a French clothing retailer, noticed that their homepage was experiencing a higher bounce rate than expected. Realizing that first impressions were crucial, they ran a no-code A/B test using AB Tasty’s experience platform to see if rearranging the homepage layout would improve engagement.

By moving the product categories above the fold and pushing individual product displays further down the page, JOTT achieved a 17.5% increase in clicks on the product category blocks. This optimization reduced bounce rates and guided more users deeper into their shopping journey, enhancing overall engagement.
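A lift in clicks is only worth acting on if it is unlikely to be random noise. The source does not describe the statistics AB Tasty uses, and testing platforms may rely on other methods (Bayesian statistics, for example). As a generic illustration, here is a minimal sketch of a classical two-proportion z-test comparing the click-through rates of a control homepage (A) and a variant (B). The visitor and click counts are hypothetical and are not JOTT's data.

```python
import math


def two_proportion_z_test(clicks_a: int, visitors_a: int,
                          clicks_b: int, visitors_b: int) -> tuple[float, float, float]:
    """Compare the click-through rates of control (A) and variant (B).

    Returns (relative_lift, z_score, two_sided_p_value).
    """
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    # Pooled rate under the null hypothesis that both variants perform the same
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal CDF, computed with the error function
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    relative_lift = (rate_b - rate_a) / rate_a
    return relative_lift, z, p_value


# Hypothetical test: 10,000 visitors saw each homepage layout
lift, z, p = two_proportion_z_test(clicks_a=1200, visitors_a=10_000,
                                   clicks_b=1380, visitors_b=10_000)
print(f"lift={lift:.1%}  z={z:.2f}  p={p:.5f}")
```

Here the variant shows a 15% relative lift, and the small p-value suggests the difference is unlikely to be chance. With only a few hundred visitors per variant, the same lift could easily fail to reach significance, which is why sample size matters as much as the headline percentage.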

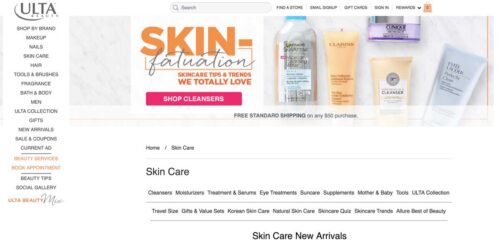

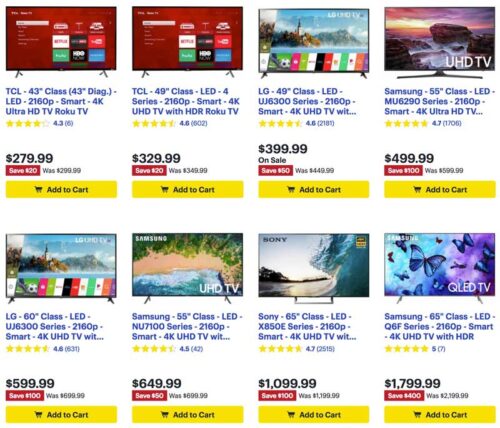

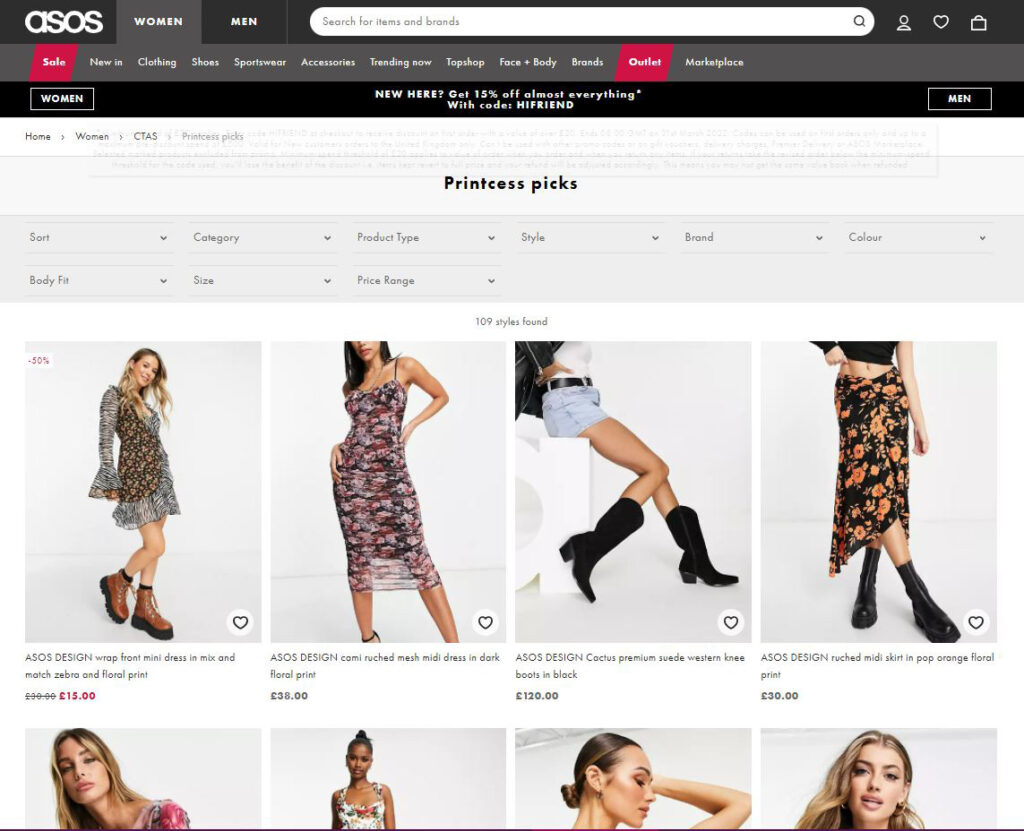

2. Group Merchandise into Collections: Curate Like a Pro

Ever walked into a store and felt overwhelmed by choice? The same thing can happen online. Grouping your products into well-thought-out collections can turn chaos into clarity, making it easier for customers to find what they’re looking for—and maybe even discover something they didn’t know they needed.

Why it matters:

- Curated collections simplify the shopping experience, helping customers quickly find what they’re after.

- They also encourage customers to explore more, potentially increasing their basket size.

Pro tips:

- Get creative with collections: Don’t just stick to the basics. Think outside the box—consider seasonal themes, trending items, or even influencer picks.

- Use data wisely: Analyze purchase patterns to create collections that reflect what customers are actually buying.

- Spotlight special collections: Use banners or pop-ups to draw attention to limited-time offers or new arrivals.

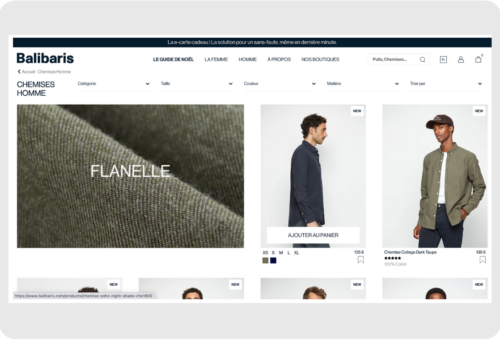

Real-world example: Collections

Balibaris, a leading French men’s fashion brand, revamped its e-commerce strategy by reorganizing its product displays to better match customer preferences and behavior. By sorting products dynamically to emphasize best-sellers and seasonal items, Balibaris saw a significant year-over-year increase in conversion rates, even without special promotions. The change improved the online shopping experience and boosted overall sales, while also freeing the digital team to focus on higher-impact projects.
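The source does not say how Balibaris's dynamic sorting works. As a rough illustration of the idea, here is a hypothetical scoring rule that ranks a collection by recent sales, boosts items tagged as in season, and pushes out-of-stock items to the bottom. The `Product` fields, the 30-day sales window, and the boost factor are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    units_sold_30d: int  # hypothetical: units sold in the last 30 days
    in_season: bool      # hypothetical: tagged as part of the current season
    in_stock: bool


SEASONAL_BOOST = 1.5  # assumption: in-season items get a 50% score bonus


def merchandising_score(p: Product) -> float:
    """Best-sellers score high; in-season items get a multiplier."""
    score = float(p.units_sold_30d)
    if p.in_season:
        score *= SEASONAL_BOOST
    return score


def sort_collection(products: list[Product]) -> list[Product]:
    # Out-of-stock items sink to the bottom regardless of score;
    # within each group, higher scores come first.
    return sorted(products, key=lambda p: (not p.in_stock, -merchandising_score(p)))


catalog = [
    Product("linen-shirt", units_sold_30d=80, in_season=True, in_stock=True),
    Product("wool-coat", units_sold_30d=100, in_season=False, in_stock=True),
    Product("chinos", units_sold_30d=150, in_season=True, in_stock=False),
]
print([p.sku for p in sort_collection(catalog)])
# ['linen-shirt', 'wool-coat', 'chinos']
```

Keeping the ranking logic in a single scoring function makes it easy to try alternative weightings, for example as variants in an A/B test, without touching the rest of the catalog code.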

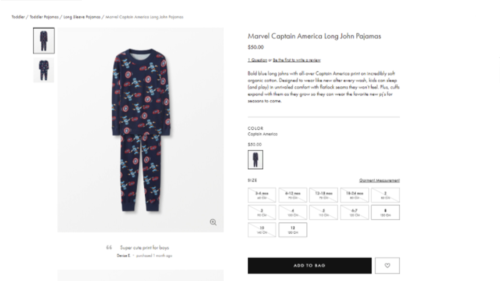

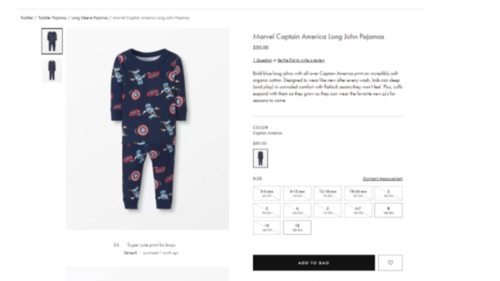

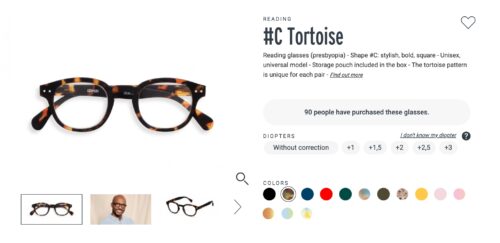

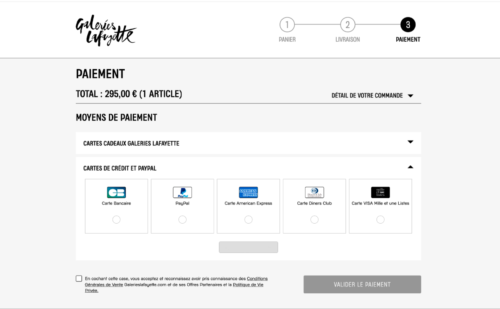

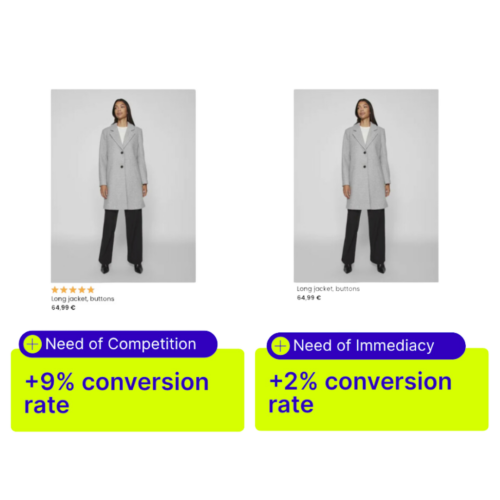

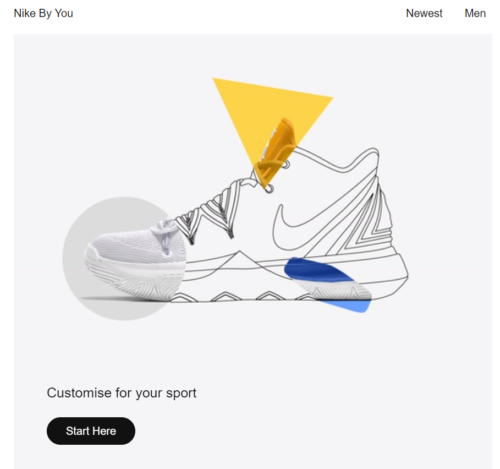

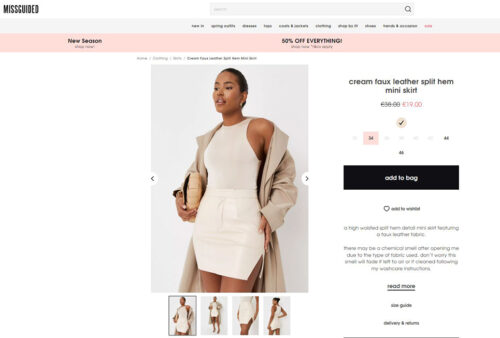

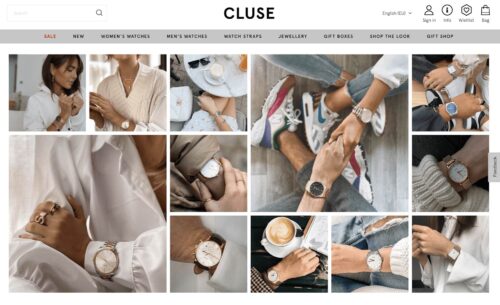

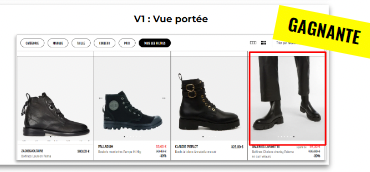

3. Showcase Products with Visual Merchandising: Let Your Images Do the Talking

In the world of e-commerce, a picture is worth a thousand clicks. Visual merchandising isn’t just about slapping up a few product photos; it’s about creating an emotional connection that makes customers want to reach through the screen and grab that item. High-quality images, videos, and even virtual try-ons can bring your products to life and help customers see how they’ll fit into their lives.

Why it matters:

- Stunning visuals can make or break a sale. Online shoppers can’t touch or try on a product, so images have to do that work for them.

- Lifestyle images and videos build an emotional connection, making customers more likely to hit “Add to Cart.”

Pro tips:

- Go high-def: Invest in top-notch photography that shows your products from every angle.

- Tell a story: Use lifestyle images or videos to show how your products can be used in real life.

- Mix it up: Consider adding videos or 360-degree views to give customers a more immersive experience.

Real-world example: Visual Merchandising

Galeries Lafayette, one of France’s most iconic department stores, sought to enhance the online shopping experience by testing the impact of different product image styles. They compared standard packshot images to premium images featuring models wearing the products.

The results were striking: the premium images not only increased clicks by 49% but also boosted the average order value (AOV) by €5.76, adding a potential €114,000 in profit. This shift towards higher-quality visuals resonated with customers, leading Galeries Lafayette to prioritize premium images across their site, significantly improving user engagement and sales.

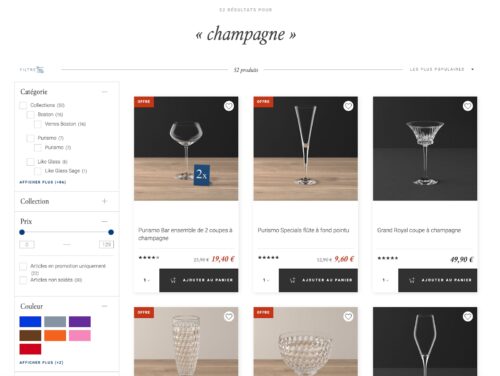

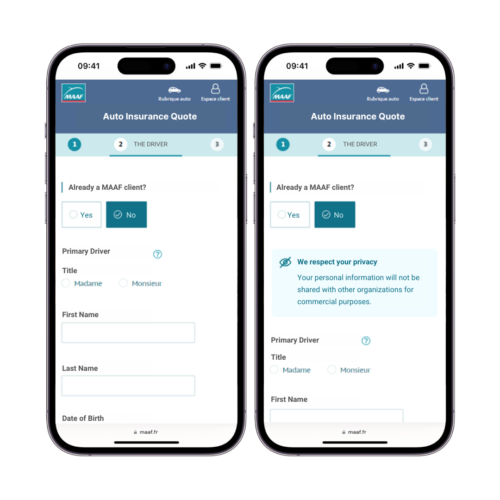

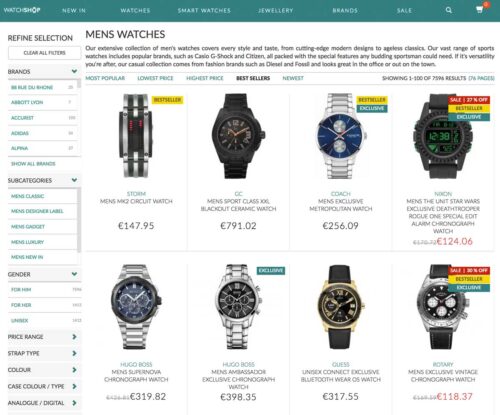

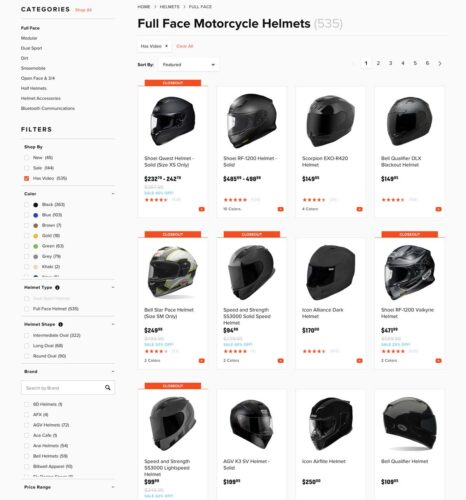

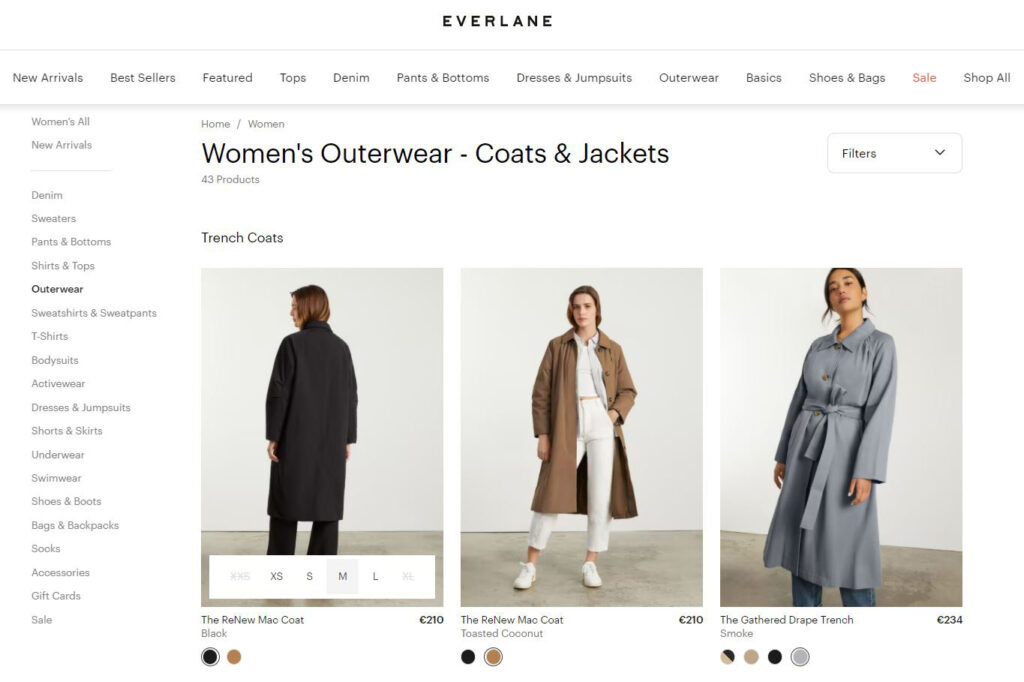

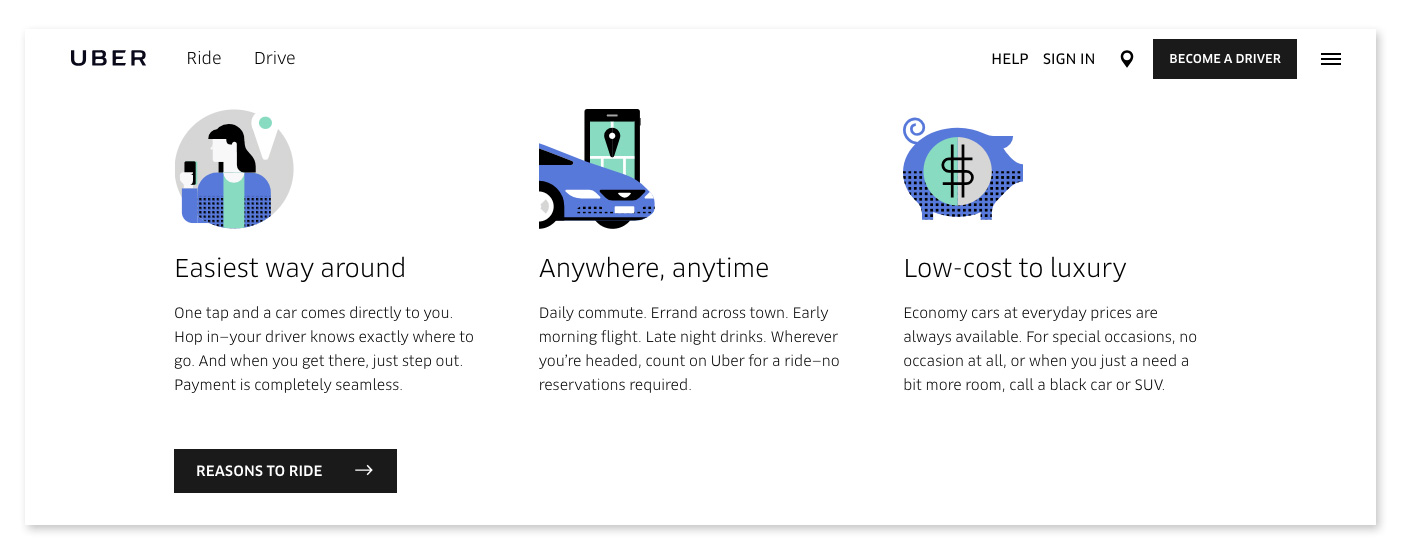

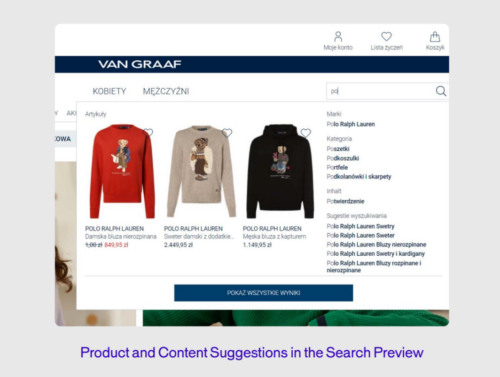

4. Implement Effective Site Search: Help Shoppers Find Their Perfect Match

When a customer knows what they want, nothing should stand in their way—especially not a clunky search function. A well-oiled site search is like a personal shopper, helping customers find exactly what they’re looking for, faster.

Why it matters:

- Customers who use search are often more ready to buy, so it’s crucial that they find what they’re looking for quickly and easily.

- An effective search can turn casual browsers into buyers by surfacing relevant products.

Pro tips:

- Optimize filters & facets: Let customers narrow down their search results with relevant filters like size, color, and price.

- Smart error handling: Make sure your search tolerates typos and recognizes synonyms, because we all make mistakes.

- Autocomplete magic: Help customers out by suggesting popular search terms as they type.

- Never show a dead end: Avoid zero-results pages by offering suggestions or related products instead.
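To make these four tips concrete, here is a toy, in-memory sketch. It is not a production search engine and not how any particular vendor implements search. It uses Python's standard-library `difflib.get_close_matches` for typo tolerance, a hand-written synonym table, exact-match attribute filters as facets, prefix matching over popular queries for autocomplete, and a best-seller fallback so a query never ends on an empty page. The catalog, synonym table, query log, and fallback list are all hypothetical.

```python
import difflib

# Hypothetical catalog: each product has a name plus facet attributes
CATALOG = [
    {"name": "blue denim jacket", "color": "blue", "size": "M", "price": 89.0},
    {"name": "black leather jacket", "color": "black", "size": "L", "price": 199.0},
    {"name": "white cotton shirt", "color": "white", "size": "M", "price": 49.0},
    {"name": "blue linen shirt", "color": "blue", "size": "S", "price": 59.0},
]
SYNONYMS = {"coat": "jacket", "tee": "shirt"}             # hypothetical synonym table
BEST_SELLERS = ["white cotton shirt"]                     # hypothetical fallback list
POPULAR_QUERIES = ["jacket", "jeans", "shirt", "shorts"]  # hypothetical query log

# Every word that appears in a product name
VOCABULARY = sorted({word for p in CATALOG for word in p["name"].split()})


def normalize(term: str) -> str:
    """Map synonyms, then snap a misspelled word to the closest known word."""
    term = SYNONYMS.get(term.lower(), term.lower())
    match = difflib.get_close_matches(term, VOCABULARY, n=1, cutoff=0.75)
    return match[0] if match else term


def search(query: str, **facets) -> list[str]:
    """Return product names matching every query term and every facet filter."""
    terms = [normalize(t) for t in query.split()]
    results = [
        p for p in CATALOG
        if all(t in p["name"].split() for t in terms)
        and all(p.get(key) == value for key, value in facets.items())
    ]
    # Never show a dead end: fall back to best-sellers when nothing matches
    return [p["name"] for p in results] or list(BEST_SELLERS)


def autocomplete(prefix: str, limit: int = 3) -> list[str]:
    """Suggest popular queries that start with what the shopper has typed."""
    prefix = prefix.lower()
    return [q for q in POPULAR_QUERIES if q.startswith(prefix)][:limit]


print(search("jackt"))                # typo corrected to "jacket"
print(search("blue coat"))            # synonym "coat" -> "jacket"
print(search("shirt", color="blue"))  # facet filter on color
print(search("sandals"))              # no match, so best-sellers are shown
print(autocomplete("sh"))
```

Real search engines use inverted indexes and relevance scoring rather than a linear scan, but the sequence is the same: normalize the query, match, filter by facets, and always return something useful.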

Real-world example: Site Search

VAN GRAAF, an international fashion retailer, recognized the need to elevate their online search functionality to meet the high standards of their physical stores. By integrating AB Tasty, VAN GRAAF significantly improved the customer journey on their e-commerce site. The results were impressive: online orders from search increased by 30%, conversion rates rose by 16%, and the average order value (AOV) saw a 5% boost. Additionally, the share of sales from search grew by 4.3%. This transformation not only enhanced the shopping experience but also reduced the time the team spent managing search functionalities, allowing them to focus on other critical optimizations.

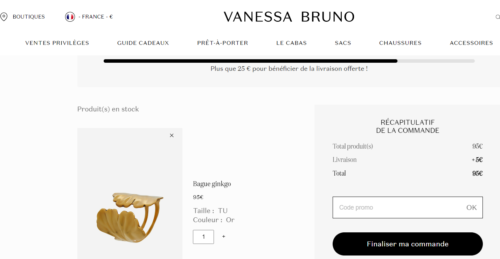

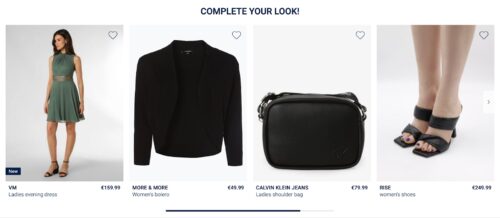

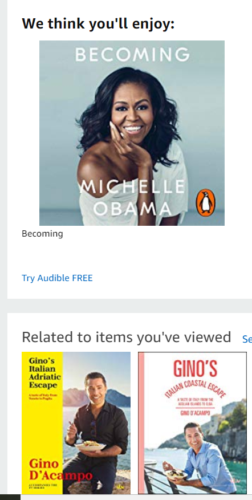

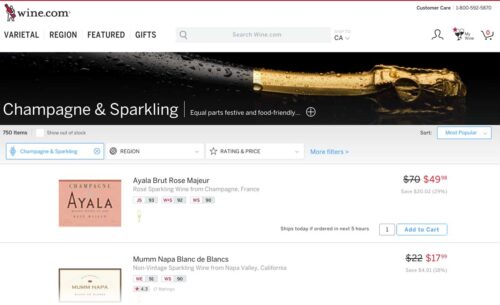

5. Cross-Sell and Up-Sell Products in Your Shopping Cart: The Power of Suggestion

You’ve done the hard work of getting a product into a customer’s cart—now’s your chance to suggest a few more. Cross-selling and up-selling are subtle yet powerful ways to increase the value of each sale by offering customers items that complement what they’ve already chosen.

Why it matters:

- Personalized recommendations can boost your average order value and make customers feel like you really “get” them.

- It’s a win-win—customers discover more great products, and you see a bump in sales.

Pro tips:

- Personalize everything: Use AI to suggest products based on what’s already in the cart or what similar customers have bought.

- Bundle it up: Show products that are frequently bought together as a bundle to encourage more sales.

- Test placement: Experiment with where you place these suggestions—product pages, the shopping cart, or even during checkout.
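The “bundle it up” tip depends on knowing which items are frequently bought together. The simplest version of this is a co-occurrence count over past orders, sketched below with hypothetical order data. AI-driven recommendation engines draw on much richer signals; this sketch only illustrates the underlying idea.

```python
from collections import Counter
from itertools import combinations


def co_purchase_counts(orders: list[set[str]]) -> Counter:
    """Count how often each unordered pair of products appears in the same order."""
    pairs: Counter = Counter()
    for order in orders:
        # Sorting gives each pair a canonical (a, b) order
        for a, b in combinations(sorted(order), 2):
            pairs[(a, b)] += 1
    return pairs


def recommend_for_cart(cart: set[str], pairs: Counter, limit: int = 3) -> list[str]:
    """Suggest the items most often bought with what is already in the cart."""
    scores: Counter = Counter()
    for (a, b), count in pairs.items():
        if a in cart and b not in cart:
            scores[b] += count
        elif b in cart and a not in cart:
            scores[a] += count
    return [item for item, _ in scores.most_common(limit)]


# Hypothetical order history
orders = [
    {"oxford shirt", "tie"},
    {"oxford shirt", "tie", "cufflinks"},
    {"oxford shirt", "cufflinks"},
    {"oxford shirt", "tie"},
    {"chinos", "belt"},
]
pairs = co_purchase_counts(orders)
print(recommend_for_cart({"oxford shirt"}, pairs))
# ['tie', 'cufflinks']
```

Items already in the cart are never suggested again. Once the shopper adds the tie, the same data points to cufflinks as the next suggestion.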

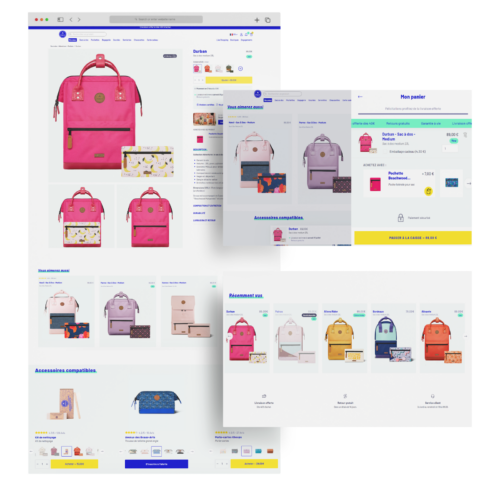

Real-world example: Cross-sell and Up-sell

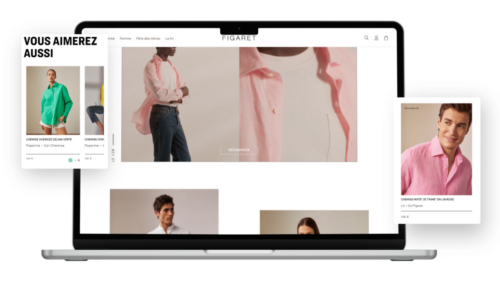

Figaret, a high-end French shirtmaker, significantly boosted its online sales by integrating personalized product recommendations. By strategically placing recommendation blocks on product pages and in the shopping cart, Figaret achieved remarkable results: 6% of visitors used these recommendations, contributing to 10% of the site’s total revenue. Additionally, these users spent on average 1.8 times more than those who didn’t engage with the recommendations. This approach not only enhanced customer engagement but also drove substantial revenue growth.

Measuring Success in E-merchandising: Are You Hitting the Mark?

You’ve put in the work, but how do you know if your e-merchandising strategies are actually working? Measuring success isn’t just about looking at sales numbers; it’s about understanding how each element of your strategy contributes to the bigger picture.

Key Metrics to Watch:

- Website traffic: Keep an eye on where your visitors are coming from and what they’re doing on your site.

- Conversion rate: This is the percentage of visitors who actually make a purchase—one of your most important metrics.

- Sales data: Analyze overall sales, average order value, and revenue from specific merchandising strategies.

- Average basket size: Track how many items customers are purchasing per transaction to gauge the effectiveness of your cross-selling and up-selling efforts.
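Most of these metrics reduce to simple ratios. Here is a minimal sketch, assuming you can export a visitor count and a list of orders. The `Order` structure and its field names are hypothetical and are not the schema of Google Analytics, Matomo, or any other tool.

```python
from dataclasses import dataclass


@dataclass
class Order:
    visitor_id: str
    revenue: float  # order total, e.g. in euros
    items: int      # number of units in the order


def merchandising_metrics(visitors: int, orders: list[Order]) -> dict[str, float]:
    """Compute conversion rate, average order value, and average basket size."""
    if visitors == 0 or not orders:
        return {"conversion_rate": 0.0, "average_order_value": 0.0,
                "average_basket_size": 0.0}
    # A visitor who orders twice still counts as one converted visitor
    buyers = {o.visitor_id for o in orders}
    total_revenue = sum(o.revenue for o in orders)
    return {
        "conversion_rate": len(buyers) / visitors,
        "average_order_value": total_revenue / len(orders),
        "average_basket_size": sum(o.items for o in orders) / len(orders),
    }


orders = [Order("v1", 120.0, 2), Order("v2", 60.0, 1), Order("v1", 90.0, 3)]
print(merchandising_metrics(visitors=100, orders=orders))
# {'conversion_rate': 0.02, 'average_order_value': 90.0, 'average_basket_size': 2.0}
```

Analytics tools do not all define conversion rate the same way. Some divide by sessions rather than unique visitors, so make sure you compare like with like before benchmarking.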

Pro tips:

- Set benchmarks: Compare your metrics against industry standards to see where you stand.

- Use analytics tools: Platforms like Google Analytics or Matomo can give you insights into how visitors interact with your site.

- Keep iterating: Don’t settle for good—strive for better. Regularly review your data and tweak your strategies to keep improving.

Conclusion: Trial and Better—The Heart of E-Merchandising Strategies

E-commerce merchandising isn’t a “set it and forget it” task—it’s a continuous journey of trial, error, and improvement. The best strategies evolve over time as you learn more about your customers and the market. So don’t be afraid to experiment, take risks, and, most importantly, keep pushing for better. Every tweak, test, and change you make is a step towards creating an online store that not only meets but exceeds customer expectations.

Ready to take your e-commerce merchandising to the next level?

Download our comprehensive guide on e-merchandising best practices or schedule a free demo with AB Tasty today. Your journey to better starts now.