Building a culture of experimentation requires an appetite for iteration, a fearless approach to failure, and a test-and-learn mindset. The 1000 Experiments Club podcast digs into all of that and more with some of the most influential voices in the industry.

From CEOs and founders to CRO managers, these experts share the lessons they've learned over careers in experimentation at top tech companies, along with insights on where the optimization industry is heading.

Whether you’re an A/B testing novice or a seasoned pro, here are some of our favorite influencers in CRO and experimentation that you should follow:

Ronny Kohavi

Ronny Kohavi, a pioneer in the field of experimentation, brings over three decades of experience in machine learning, controlled experiments, AI, and personalization.

He was a Vice President and Technical Fellow at Airbnb. Prior to that, he was Technical Fellow and Corporate Vice President at Microsoft, where he led the analysis and experimentation team (ExP). Before that, he was Director of Personalization and Data Mining at Amazon.

Ronny teaches an online interactive course, Accelerating Innovation with A/B Testing, which has been attended by over 800 students.

Ronny’s work has helped lay the foundation for modern online experimentation, influencing how some of the world’s biggest companies approach testing and decision-making.

He advocates a gradual ramp-up rather than launching straight into the typical 50/50 split:

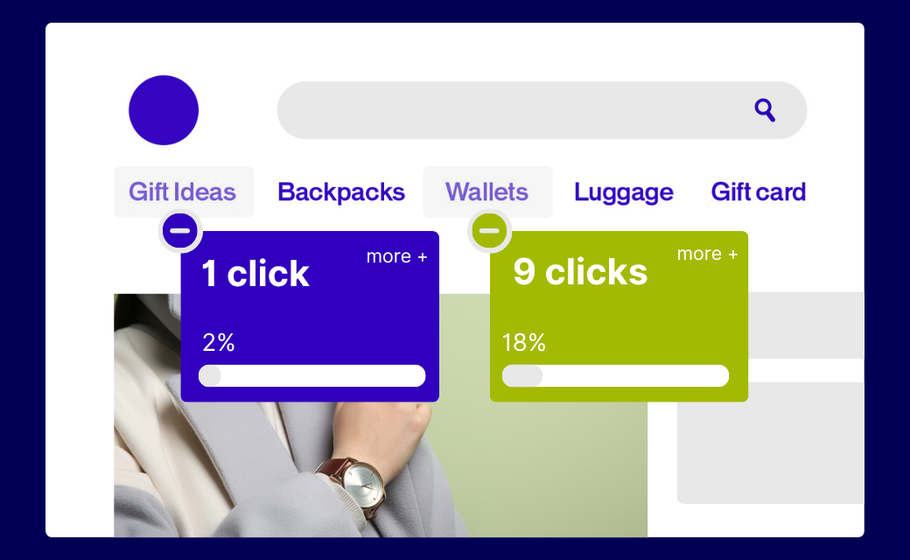

“One thing that turns out to be really useful is to start with a small ramp-up. Even if you plan to go to 50% control and 50% treatment, start at 2%. If something egregious happens—like a metric dropping by 10% instead of the 0.5% you’re monitoring for—you can detect it in near real time.”

This slow ramp-up helps teams catch critical issues early and protect the user experience.
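To make the mechanics concrete, here is a minimal Python sketch of a staged ramp-up. It is not Ronny's method or any testing platform's implementation: the stage percentages, the 5% guardrail threshold, the experiment name, and all function names are hypothetical. Users are hashed into 10,000 buckets so assignment is deterministic, and treatment always takes the lowest-numbered buckets, so anyone exposed at 2% stays in treatment as the ramp widens toward 50/50.

```python
import hashlib

# Hypothetical ramp schedule: share of traffic sent to treatment at each stage.
# The final stage is the planned 50/50 split.
RAMP_STAGES = [0.02, 0.10, 0.50]
NUM_BUCKETS = 10_000


def bucket(user_id: str, experiment: str) -> int:
    """Deterministically map a user to a bucket in [0, NUM_BUCKETS) for this experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % NUM_BUCKETS


def assign(user_id: str, experiment: str, treatment_share: float) -> str:
    """Send the lowest-numbered buckets to treatment, so exposure only grows as the ramp widens."""
    threshold = round(treatment_share * NUM_BUCKETS)
    return "treatment" if bucket(user_id, experiment) < threshold else "control"


def guardrail_ok(control_mean: float, treatment_mean: float, max_drop: float = 0.05) -> bool:
    """Return False if treatment is more than max_drop (relative) below control.

    Assumes higher metric values are better (e.g. conversions or revenue).
    """
    if control_mean == 0:
        return True  # no baseline to compare against
    relative_change = (treatment_mean - control_mean) / control_mean
    return relative_change >= -max_drop


def next_share(current: float, guardrail_passed: bool) -> float:
    """Advance to the next ramp stage, or roll back to 0% treatment if the guardrail failed."""
    if not guardrail_passed:
        return 0.0  # kill switch: everyone goes back to control
    later = [stage for stage in RAMP_STAGES if stage > current]
    return later[0] if later else current


if __name__ == "__main__":
    share = RAMP_STAGES[0]
    users = [f"user-{i}" for i in range(10_000)]
    exposed = sum(assign(u, "new-checkout", share) == "treatment" for u in users)
    print(f"{share:.0%} stage: {exposed} of {len(users)} users in treatment")
    # A 10% drop against a 5% guardrail halts the ramp and returns 0.0.
    print(next_share(share, guardrail_ok(control_mean=100.0, treatment_mean=90.0)))
```

Keeping bucket assignment stable across stages is the key design choice here: if users flipped between variants as the percentage grew, their early exposure would contaminate later measurements.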

Talia Wolf

Talia Wolf is a conversion optimization specialist and founder & CEO of Getuplift, where she helps businesses boost revenue, leads, engagement, and sales through emotional targeting, persuasive design, and behavioral data.

She began her career at a social media agency, where she was introduced to CRO, then served as Marketing Director at monday.com before launching her first agency, Conversioner, in 2013.

Talia teaches companies to optimize their online presence using emotionally driven strategies. She emphasizes that copy and visuals should address customers' needs rather than focusing solely on the product.

For Talia, emotional marketing is inherently customer-centric and research-based. That research then feeds experiments in an A/B testing platform, each measured against a clear North Star metric (checkouts, sign-ups, or add-to-carts, for example) to validate hypotheses and drive growth.

Elissa Quinby

Elissa Quinby is the Head of Product Marketing at e-commerce acceleration platform Pattern, with a career rooted in retail, marketing, and customer experience.

Before joining Pattern, she led retail marketing as Senior Director at Quantum Metric. She began her career as an Assistant Buyer at American Eagle Outfitters, then spent two years at Google as a Digital Marketing Strategist. Elissa went on to spend eight years at Amazon, holding roles across marketing, program management, and product.

Elissa emphasizes the importance of starting small to build trust with new customers. “The goal is to offer value in exchange for data,” she explains, pointing to first-party data as the “secret sauce” behind many successful companies.

She encourages brands to experiment with creative ways of gathering customer information—always with trust at the center—so they can personalize experiences and deepen customer understanding over time.

Lukas Vermeer

Lukas Vermeer, Director of Experimentation at Vista, is an expert in designing, implementing, and scaling experimentation programs. He previously spent over eight years at Booking.com, where he held roles as a product manager, data scientist, and ultimately Director of Experimentation.

With a background in machine learning and AI, Lukas specializes in building the infrastructure and processes needed to scale testing and drive business growth. He also consults with companies to help them launch and accelerate their experimentation efforts.

Given today’s fast-changing environment, Lukas believes that roadmaps should be treated as flexible guides rather than rigid plans:

“I think roadmaps aren’t necessarily bad, but they should acknowledge the fact that there is uncertainty. The deliverable should be clarifications of that uncertainty, rather than saying, ‘In two months, we’ll deliver feature XYZ.’”

Instead of promising final outcomes, Lukas emphasizes embracing uncertainty to make better, data-informed decisions.

Jonny Longden

Jonny Longden is the Chief Growth Officer at Speero, with over 17 years of experience improving websites through data and experimentation. He previously held senior roles at Boohoo Group, Journey Further, Sky, and Visa, where he led teams across experimentation, analytics, and digital product.

Jonny believes that smaller companies and startups, especially in their early, exploratory stages, stand to benefit the most from experimentation.

“Without experimentation, your ideas are probably not going to work,” Jonny says. “The things that seem obvious often don’t deliver results, and the ideas that seem unlikely or even a bit silly can sometimes have the biggest impact.”

For Jonny, experimentation isn’t just a tactic—it’s the only reliable way to uncover what truly works and drive meaningful, data-backed progress.

Ruben de Boer

Ruben de Boer is a Lead CRO Manager at Online Dialogue and founder of Conversion Ideas, with over 14 years of experience in data and optimization.

At Online Dialogue, he leads the team of Conversion Managers—developing skills, maintaining quality, and setting strategy and goals. Through his company, Conversion Ideas, Ruben helps people launch their careers in CRO and experimentation by offering accessible, high-quality courses and resources.

Ruben believes experimentation shouldn’t be judged solely by outcomes. “Roughly 25% of A/B tests result in a winner, meaning 75% of what’s built doesn’t get released—and that can feel like failure if you’re only focused on output,” he explains.

Instead, he urges teams to shift their focus to customer-centric insights. When the goal becomes understanding the user—not just releasing features—the entire purpose of experimentation evolves.

David Mannheim

David Mannheim is a digital experience strategist with over 15 years of experience helping brands like ASOS, Sports Direct, and Boots elevate their conversion strategies.

He is the CEO and founder of Made With Intent, a company focused on advancing innovative approaches to personalization through AI. Previously, he founded User Conversion, which became one of the UK's largest independent CRO consultancies.

David recently authored a book exploring what he calls the missing element in modern personalization: the person. “Remember the first three syllables of personalization,” he says. “That often gets lost in data.”

He advocates for shifting focus from short-term gains to long-term customer value—emphasizing metrics like satisfaction, loyalty, and lifetime value over volume-based wins.

“More quality than quantity,” David explains, “and more recognition of the intangibles—not just the tangibles—puts brands in a much better place.”

Marianne Stjernvall

Marianne Stjernvall has over a decade of experience in CRO and experimentation, having executed more than 500 A/B tests and helped over 30 organizations grow their testing programs.

Marianne is the founder of Queen of CRO and co-founder of ConversionHub, Sweden’s most senior CRO agency. As an established CRO consultant, she helps organizations build experimentation-led cultures grounded in data and continuous learning.

Marianne also teaches regularly, sharing her expertise on the full spectrum of CRO, A/B testing, and experimentation execution.

She stresses the importance of a centralized testing approach:

“If each department runs experiments in isolation, you risk making decisions based on three different data sets, since teams will be analyzing different types of data. Having clear ownership and a unified framework ensures the organization works cohesively with tests.”

Ben Labay

Ben Labay is the CEO of Speero, blending academic rigor in statistics with deep expertise in customer experience and UX.

Holding degrees in Evolutionary Behavior and Conservation Research Science, Ben began his career as a staff researcher at the University of Texas, specializing in data modeling and research.

This foundation informs his work at Speero, where he helps organizations leverage customer data to make better decisions.

Ben emphasizes that insights should lead to action and reveal meaningful patterns. “Every agency and in-house team collects data and tests based on insights, but you can’t stop there.”

Passionate about advancing experimentation, Ben focuses on developing new models, applying game theory, and embracing bold innovation to uncover bigger, disruptive insights.

André Morys

André Morys, CEO and founder of konversionsKRAFT, has nearly three decades of experience in experimentation, digital growth, and e-commerce optimization.

Fueled by a deep fascination with user and customer experience, André guides clients through the experimentation process using a blend of data, behavioral economics, consumer psychology, and qualitative research.

He believes the most valuable insights lie beneath the surface. “Most people underestimate the value of experimentation because of the factors that are hard to measure,” André explains.

“You cannot measure the influence of experimentation on your company’s culture, yet that impact may be ten times more important than the immediate uplift you create.”

This philosophy is central to his “digital experimentation framework,” which features his signature “Iceberg Model” to capture both measurable and intangible effects of testing.

Jeremy Epperson

Jeremy Epperson is the founder of Thetamark and has dedicated 14 years to conversion rate optimization and startup growth. He has worked with some of the fastest-growing unicorn startups in the world, researching, building, and implementing CRO programs for more than 150 growth-stage companies.

By gathering insights from diverse businesses, Jeremy has developed a data-driven approach to identify testing roadblocks, allowing him to optimize CRO processes and avoid the steep learning curves often associated with new launches.

In his interview, Jeremy emphasizes focusing on customer experience to drive growth. He explains, “We will do better as a business when we give the customer a better experience, make their life easier, simplify conversion, and eliminate the roadblocks that frustrate them and cause abandonment.”

His ultimate goal with experimentation is to create a seamless process from start to finish.

Chad Sanderson

Chad Sanderson is the CEO and founder of Gable, a B2B data infrastructure SaaS company, and a renowned expert in digital experimentation and large-scale analysis.

He is also a product manager, public speaker, and writer who has lectured on topics such as the statistics of digital experimentation, advanced analysis techniques, and small-scale testing for small businesses.

Chad previously served as Senior Program Manager for Microsoft’s AI platform and was the Personalization Manager for Subway’s experimentation team.

He advises distinguishing between front-end (client-side) and back-end metrics before running experiments. Client-side metrics, such as revenue per transaction, are easier to track but may narrow focus to revenue growth alone.

“One set of metrics businesses mess up is relying only on client-side metrics like revenue per purchase,” Chad explains. “While revenue is important, focusing solely on it can drive decisions that overlook the overall impact of a feature.”

Carlos Gonzalez de Villaumbrosia

Carlos Gonzalez de Villaumbrosia has spent the past 12 years building global companies and digital products.

With a background in Global Business Management and Marketing, Computer Science, and Industrial Engineering, Carlos founded Floqq—Latin America’s largest online education marketplace.

In 2014, he founded Product School, now the global leader in Product Management training.

Carlos believes experimentation has become more accessible and essential for product managers. “You no longer need a background in data science or engineering to be effective,” he says.

He views product managers as central figures at the intersection of business, design, engineering, customer success, data, and sales. Success in this role requires skills in experimentation, roadmapping, data analysis, and prototyping—making experimentation a core competency in today’s product landscape.

Bhavik Patel

Bhavik Patel is the Data Director at Huel, an AB Tasty customer, and the founder of CRAP Talks, a meetup series connecting CRO professionals across Conversion Rate, Analytics, and Product.

Previously, he served as Product Analytics & Experimentation Director at Lean Convert, where he led testing and optimization strategies for top brands. With deep expertise in personalization, experimentation, and data-driven decision-making, Bhavik helps teams evolve from basic A/B testing to strategic, high-impact programs that create better digital experiences.

His philosophy centers on disruptive testing—bold experiments aimed at breaking past local maximums to deliver statistically meaningful results. “Once you’ve nailed the fundamentals, it’s time to make bigger bets,” he says.

Bhavik also stresses the importance of identifying the right problem before jumping to solutions: “The best solution for the wrong problem isn’t going to have any impact.”

Rand Fishkin

Rand Fishkin is the co-founder and CEO of SparkToro, creators of audience research software designed to make audience insights accessible to all.

He also founded Moz and co-founded Inbound.org with Dharmesh Shah; Inbound.org was later acquired by HubSpot in 2014. Rand is a frequent global keynote speaker on marketing and entrepreneurship, dedicated to helping people improve their marketing efforts.

Rand highlights the untapped potential in niche markets:

“Many founders don’t consider the power of serving a small, focused group of people—maybe only a few thousand—who truly need their product. If you make it for them, they’ll love it. There’s tremendous opportunity there.”

A strong advocate for risk-taking and experimentation, Rand encourages marketers to identify where their audiences are and engage them directly there.

Shiva Manjunath

Shiva Manjunath is the Senior Web Product Manager of CRO at Motive and host of the podcast From A to B. With experience at companies like Gartner, Norwegian Cruise Line, and Edible, he’s spent years digging into user behavior and driving real results through experimentation.

Shiva is known for challenging the myth of “best practices,” emphasizing that optimization requires context, not checklists. “If what you believe is this best practice checklist nonsense, all CRO is just a checklist of tasks to do on your site. And that’s so incorrect,” he says.

At Gartner, a simplified form (typically seen as a CRO win) led to a drop in conversions, reinforcing his belief that true experimentation is about understanding why users act, not just what they do.

Through his work and podcast, Shiva aims to demystify CRO and encourage practitioners to think deeper, test smarter, and never stop asking questions.
